New virtual reality array allows immersive experience without the disorienting 3-D goggles

The University of Pennsylvania has installed a virtual reality system that allows a participant full-body interaction with a virtual environment without the hassle of bulky, dizzying 3-D glasses. The system will be demonstrated for journalists and others Thursday, May 15.

Key to the installation, dubbed LiveActor, is the pairing of two technologies: an optical motion capture system that monitors the body's movements and a stereo projection system that immerses users in a virtual environment. The combination lets users interact with characters embedded within virtual worlds.

“Traditional virtual reality experiences offer limited simulations and interactions through tracking of a few sensors mounted on the body,” said Norman I. Badler, professor of computer and information science and director of Penn’s Center for Human Modeling and Simulation. “LiveActor permits whole-body tracking and doesn’t require clunky 3-D goggles, resulting in a more realistic experience.”

LiveActor users wear a special suit that positions 30 sensors on different parts of the body. The system tracks these sensors as the user moves around a stage roughly 10 feet by 20 feet, so that a virtual character, such as a dancing, computer-generated Ben Franklin (Penn's founder), can recreate the user's movements with great precision and without a noticeable time lag. The system can also project images onto the array of screens surrounding the LiveActor stage, allowing users to interact with a variety of virtual environments.

LiveActor's creators envision a range of applications and plan to make the system available to companies and researchers. Undergraduates have already used LiveActor to create a startlingly realistic but completely imaginary 3-D chapel. The array could be used to generate footage of virtual characters in movies, sidestepping arduous frame-by-frame drawing. LiveActor could also help people with post-traumatic stress disorder face their fears in a comfortable, controlled environment.

“The system is much more than the sum of its parts,” Badler said. “Motion capture has traditionally been used for animation, game development and human performance analysis, but with LiveActor users can delve deeper into virtual worlds. The system affords a richer set of interactions with both characters and objects in the virtual environment.”

While stereo projection systems have in the past been limited to relatively static observation and navigation (such as architectural walk-throughs, games and medical visualizations), LiveActor can be used to simulate nearly any environment or circumstance, chart user reactions and train users to behave in new ways. Unlike real people, virtual characters can be scripted to behave the same way every time.

LiveActor was made possible through a grant from the National Science Foundation with matching funding by Penn’s School of Engineering and Applied Science, as well as equipment grants from Ascension Technology Corporation and EON Reality.

More Information:

http://www.upenn.edu/

Engineering and research-driven innovations in the field of communications are addressed here, in addition to business developments in the field of media-wide communications.

innovations-report offers informative reports and articles related to interactive media, media management, digital television, E-business, online advertising and information and communications technologies.

Newest articles

A universal framework for spatial biology

SpatialData is a freely accessible tool to unify and integrate data from different omics technologies accounting for spatial information, which can provide holistic insights into health and disease. Biological processes…

How complex biological processes arise

A $20 million grant from the U.S. National Science Foundation (NSF) will support the establishment and operation of the National Synthesis Center for Emergence in the Molecular and Cellular Sciences (NCEMS) at…

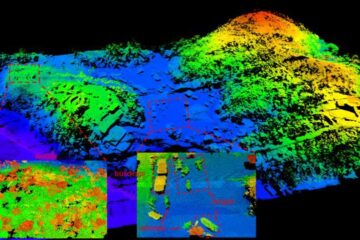

Airborne single-photon lidar system achieves high-resolution 3D imaging

Compact, low-power system opens doors for photon-efficient drone and satellite-based environmental monitoring and mapping. Researchers have developed a compact and lightweight single-photon airborne lidar system that can acquire high-resolution 3D…