Computer program looks five minutes into the future

When will you do what? Prof. Jürgen Gall (right) and Yazan Abu Farha from the Institute of Computer Science at the University of Bonn. © Photo: Barbara Frommann/Uni Bonn

The perfect butler, as every fan of British social drama knows, has a special ability: He senses his employer’s wishes before they have even been uttered. Prof. Dr. Jürgen Gall’s research group wants to teach computers something similar: “We want to predict the timing and duration of activities – minutes or even hours before they happen”, he explains.

A kitchen robot, for example, could then hand over ingredients as soon as they are needed, preheat the oven in time and warn the chef if he is about to forget a preparation step. An automatic vacuum cleaner, meanwhile, would know that it has no business in the kitchen at that time and would clean the living room instead.

We humans are very good at anticipating the actions of others. For computers, however, this discipline is still in its infancy. The researchers at the Institute of Computer Science at the University of Bonn can now report a first success: They have developed self-learning software that estimates the timing and duration of future activities with surprising accuracy for periods of several minutes.

Training data: four hours of salad videos

The training data used by the scientists comprised 40 videos in which performers prepare different salads. Each recording was around 6 minutes long and contained an average of 20 different actions. The videos were also annotated with the exact time at which each action started and how long it lasted.

The computer “watched” these salad videos, around four hours of footage in total. In this way, the algorithm learned which actions typically follow each other during this task and how long they last. This is by no means trivial: After all, every chef has his own approach, and the sequence may also vary depending on the recipe.
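The article does not spell out how the software learns these regularities, so the following Python sketch uses a deliberately simple statistical stand-in, not the authors’ model. Assuming each annotated video is a list of (action, duration in seconds) segments (a hypothetical format), it counts which action follows which, averages each action’s duration, and then forecasts by repeatedly chaining the most frequent successor. The names `fit` and `forecast` are illustrative only.

```python
from collections import Counter, defaultdict

# Hypothetical annotation format: each video is a list of
# (action_label, duration_in_seconds) segments in the order they occur.
Segment = tuple[str, float]


def fit(videos: list[list[Segment]]):
    """Count which action follows which, and average each action's duration."""
    transitions: dict[str, Counter] = defaultdict(Counter)
    durations: dict[str, list[float]] = defaultdict(list)
    for video in videos:
        # Pair every segment with the segment that follows it.
        for (action, _), (next_action, _) in zip(video, video[1:]):
            transitions[action][next_action] += 1
        for action, seconds in video:
            durations[action].append(seconds)
    mean_duration = {a: sum(d) / len(d) for a, d in durations.items()}
    return transitions, mean_duration


def forecast(transitions, mean_duration, current: str, elapsed: float, horizon: float):
    """Greedily chain the most frequent successor until `horizon` seconds are covered.

    `current` is the action under way now, `elapsed` how long it has been running.
    Returns (action, start_offset, duration) tuples, with offsets measured from now.
    """
    plan = []
    t = 0.0
    action = current
    # Assume the current action lasts its average duration in total.
    length = max(mean_duration.get(current, 0.0) - elapsed, 0.0)
    while t < horizon:
        if length > 0:
            plan.append((action, t, min(length, horizon - t)))
            t += length
        successors = transitions.get(action)
        if not successors:
            break  # no known follow-up action: stop forecasting
        action = successors.most_common(1)[0][0]
        length = mean_duration[action]
        if length <= 0:
            break  # guard against zero-length actions looping forever
    return plan
```

Always picking the single most frequent successor ignores the other orderings that different chefs use, so a wrong guess early on shifts everything after it. That is one intuitive reason why forecasts of this kind get harder the further ahead they reach.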

“Then we tested how successful the learning process was”, explains Gall. “For this we confronted the software with videos that it had not seen before.” The new clips did, however, stay within the same context: They also showed the preparation of a salad. For the test, the computer was told what happens in the first 20 or 30 percent of each new video. On this basis, it then had to predict what would happen during the rest of the film.

That worked amazingly well. Gall: “Accuracy was over 40 percent for short forecast periods, but then dropped the more the algorithm had to look into the future.” For activities more than three minutes in the future, the computer was still right in 15 percent of cases. A prediction only counted as correct, however, if both the activity and its timing were predicted correctly.
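To make the test procedure and the scoring rule concrete, here is a sketch under simple assumptions: each video is expanded into one action label per second (hypothetical `to_frames`), the first 20 or 30 percent is treated as observed (hypothetical `split_observed`), and a forecast is scored frame by frame over the rest. Because a frame only counts when the predicted action matches the true action at that moment, a forecast with the right actions but the wrong timing still loses points. This is an illustrative frame-wise accuracy; the exact metric used in the publication may differ.

```python
# Hypothetical annotation format: (action_label, duration_in_seconds) segments.
Segment = tuple[str, float]


def to_frames(segments: list[Segment], fps: int = 1) -> list[str]:
    """Expand segments into one action label per frame (default: one per second)."""
    labels: list[str] = []
    for action, seconds in segments:
        labels.extend([action] * round(seconds * fps))
    return labels


def split_observed(labels: list[str], observed_fraction: float):
    """Split a video into the observed prefix (e.g. 0.2 or 0.3) and the part to predict."""
    cut = round(len(labels) * observed_fraction)
    return labels[:cut], labels[cut:]


def accuracy(predicted: list[str], truth: list[str]) -> float:
    """Share of ground-truth frames whose action was predicted at exactly that frame.

    A frame only counts if the predicted action is right at the right time;
    frames the forecast does not cover count as wrong.
    """
    if not truth:
        return 0.0
    hits = sum(p == t for p, t in zip(predicted, truth))
    return hits / len(truth)
```

For example, suppose a 100-second video consists of 20 seconds of peeling, 30 of cutting and 50 of mixing, and the first 20 percent is observed. A forecast of 40 seconds of cutting followed by 40 of mixing scores 1.0 over the first 30 predicted seconds but only 0.875 over all 80, because the ten seconds in which it still says “cutting” are wrong.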

Gall and his colleagues see the study only as a first step into the new field of activity prediction, not least because the algorithm performs noticeably worse when it has to recognize on its own what happens in the first part of the video instead of being told. This automatic recognition is never 100 percent correct; Gall speaks of “noisy” data. “Our process does work with it”, he says. “But unfortunately nowhere near as well.”

The study was carried out as part of a research group dedicated to the prediction of human behavior and was funded by the German Research Foundation (DFG).

Publication: Yazan Abu Farha, Alexander Richard and Jürgen Gall: When will you do what? – Anticipating Temporal Occurrences of Activities. IEEE Conference on Computer Vision and Pattern Recognition 2018; http://pages.iai.uni-bonn.de/gall_juergen/download/jgall_anticipation_cvpr18.pdf

Sample test videos and predictions derived from them are available at https://www.youtube.com/watch?v=xMNYRcVH_oI

Contact:

Prof. Dr. Jürgen Gall

Institute of Computer Science

University of Bonn

Tel. +49(0)228/7369600

E-mail: gall@informatik.uni-bonn.de

More Information:

http://www.uni-bonn.de/All latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

A universal framework for spatial biology

SpatialData is a freely accessible tool to unify and integrate data from different omics technologies accounting for spatial information, which can provide holistic insights into health and disease. Biological processes…

How complex biological processes arise

A $20 million grant from the U.S. National Science Foundation (NSF) will support the establishment and operation of the National Synthesis Center for Emergence in the Molecular and Cellular Sciences (NCEMS) at…

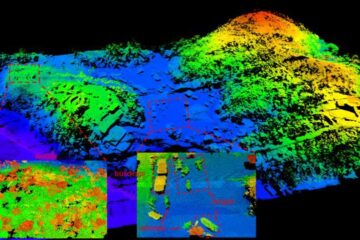

Airborne single-photon lidar system achieves high-resolution 3D imaging

Compact, low-power system opens doors for photon-efficient drone and satellite-based environmental monitoring and mapping. Researchers have developed a compact and lightweight single-photon airborne lidar system that can acquire high-resolution 3D…