Computational model sheds light on how the brain recognizes objects

Researchers at MIT’s McGovern Institute for Brain Research have developed a new mathematical model to describe how the human brain visually identifies objects. The model accurately predicts human performance on certain visual-perception tasks, which suggests that it captures something of what actually happens in the brain. It could also help improve computer object-recognition systems.

The model was designed to reflect neurological evidence that in the primate brain, object identification — deciding what an object is — and object location — deciding where it is — are handled separately. “Although what and where are processed in two separate parts of the brain, they are integrated during perception to analyze the image,” says Sharat Chikkerur, lead author on a paper appearing this week in the journal Vision Research, which describes the work. “The model that we have tries to explain how this information is integrated.”

The mechanism of integration, the researchers argue, is attention. According to their model, when the brain is confronted by a scene containing a number of different objects, it can’t keep track of all of them at once. So instead it creates a rough map of the scene that simply identifies some regions as being more visually interesting than others. If it’s then called upon to determine whether the scene contains an object of a particular type, it begins by searching — turning its attention toward — the regions of greatest interest.
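The search strategy described above can be illustrated with a minimal Python sketch. It assumes that the rough map is simply a dictionary from region names to interest scores, and that some detector, represented here by the hypothetical `contains_target` callable, can check one region at a time. Both are illustrative assumptions, not part of the researchers' software.

```python
from typing import Callable, Dict, Optional, Tuple


def attend_and_search(
    interest: Dict[str, float],
    contains_target: Callable[[str], bool],
) -> Tuple[Optional[str], int]:
    """Visit regions from most to least interesting and stop at the first hit.

    Returns the region where the target was found (or None) and how many
    regions were examined along the way.
    """
    examined = 0
    # Highest interest first; ties are broken by region name for determinism.
    for region in sorted(interest, key=lambda r: (-interest[r], r)):
        examined += 1
        if contains_target(region):  # hypothetical per-region detector
            return region, examined
    return None, examined


if __name__ == "__main__":
    # Invented rough map of a street scene: region name -> interest score.
    rough_map = {"sky": 0.05, "road": 0.6, "sidewalk": 0.3, "building": 0.05}
    pedestrians_at = {"sidewalk"}
    print(attend_and_search(rough_map, lambda r: r in pedestrians_at))  # ('sidewalk', 2)
```

Here the sketch checks the road first and finds the pedestrians in the second region it examines, so the low-interest sky and building are never visited.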

Chikkerur and Tomaso Poggio, the Eugene McDermott Professor in the Department of Brain and Cognitive Sciences and at the Computer Science and Artificial Intelligence Laboratory, together with graduate student Cheston Tan and former postdoc Thomas Serre, implemented the model in software, then tested its predictions against data from experiments with human subjects. The subjects were asked first to simply regard a street scene depicted on a computer screen, then to count the cars in the scene, and then to count the pedestrians, while an eye-tracking system recorded their eye movements. The software predicted with great accuracy which regions of the image the subjects would attend to during each task.

The software’s analysis of an image begins with the identification of interesting features — rudimentary shapes common to a wide variety of images. It then creates a map that depicts which features are found in which parts of the image. But thereafter, shape information and location information are processed separately, as they are in the brain.

The software creates a list of all the interesting features in the feature map and, from that, another list of all the objects that contain those features. But it doesn’t record any information about where or how frequently the features occur.

At the same time, it creates a spatial map of the image that indicates where interesting features are to be found, but not what sorts of features they are.
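The split between the two lists and the spatial map can be illustrated with a small Python sketch. The feature names, grid coordinates and object models are invented for illustration. Treating an object as a candidate when it contains at least one detected feature is an assumption about how the object list is built, not a detail taken from the paper.

```python
from typing import Dict, List, Set, Tuple

Location = Tuple[int, int]
# Hypothetical feature map: one (feature name, grid location) pair per detection.
FeatureMap = List[Tuple[str, Location]]


def split_what_where(
    feature_map: FeatureMap, object_models: Dict[str, Set[str]]
) -> Tuple[Set[str], Set[str], Set[Location]]:
    """Separate a feature map into a 'what' stream and a 'where' stream."""
    # "What": which features occur at all, with no location or count.
    features_present = {feature for feature, _ in feature_map}
    # Candidate objects: those containing at least one present feature (assumption).
    candidate_objects = {
        obj for obj, feats in object_models.items() if feats & features_present
    }
    # "Where": which locations hold some feature, with no feature identity.
    interesting_locations = {loc for _, loc in feature_map}
    return features_present, candidate_objects, interesting_locations


if __name__ == "__main__":
    street = [("wheel", (2, 3)), ("wheel", (2, 5)), ("window", (1, 4)), ("leg", (3, 0))]
    models = {
        "car": {"wheel", "window", "door"},
        "pedestrian": {"leg", "head"},
        "tree": {"trunk", "leaf"},
    }
    what, objects, where = split_what_where(street, models)
    print(sorted(what))     # ['leg', 'wheel', 'window']
    print(sorted(objects))  # ['car', 'pedestrian']
    print(sorted(where))    # [(1, 4), (2, 3), (2, 5), (3, 0)]
```

Neither output carries the other's information: the feature set does not say where the wheel appears or that it appears twice, and the location set does not say which feature sits at each spot.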

It does, however, interpret the “interestingness” of the features probabilistically. If a feature occurs more than once, its interestingness is spread out across all the locations at which it occurs. If another feature occurs at only one location, its interestingness is concentrated at that one location.
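The spreading rule can be written as a few lines of Python. As a simplifying assumption, every feature type starts with one unit of interestingness, divided equally among the locations where it occurs. The paper frames attention as Bayesian inference, and this sketch is only a rough stand-in for that machinery.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Location = Tuple[int, int]


def spread_interest(feature_map: List[Tuple[str, Location]]) -> Dict[Location, float]:
    """Split each feature type's unit of interest evenly over its occurrences.

    Assumes each (feature, location) pair appears at most once in feature_map.
    """
    occurrences = Counter(feature for feature, _ in feature_map)
    interest: Dict[Location, float] = defaultdict(float)
    for feature, loc in feature_map:
        # A feature seen n times contributes 1/n at each of its locations.
        interest[loc] += 1.0 / occurrences[feature]
    return dict(interest)


if __name__ == "__main__":
    scene = [("corner", (0, 0)), ("corner", (0, 1)), ("curve", (1, 1))]
    print(spread_interest(scene))  # {(0, 0): 0.5, (0, 1): 0.5, (1, 1): 1.0}
```

The repeated corner feature ends up with half a unit at each of its two locations, while the single curve keeps its full unit at one spot.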

Mathematically, this is a natural consequence of separating information about objects’ identity and location and interpreting the results probabilistically. But it ends up predicting another aspect of human perception, a phenomenon called “pop out.” A human subject presented with an image of, say, one square and one star will attend to both objects about equally. But a human subject presented with an image of one square and a dozen stars will tend to focus on the square.
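The arithmetic behind pop out follows from the spreading rule, under the simplifying assumption that each shape counts as a single feature type carrying one unit of interestingness, divided equally among its n_f occurrences. The interest I at a location ℓ is then:

```latex
\[
  I(\ell) \;=\; \sum_{f \text{ at } \ell} \frac{1}{n_f}
\]
\[
  \text{one square, one star:}\quad
  I(\text{square}) = \tfrac{1}{1} = 1, \qquad
  I(\text{star}) = \tfrac{1}{1} = 1
\]
\[
  \text{one square, twelve stars:}\quad
  I(\text{square}) = \tfrac{1}{1} = 1, \qquad
  I(\text{each star}) = \tfrac{1}{12} \approx 0.083
\]
```

In the second case the square is twelve times as interesting as any individual star, which matches the human tendency to focus on it.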

Like a human asked to perform a visual-perception task, the software can adjust its object and location models on the fly. If it is asked to identify only the objects at a particular location in the image, it crosses off its list of possible objects any that don’t contain the features found at that location.
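A minimal Python sketch of this location-driven pruning follows. The object models are invented, and reading “contain the features” as “contain every feature observed at that location” is an assumption rather than a detail from the paper.

```python
from typing import Dict, List, Set, Tuple

Location = Tuple[int, int]


def attend_to_location(
    feature_map: List[Tuple[str, Location]],
    object_models: Dict[str, Set[str]],
    candidates: Set[str],
    location: Location,
) -> Set[str]:
    """Keep only candidate objects whose model includes every feature seen at `location`."""
    features_here = {feature for feature, loc in feature_map if loc == location}
    # If nothing was detected at the location, no candidate is ruled out.
    return {obj for obj in candidates if features_here <= object_models[obj]}


if __name__ == "__main__":
    street = [("wheel", (2, 3)), ("window", (2, 3)), ("leg", (3, 0))]
    models = {
        "car": {"wheel", "window", "door"},
        "bus": {"wheel", "window", "door"},
        "bicycle": {"wheel", "pedal"},
        "pedestrian": {"leg", "head"},
    }
    remaining = attend_to_location(street, models, set(models), (2, 3))
    print(sorted(remaining))  # ['bus', 'car']
```

The bicycle is crossed off because its model has no window, and the pedestrian because it has neither a wheel nor a window.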

By the same token, if it’s asked to search the image for a particular kind of object, the interestingness of features not found in that object will go to zero, and the interestingness of features found in the object will increase proportionally. This is what allows the system to predict the eye movements of humans viewing a digital image.

It is also the aspect of the system that could aid the design of computer object-recognition systems. A typical object-recognition system, when asked to search an image for multiple types of objects, will search through the entire image looking for features characteristic of the first object, then search through the entire image looking for features characteristic of the second object, and so on. A system like Poggio and Chikkerur’s, however, could limit successive searches to just those regions of the image that are likely to have features of interest.
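The reweighting can be sketched in Python as follows. As simplifying assumptions, every feature type present in the image starts with equal weight, and the weight taken from non-target features is shared out so that each target feature is scaled up by the same factor and the total is unchanged. The function name and the data are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple

Location = Tuple[int, int]


def feature_based_attention(
    feature_map: List[Tuple[str, Location]], target_features: Set[str]
) -> Dict[Location, float]:
    """Reweight location interest when searching for one kind of object."""
    present = {feature for feature, _ in feature_map}
    kept = present & target_features
    if not kept:
        return {}  # nothing in the image matches the target's features
    # Features outside the target drop to zero; the survivors are scaled up by
    # the same factor, so total interest (one unit per present feature) is preserved.
    boost = len(present) / len(kept)
    occurrences = Counter(feature for feature, _ in feature_map if feature in kept)
    interest: Dict[Location, float] = defaultdict(float)
    for feature, loc in feature_map:
        if feature in kept:
            interest[loc] += boost / occurrences[feature]
    return dict(interest)


if __name__ == "__main__":
    street = [
        ("wheel", (2, 3)), ("wheel", (2, 5)), ("window", (1, 4)),
        ("leg", (3, 0)), ("head", (2, 0)),
    ]
    car_interest = feature_based_attention(street, {"wheel", "window", "door"})
    # Only these regions need to be searched for cars, most interesting first.
    regions = sorted(car_interest, key=lambda loc: -car_interest[loc])
    print(regions)  # [(1, 4), (2, 3), (2, 5)]
```

Three of the five feature locations survive, so a follow-up search for cars skips the leg and head locations entirely instead of rescanning the whole image.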

Source: “What and where: A Bayesian inference theory of attention.” Sharat S. Chikkerur, Thomas Serre, Cheston Tan, Tomaso Poggio. Vision Research. Week of 7 June, 2010.

Funding: DARPA, the Honda Research Institute USA, NEC, Sony and the Eugene McDermott Foundation

More Information: http://www.mit.edu

News and developments from the field of interdisciplinary research.

Among other topics, you can find stimulating reports and articles related to microsystems, emotions research, futures research and stratospheric research.

Newest articles

High-energy-density aqueous battery based on halogen multi-electron transfer

Traditional non-aqueous lithium-ion batteries have a high energy density, but their safety is compromised due to the flammable organic electrolytes they utilize. Aqueous batteries use water as the solvent for…

First-ever combined heart pump and pig kidney transplant

…gives new hope to patient with terminal illness. Surgeons at NYU Langone Health performed the first-ever combined mechanical heart pump and gene-edited pig kidney transplant surgery in a 54-year-old woman…

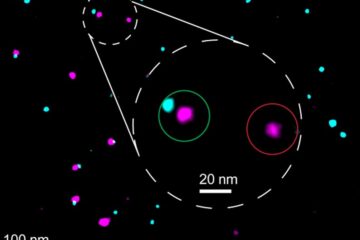

Biophysics: Testing how well biomarkers work

LMU researchers have developed a method to determine how reliably target proteins can be labeled using super-resolution fluorescence microscopy. Modern microscopy techniques make it possible to examine the inner workings…