Anticipation and Navigation: Do Your Legs Know What Your Tongue Is Doing?

To survive, animals must explore their world to find the necessities of life. It's a complex task, requiring them to form a mental map of their environment to navigate the safest and fastest routes to food and water. They also learn to anticipate when and where certain important events, such as finding a meal, will occur.

Understanding the connection between these two fundamental behaviors, navigation and the anticipation of a reward, had long eluded scientists because it was not possible to simultaneously study both while an animal was moving.

To overcome this difficulty, and to understand how the brain processes the environmental cues available to it and whether various regions of the brain cooperate in this task, scientists at UCLA created a multisensory virtual-reality environment through which rats could navigate on a trackball to find a reward. This virtual world, which included both visual and auditory cues, gave the rats the illusion of actually moving through space and also allowed the scientists to manipulate the cues.

The results of their study, published in the current edition of the journal PLOS ONE, revealed something “fascinating,” said UCLA neurophysicist Mayank Mehta, the senior author of the research.

The scientists found that the rats, despite being nocturnal, preferred to navigate to a food reward using only visual cues — they ignored auditory cues. Further, with the visual cues, their legs worked in perfect harmony with their anticipation of food; they learned to efficiently navigate to the spot in the virtual environment where the reward would be offered, and as they approached and entered that area, their licking behavior — a sign of reward anticipation — increased significantly.

But take away the visual cues and give them only sounds to navigate, and the rats' legs became “lost”; they showed no sign they could navigate directly to the reward and instead used a broader, more random circling strategy to eventually locate the food. Yet interestingly, as they neared the reward location, their tongues began to lick preferentially.

Thus, in the presence of only auditory cues, the tongue seemed to know where to expect the reward, but the legs did not. This finding, teased out for the first time, suggests that different areas of the brain can work together or be at odds.

“This is a fundamental and fascinating new insight about two of the most basic behaviors: walking and eating,” Mehta said. “The results could pave the way toward understanding the human brain mechanisms of learning, memory and reward consumption and treating such debilitating disorders as Alzheimer's disease or ADHD that diminish these abilities.”

Mehta, a professor of neurophysics with joint appointments in the departments of neurology, physics and astronomy, is fascinated with how our brains make maps of space and how we navigate in that space. In a recent study, he and his colleagues discovered how individual brain cells compute how much distance the subjects traveled.

This time, they wanted to understand how the brain processes the various environmental cues available to it. At a fundamental level, Mehta said, all animals, including humans, must know where they are in the world and how to find food and water in that environment. Which way is up, which way down, what is the safest or fastest path to their destination?

“Look at any animal's behavior,” he said, “and at a fundamental level, they learn to both anticipate and seek out certain rewards like food and water. But until now, these two worlds — of reward anticipation and navigation — have remained separate because scientists couldn't measure both at the same time when subjects are walking.”

Navigation requires the animal to form a spatial map of its environment so it can walk from point to point. Anticipating a reward requires the animal to learn how to predict when it is going to get a reward and how to consume it; think of Pavlov's famous experiments, in which his dogs learned to salivate in anticipation of getting a food reward. Research into these two forms of learning has so far been entirely separate because the technology to study them simultaneously did not exist.

So Mehta and his colleagues, including co–first authors Jesse Cushman and Daniel Aharoni, developed a virtual-reality apparatus that allowed them to construct both visual and auditory virtual environments. As video of the environment was projected around them, the rats, held by a harness, were placed on a ball that rotated as they moved. The researchers then trained the rats on a very difficult task that required them to navigate to a specific location to get sugar water — a treat for rats — through a reward tube.

The visual images and sounds in the environment could each be turned on or off, and the researchers could measure the rats' anticipation of the reward by their preemptive licking in the area of the reward tube. In this way, the scientists were able for the first time to measure rodents' navigation in a nearly real-world space while also gauging their reward anticipation.

“Navigation and reward consuming are things all animals do all the time, even humans. Think about navigating to lunch,” Mehta said. “These two behaviors were always thought to be governed by two entirely different brain circuits, but this has never been tested before. That's because the simultaneous measurement of reward anticipation and navigation is really difficult to do in the real world but made possible in a virtual world.”

When the rat was in a “normal” virtual world, with both sound and sight, legs and tongue worked in harmony: the legs headed for the food reward while the tongue licked where the reward was supposed to be. This confirmed a long-held expectation that different behaviors are synchronized.

But the biggest surprise, said Mehta, was that when they measured a rat's licking pattern in just an auditory world — that is, one with no visual cues — the rodent's tongue showed a clear map of space, as if the tongue knew where the food was.

“They demonstrated this by licking more in the vicinity of the reward. But their legs showed no sign of where the reward was, as the rats kept walking randomly without stopping near the reward,” he said. “So for the first time, we showed how multisensory stimuli, such as lights and sounds, influence multimodal behavior, such as generating a mental map of space to navigate, and reward anticipation, in different ways. These are some of the most basic behaviors all animals engage in, but they had never been measured together.”

Previously, Mehta said, it was thought that all stimuli would influence all behaviors more or less similarly.

“But to our great surprise, the legs sometimes do not seem to know what the tongue is doing,” he said. “We see this as a fundamental and fascinating new insight about basic behaviors, walking and eating, and lends further insight toward understanding the brain mechanisms of learning and memory, and reward consumption.”

Other authors on the study included Bernard Willers, Pascal Ravassard, Ashley Kees, Cliff Vuong, Briana Popeney and Katsushi Arisaka, all of UCLA. Funding for the research was provided by a National Science Foundation Career award and by grants from the National Institutes of Health (5R01MH092925-02) and the W. M. Keck Foundation to Mayank Mehta.

A video of the rat moving in the virtual world is available by request.

The UCLA Department of Neurology encompasses more than 26 disease-related research programs. This includes all of the major categories of neurological diseases and methods, encompassing neurogenetics and neuroimaging, as well as health services research. The 140 faculty members of the department are distinguished scientists and clinicians who have been ranked No. 1 in National Institutes of Health funding since 2002. The department is dedicated to understanding the human nervous system and improving the lives of people with neurological diseases, focusing on three key areas: patient/clinical care, research and education.

More Information:

http://www.ucla.eduAll latest news from the category: Interdisciplinary Research

News and developments from the field of interdisciplinary research.

Among other topics, you can find stimulating reports and articles related to microsystems, emotions research, futures research and stratospheric research.

Newest articles

A universal framework for spatial biology

SpatialData is a freely accessible tool to unify and integrate data from different omics technologies accounting for spatial information, which can provide holistic insights into health and disease. Biological processes…

How complex biological processes arise

A $20 million grant from the U.S. National Science Foundation (NSF) will support the establishment and operation of the National Synthesis Center for Emergence in the Molecular and Cellular Sciences (NCEMS) at…

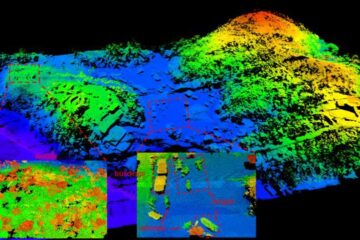

Airborne single-photon lidar system achieves high-resolution 3D imaging

Compact, low-power system opens doors for photon-efficient drone and satellite-based environmental monitoring and mapping. Researchers have developed a compact and lightweight single-photon airborne lidar system that can acquire high-resolution 3D…