Machine learning enhances light-beam performance at the Advanced Light Source

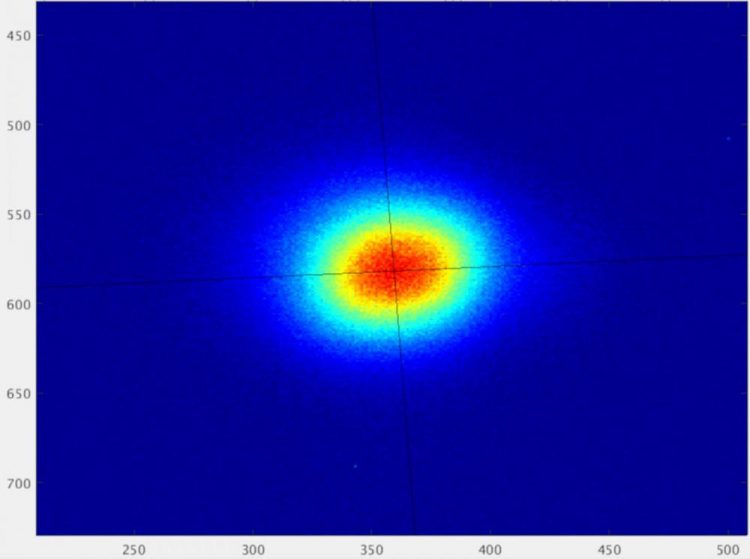

This image shows the profile of an electron beam at Berkeley Lab's Advanced Light Source synchrotron, represented as pixels measured by a charge-coupled device (CCD) sensor. When stabilized by a machine-learning algorithm, the beam has a horizontal size of 49 microns and a vertical size of 48 microns, both root mean square (RMS). Demanding experiments require that the corresponding light-beam size be stable on time scales ranging from fractions of a second to hours to ensure reliable data. Credit: Lawrence Berkeley National Laboratory
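An RMS beam size like the ones in the caption is the square root of the intensity-weighted variance of the spot about its centroid. The sketch below shows one way to extract it from a CCD frame, assuming a background-subtracted image stored as a list of rows and a hypothetical `pixel_um` calibration factor; the ALS's actual diagnostic processing is not described in the article and may differ (for example, by fitting a Gaussian instead of taking moments).

```python
import math


def rms_sizes(image, pixel_um):
    """Return (sigma_x, sigma_y) in microns for a 2D intensity image.

    image: list of rows (index = y), each a list of pixel intensities (index = x),
           assumed background-subtracted and non-negative.
    pixel_um: hypothetical calibration factor, microns per pixel.
    """
    total = sum(sum(row) for row in image)
    if total <= 0:
        raise ValueError("image contains no signal")
    # First moments: intensity-weighted centroid, in pixel units.
    mx = sum(x * v for row in image for x, v in enumerate(row)) / total
    my = sum(y * v for y, row in enumerate(image) for v in row) / total
    # Second central moments: intensity-weighted variance about the centroid.
    vx = sum((x - mx) ** 2 * v for row in image for x, v in enumerate(row)) / total
    vy = sum((y - my) ** 2 * v for y, row in enumerate(image) for v in row) / total
    # RMS size is the square root of the variance, converted to microns.
    return math.sqrt(vx) * pixel_um, math.sqrt(vy) * pixel_um


def gaussian_image(n, sigma_x, sigma_y):
    """Synthetic n-by-n test frame: a centered Gaussian spot (sigmas in pixels)."""
    c = n // 2
    return [[math.exp(-((x - c) ** 2) / (2 * sigma_x ** 2)
                      - ((y - c) ** 2) / (2 * sigma_y ** 2))
             for x in range(n)]
            for y in range(n)]


# Usage: a 5 x 4 pixel Gaussian spot at a made-up 10 microns per pixel.
sx, sy = rms_sizes(gaussian_image(81, 5.0, 4.0), pixel_um=10.0)
print(f"sigma_x = {sx:.1f} um, sigma_y = {sy:.1f} um")
```

Moments are used here because they match the definition of an RMS size directly and need no fitting library; real frames with noise or a background pedestal would need that background removed first.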

Synchrotron light sources are powerful facilities that produce light in a variety of “colors,” or wavelengths – from the infrared to X-rays – by accelerating electrons to emit light in controlled beams.

Synchrotrons like the Advanced Light Source (ALS) at the Department of Energy's Lawrence Berkeley National Laboratory (Berkeley Lab) let scientists use this light to explore samples in a variety of ways, in fields ranging from materials science, biology, and chemistry to physics and environmental science.

Researchers have found ways to upgrade these machines to produce more intense, focused, and consistent light beams that enable new, more complex, and more detailed studies across a broad range of sample types.

But some light-beam properties still exhibit fluctuations in performance that present challenges for certain experiments.

Addressing a decades-old problem

Many of these synchrotron facilities deliver different types of light to dozens of simultaneous experiments, and small tweaks to enhance light-beam properties at individual beamlines can feed back into the light-beam performance of the entire facility. Synchrotron designers and operators have wrestled for decades with a variety of approaches to compensate for the most stubborn of these fluctuations.

Now a large team of researchers at Berkeley Lab and UC Berkeley has demonstrated how machine-learning tools can stabilize the size of the light beams used in experiments, through adjustments that largely cancel out these fluctuations – reducing them from a few percent to 0.4 percent, with submicron (less than one millionth of a meter) precision.
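The "0.4 percent" figure is a relative fluctuation. As a minimal sketch, assuming it means the RMS spread of a beam-size time series divided by its mean (the article does not define the metric), the calculation looks like this. Taking the 48-micron vertical size from the image caption purely as an illustrative value, 0.4 percent works out to roughly 0.19 microns, consistent with the submicron precision quoted. The sample values are invented.

```python
import statistics


def relative_fluctuation_percent(sizes_um):
    """RMS fluctuation of a beam-size time series, as a percent of its mean."""
    if len(sizes_um) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(sizes_um)
    return 100.0 * statistics.pstdev(sizes_um) / mean


# Usage with made-up samples: a 48-micron beam wobbling by +/-0.192 microns.
samples = [48.0 - 0.192, 48.0 + 0.192] * 50
pct = relative_fluctuation_percent(samples)
absolute_um = pct / 100.0 * statistics.mean(samples)
print(f"{pct:.2f} % of the mean, i.e. about {absolute_um:.2f} microns RMS")
```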

The tools are detailed in a study published Nov. 6 in the journal Physical Review Letters.

Machine learning is a form of artificial intelligence in which computer systems analyze a set of data to build predictive programs that solve complex problems. The machine-learning algorithms used at the ALS are referred to as a form of “neural network” because they are designed to recognize patterns in the data in a way that loosely resembles human brain functions.

In this study, researchers fed electron-beam data from the ALS, including the positions of the magnetic devices used to produce light from the electron beam, into the neural network. The network recognized patterns in these data and identified how different device parameters affected the vertical size of the electron beam. The machine-learning algorithm also recommended adjustments to the magnets to optimize the electron beam.
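The article does not describe the network's architecture or training. As a generic illustration only, the sketch below trains a one-hidden-layer feedforward network by gradient descent (backpropagation) to map device settings to a beam-size value. Everything in it is hypothetical: `make_data` invents a target function of three normalized inputs (the real problem involves roughly 35 parameters), and `TinyMLP`, its layer size, and the learning rate are arbitrary choices.

```python
import math
import random


def make_data(n, n_inputs, seed=0):
    """Synthetic stand-in data: normalized device settings -> beam-size deviation.

    The target function is invented purely for illustration."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
        y = 0.5 * math.sin(2.0 * x[0]) + 0.3 * x[1] * x[2]
        data.append((x, y))
    return data


class TinyMLP:
    """One tanh hidden layer and a linear output, trained sample by sample (SGD)."""

    def __init__(self, n_inputs, n_hidden, seed=1):
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.gauss(0.0, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x):
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def train_step(self, x, y, lr):
        """One gradient-descent step on the squared error 0.5 * (prediction - y)**2."""
        h = self._hidden(x)
        err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - y
        for j, hj in enumerate(h):
            # Backpropagate through tanh before updating the output weight it uses.
            grad_pre = err * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * err * hj
            self.b1[j] -= lr * grad_pre
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * grad_pre * xi
        self.b2 -= lr * err


def mse(model, data):
    """Mean squared prediction error over a dataset."""
    return sum((model.predict(x) - y) ** 2 for x, y in data) / len(data)


# Usage: fit on one synthetic set, then check on a held-out one.
train, held_out = make_data(200, 3, seed=0), make_data(100, 3, seed=42)
model = TinyMLP(n_inputs=3, n_hidden=8)
before = mse(model, held_out)
for epoch in range(100):
    for x, y in train:
        model.train_step(x, y, lr=0.05)
after = mse(model, held_out)
print(f"held-out MSE: {before:.4f} -> {after:.4f}")
```

Checking the error on data the network never trained on is the simplest test that it has learned a pattern rather than memorized samples.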

Because the size of the electron beam sets the size of the resulting light beam produced by the magnets, the algorithm also optimized the light beam used to study material properties at the ALS.

Solution could have global impact

The successful demonstration at the ALS shows that the technique could be applied more generally to other light sources, and it will be especially beneficial for specialized studies enabled by an upgrade of the ALS known as the ALS-U project.

“That's the beauty of this,” said Hiroshi Nishimura, a Berkeley Lab affiliate who retired last year and had engaged in early discussions and explorations of a machine-learning solution to the longstanding light-beam size-stability problem. “Whatever the accelerator is, and whatever the conventional solution is, this solution can be on top of that.”

Steve Kevan, ALS director, said, “This is a very important advance for the ALS and ALS-U. For several years we've had trouble with artifacts in the images from our X-ray microscopes. This study presents a new feed-forward approach based on machine learning, and it has largely solved the problem.”

The ALS-U project will narrow the focus of light beams from around 100 microns to below 10 microns, and will also create a higher demand for consistent, reliable light-beam properties.

The machine-learning technique builds upon conventional solutions that have been improved over the decades since the ALS started up in 1993, and which rely on constant adjustments to magnets along the ALS ring that compensate in real time for adjustments at individual beamlines.

Nishimura, who had been a part of the team that brought the ALS online more than 25 years ago, said he began to study the potential application of machine-learning tools for accelerator applications about four or five years ago. His conversations extended to experts in computing and accelerators at Berkeley Lab and at UC Berkeley, and the concept began to gel about two years ago.

Successful testing during ALS operations

Researchers successfully tested the algorithm at two different sites around the ALS ring earlier this year. They alerted ALS users conducting experiments about the testing of the new algorithm, and asked them to give feedback on any unexpected performance issues.

“We had consistent tests in user operations from April to June this year,” said C. Nathan Melton, a postdoctoral fellow at the ALS who joined the machine-learning team in 2018 and worked closely with Shuai Liu, a former UC Berkeley graduate student who contributed considerably to the effort and is a co-author of the study.

Simon Leemann, deputy for Accelerator Operations and Development at the ALS and the principal investigator in the machine-learning effort, said, “We didn't have any negative feedback to the testing. One of the monitoring beamlines the team used is a diagnostic beamline that constantly measures accelerator performance, and another was a beamline where experiments were actively running.” Alex Hexemer, a senior scientist at the ALS and program lead for computing, served as the co-lead in developing the new tool.

The beamline with the active experiments, Beamline 5.3.2.2, uses a technique known as scanning transmission X-ray microscopy, or STXM, and scientists there reported improved light-beam performance in their experiments.

The machine-learning team noted that the enhanced light-beam performance is also well-suited for advanced X-ray techniques such as ptychography, which can resolve the structure of samples down to the level of nanometers (billionths of a meter); and X-ray photon correlation spectroscopy, or XPCS, which is useful for studying rapid changes in highly concentrated materials that don't have a uniform structure.

Other experiments that demand a reliable, highly focused light beam of constant intensity where it interacts with the sample can also benefit from the machine-learning enhancement, Leemann noted.

“Experiments' requirements are getting tougher, with smaller-area scans on samples,” he said. “We have to find new ways for correcting these imperfections.”

He noted that the core problem that the light-source community has wrestled with – and that the machine-learning tools address – is the fluctuating vertical electron beam size at the source point of the beamline.

The source point is the point where the electron beam at the light source emits the light that travels to a specific beamline's experiment. While the electron beam's width at this point is naturally stable, its height (or vertical source size) can fluctuate.

Opening the 'black box' of artificial intelligence

“This is a very nice example of team science,” Leemann said, noting that the effort overcame some initial skepticism about the viability of machine learning for enhancing accelerator performance, and opened up the “black box” of how such tools can produce real benefits.

“This is not a tool that has traditionally been a part of the accelerator community. We managed to bring people from two different communities together to fix a really tough problem.” About 15 Berkeley Lab researchers participated in the effort.

“Machine learning fundamentally requires two things: The problem needs to be reproducible, and you need huge amounts of data,” Leemann said. “We realized we could put all of our data to use and have an algorithm recognize patterns.”

The data showed the little blips in electron-beam performance as adjustments were made at individual beamlines, and the algorithm found a way to tune the electron beam so that it negated this impact better than conventional methods could.

“The problem consists of roughly 35 parameters – way too complex for us to figure out ourselves,” Leemann said. “What the neural network did once it was trained – it gave us a prediction for what would happen for the source size in the machine if it did nothing at all to correct it.

“There is an additional parameter in this model that describes how the changes we make in a certain type of magnet affects that source size. So all we then have to do is choose the parameter that – according to this neural-network prediction – results in the beam size we want to create and apply that to the machine,” Leemann added.
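Leemann's description amounts to inverting a trained model over one control parameter: predict the source size for the current device settings, then pick the corrector value whose predicted size matches the target. The sketch below assumes a single scalar corrector and a prediction that increases monotonically with it. `predicted_size` is a made-up linear stand-in for the trained network, and `choose_correction` uses plain bisection, a generic root-finding choice that is not necessarily the method used at the ALS.

```python
def predicted_size(settings, c):
    """Hypothetical stand-in for a trained model: predicted source size (microns)
    given device settings and a scalar corrector setting c. Coefficients are invented."""
    uncorrected = 48.0 + 0.5 * sum(settings)  # made-up disturbance from device settings
    return uncorrected + 2.0 * c              # made-up response to the corrector


def choose_correction(model, settings, target, lo=-5.0, hi=5.0, tol=1e-6):
    """Find c in [lo, hi] with model(settings, c) ~= target by bisection.

    Assumes the predicted size increases monotonically with c over the interval."""
    if model(settings, lo) > target or model(settings, hi) < target:
        raise ValueError("target size is not reachable within the corrector limits")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if model(settings, mid) < target:
            lo = mid  # predicted beam still too small: move toward larger c
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Usage: settings that would push the source to 48.3 microns if left uncorrected.
settings = [0.4, -0.1, 0.3]
c = choose_correction(predicted_size, settings, target=48.0)
print(f"corrector setting {c:.4f} -> predicted size {predicted_size(settings, c):.4f} um")
```

Because this stand-in model is linear, `c` could be solved in closed form; bisection is shown because it only needs the prediction to be monotonic in `c`, which would also hold for some nonlinear models.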

The algorithm-directed system can now make corrections at a rate of up to 10 times per second, though three times a second appears to be adequate for improving performance at this stage, Leemann said.

The search for new machine-learning applications

The machine-learning team received two years of funding from the U.S. Department of Energy in August 2018 to pursue this and other machine-learning projects in collaboration with the Stanford Synchrotron Radiation Lightsource at SLAC National Accelerator Laboratory. “We have plans to keep developing this and we also have a couple of new machine-learning ideas we'd like to try out,” Leemann said.

Nishimura said that the buzzwords “artificial intelligence” seem to have trended in and out of the research community for many years, though, “This time it finally seems to be something real.”

###

The Advanced Light Source and Stanford Synchrotron Radiation Lightsource are DOE Office of Science User Facilities. This work involved researchers in Berkeley Lab's Computational Research Division and was supported by the Department of Energy's Basic Energy Sciences and Advanced Scientific Computing Research programs.

Founded in 1931 on the belief that the biggest scientific challenges are best addressed by teams, Lawrence Berkeley National Laboratory and its scientists have been recognized with 13 Nobel Prizes. Today, Berkeley Lab researchers develop sustainable energy and environmental solutions, create useful new materials, advance the frontiers of computing, and probe the mysteries of life, matter, and the universe. Scientists from around the world rely on the Lab's facilities for their own discovery science. Berkeley Lab is a multiprogram national laboratory, managed by the University of California for the U.S. Department of Energy's Office of Science.

DOE's Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit energy.gov/science.
