Putting Japanese Technology at the Top of the World With Parallelization of Next Generation Multicore Processors

Belief in the importance of software

Just over 25 years ago, when I was still in graduate school, I became involved in research on the simultaneous design of computer hardware and software. At the time, computer development was centered on hardware, and little importance was placed on software. The dominant view in Japan was that if the hardware was produced, software would naturally follow. I thought this was the wrong way of thinking.

There is a limit to what software developed after the hardware can do; to maximize system performance, the software must be developed at the same time. I was convinced that, as semiconductors became smaller and more highly integrated, it would be necessary to examine the computational performance of hardware and software together. That is why I devised the unique parallel computer architecture OSCAR (Optimally Scheduled Advanced Multiprocessor) and began researching multiprocessor technology, in which multiple CPUs operate together, along with software parallelization.

The basis of my research is a software technology called a compiler. A parallelization compiler breaks down a job assigned to a computer, automatically divides up the work, and plans which parts of the job can run concurrently and which parts must wait for another part to finish.

In 1986, with the support of Professor Seinosuke Narita, his professor from his university days, Kasahara developed the first OSCAR multiprocessor computer in joint research with Fuji Electric and Fuji Facom Corporation. He conducted research and development on parallel processing for iron manufacturing roll process management and simulation, and for robot control, using the new multiprocessor architecture. For its time, it was an unprecedented large-scale, leading-edge joint project between industry and academia.

For example, a job divided among five people will not necessarily be completed in a fifth of the time it takes one person. Unless the task is extremely simple, efficiency depends greatly on how the work is divided, and more so as tasks become more complicated. When proceeding from step one to step two, if one person lags behind, everyone else must wait and cannot progress to the next stage, which is inefficient. The same goes for the computer world. How can we put five computers to full use, and how do we distribute the jobs among them efficiently? That is the role of the parallelization compiler, and it will be very important for the computers of the future.
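The five-person example can be made concrete with a small scheduling sketch. The code below is only an illustration under stated assumptions: the task names, durations, and dependencies are hypothetical, and the greedy list-scheduling heuristic is a textbook technique, not the scheduling algorithm used in the OSCAR compiler.

```python
def list_schedule(durations, deps, n_workers):
    """Greedy list scheduling of a dependency graph onto identical workers.

    durations: {task: time}, deps: {task: set of prerequisite tasks}.
    Returns (makespan, {task: finish_time}). Assumes the graph is acyclic.
    """
    finish = {}                      # finish time of each scheduled task
    worker_free = [0] * n_workers    # time at which each worker becomes idle
    remaining = set(durations)

    def ready_time(task):
        # A task may start only after all of its prerequisites have finished.
        return max((finish[d] for d in deps.get(task, ())), default=0)

    while remaining:
        ready = [t for t in remaining
                 if all(d in finish for d in deps.get(t, ()))]
        # Prefer the task that can start earliest; break ties by longest first.
        task = min(ready, key=lambda t: (ready_time(t), -durations[t], t))
        worker = min(range(n_workers), key=worker_free.__getitem__)
        start = max(worker_free[worker], ready_time(task))
        finish[task] = start + durations[task]
        worker_free[worker] = finish[task]
        remaining.remove(task)
    return max(finish.values()), finish


# Hypothetical job: five tasks, 12 time units of work in total.
durations = {"A": 2, "B": 3, "C": 4, "D": 1, "E": 2}
deps = {"D": {"A", "B"}, "E": {"C", "D"}}

one, _ = list_schedule(durations, deps, n_workers=1)
five, _ = list_schedule(durations, deps, n_workers=5)
print(one, five, one / five)
```

In this made-up job, one worker needs 12 time units and five workers need 6, a speedup of 2 rather than 5, because tasks D and E must wait for their prerequisites to finish.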

Until now, compiler research has progressed mainly in specialized fields such as scientific and technical computing on supercomputers. Only very recently have multicore processors begun to appear widely in the devices people use in everyday life and work, such as personal computers, information gadgets, mobile phones, car navigation systems, and game consoles. As a result, automatic parallelization in compiler research has come under the spotlight. Only now are people in the personal computer and information appliance sectors beginning to say out loud, “Parallelization is a key technology!” and “Software is a must!” I, however, have been steadily conducting this research since the days when everybody thought otherwise.

In 1987, the year after I became a full-time lecturer at Waseda University, I was awarded the Young Author Prize for my research on parallel processing of robot control and simulation at the triennial world congress of the International Federation of Automatic Control (IFAC), held in Munich, a conference with thousands of participants. In preliminary screening, my regular paper was selected as one of thirty finalists to be presented for final judging. It was a genuine competition in which the sole winner was decided by total marks for research content, presentation, and response and attitude to questioning. That year saw the inception of the Young Author Prize. Winning the inaugural competition, and even just having my paper selected and judged at an international level at such a prestigious conference, gave me extra confidence and motivation as a researcher.

Showing overwhelming performance ratio to the world

The OSCAR compiler has succeeded in delivering two to three times the performance of compilers from private-sector giants such as Intel and IBM, giving it the highest performance in the world.

In the 2000s, national projects for parallelization compilers and for multicore processors for information appliances reached a peak. As part of the Ministry of Economy, Trade and Industry (METI), NEDO (New Energy and Industrial Technology Development Organization), and the Cabinet Office’s Millennium Project, I have conducted Advanced Parallelizing Compiler research and development with Hitachi and Fujitsu since 2000 and basic research on heterogeneous multiprocessors with Hitachi since 2004. Since 2005, I have developed 4-core (RP1) and 8-core (RP2) integrated multicore processor chips together with Hitachi and Renesas Technology.

In 2009, with Hitachi and Renesas Technology, we developed a heterogeneous multicore processor, an advanced chip that integrates different types of processors, and presented our findings at ISSCC, the international conference known as the “semiconductor Olympics,” on February 8, 2010. In addition, from 2005, through a NEDO project on multicore processors for real-time information appliances, we worked with six companies (Hitachi, Fujitsu, Renesas Technology, Toshiba, NEC and Panasonic) to develop the OSCAR API, standard software that makes the OSCAR parallelization compiler compatible with each company’s multicore processors, and published it on our laboratory’s homepage.

We are now in an age in which 100 million to 1 billion transistors can be integrated on a single chip. Anybody can integrate multiple processors into their hardware as computing units. The problem is how to organize them so that such a highly integrated chip delivers its full performance. We have become world leaders in processor and software technology, so if we can create a market strategy together with other companies and expand the added value of big business into the world of information appliances, I believe this could become a source of international competitiveness for industry.

The task at hand is to rapidly transfer our laboratory’s advanced fundamental compiler technology into competitive industrial technology at these companies. This project has three specific goals: (1) to be several times more cost-efficient than our overseas competitors; (2) to keep hardware and software design simple enough to keep pace with the fast product lifecycle of information appliances; and (3) to reduce energy consumption thoroughly so that the technology can be built into smaller products.

In terms of cost-efficiency, OSCAR compilers have achieved speeds two to three times higher than the compilers used by Intel and IBM. These remarkable figures, recognized by ourselves and by others, put Japan at the top of the world in multicore compiler technology.

For design simplification, we decided on a standard specification called OSCAR API (Application Programming Interface), and produced a standard software program which automatically converts OSCAR compiler parallelization results into each company’s machine language. In the past, it would not be surprising for the parallelization of a program to take several months, but with this new software, parallelization using OSCAR compilers is completed in a few minutes, dramatically reducing the time required to develop new software.

With regard to the third aim, energy consumption reduction, current desktop computers and servers consume 70 to 200 watts of power; if a mobile phone consumed that much, you would burn your ear. In the past, processor chips could be cooled with air cooling fans, but recently water cooling systems have become necessary. This is why I set the aim of developing, and have been developing, technology that requires less than 3 watts of power, so that the chip can cool naturally without a built-in cooling system.

Comparative experiment on power reduction control. When images are displayed using the chip, only 1.5 watts is consumed with the software’s power reduction control in place, compared with 5.7 watts without it, a substantial difference in power consumption.

Numerous creative and unique technologies

When it comes to the computational performance and energy consumption of our computers, we produce world-leading numbers and possess numerous creative technologies found nowhere else.

The first is a technology called “memory optimization.” Even if a processor computes quickly, exchanging data with the memory that stores it is slow. High-speed memory is expensive, and large amounts of data cannot be stored in the limited space on a chip. We have therefore developed a practical technology that places frequently used data in a small high-speed memory located near the processor and reuses it repeatedly. With this, we have realized the world’s only technology that combines a fourfold speedup from parallelization with a fourfold speedup from memory optimization.
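The benefit of reusing data while it sits in a small fast memory can be seen in a toy model. The sketch below is an assumption-laden illustration, not the laboratory's technique: the fast memory is modeled as a least-recently-used store of `CAPACITY` items, the sizes are invented, and it is assumed that the repeated passes over the data may legally be reordered block by block.

```python
from collections import OrderedDict


def slow_memory_loads(accesses, capacity):
    """Count transfers from slow memory for a sequence of data accesses,
    given a small fast memory with least-recently-used replacement."""
    fast = OrderedDict()
    loads = 0
    for addr in accesses:
        if addr in fast:
            fast.move_to_end(addr)        # reuse: data is already nearby
        else:
            loads += 1                    # must fetch from slow memory
            fast[addr] = True
            if len(fast) > capacity:
                fast.popitem(last=False)  # evict the least recently used item
    return loads


N, CAPACITY, PASSES = 64, 8, 4  # hypothetical sizes

# Naive order: sweep the whole data set once per pass.
naive = [i for _ in range(PASSES) for i in range(N)]

# Blocked order: finish all passes on one block before moving to the next.
blocked = [i
           for base in range(0, N, CAPACITY)
           for _ in range(PASSES)
           for i in range(base, base + CAPACITY)]

naive_loads = slow_memory_loads(naive, CAPACITY)
blocked_loads = slow_memory_loads(blocked, CAPACITY)
print(naive_loads, blocked_loads)
```

Both orders perform the same 256 accesses, but in this model the naive order fetches from slow memory every time, while the blocked order fetches each item only once (64 loads), because each block is reused while it is still in the fast memory.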

The new innovative research base, “Green Computing System Research and Development Center,” planned for opening in 2011. (envisaged aerial view).

We have also developed the world’s only “multigrain parallelization” technology for compilers. Standard parallelization works on units called loops, in which the same processing is repeated over and over. This is called loop parallelization, and it is the method used by current personal computers and supercomputers, but we have reached the point where it can no longer increase performance. To solve this, we have made higher speeds possible with a technique called coarse-grain task parallelization, in which larger units of work, rather than loops, are run in parallel.
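The difference between the two granularities can be sketched in a few lines. This is a hand-written structural illustration only: the functions `total`, `peak`, and `report` are hypothetical, the parallelism is expressed manually rather than discovered by a compiler, and in standard CPython threads generally do not speed up CPU-bound work, so the example shows the shape of each approach rather than a performance gain.

```python
from concurrent.futures import ThreadPoolExecutor


def total(values):
    return sum(values)


def peak(values):
    return max(values)


def report(t, p):
    return {"total": t, "peak": p, "ratio": p / t}


def run_loop_parallel(values):
    # Loop parallelization: iterations of one loop are spread over workers.
    with ThreadPoolExecutor(max_workers=2) as pool:
        return list(pool.map(lambda v: v * v, values))


def run_coarse_grain(values):
    # Coarse-grain task parallelization: whole independent blocks of the
    # program (here, two functions) run at the same time; `report` depends
    # on both and therefore waits for their results.
    with ThreadPoolExecutor(max_workers=2) as pool:
        f_total = pool.submit(total, values)
        f_peak = pool.submit(peak, values)
        return report(f_total.result(), f_peak.result())


print(run_loop_parallel([1, 2, 3]))
print(run_coarse_grain([1, 2, 3, 4]))
```

A multigrain approach, as the name suggests, would combine both levels, using coarse-grain tasks between large program blocks and loop-level parallelism inside them.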

And finally, we have realized the ultra-low energy consumption mentioned earlier with the world’s only energy management technology in which software and hardware cooperate. First, once a processor’s power is turned on, it leaks current and therefore consumes energy even when it is idle, regardless of what jobs have been assigned. Using our technology, a processor can automatically switch off its own power. Second, if a processor’s operating speed (operating frequency) is lowered together with its supply voltage, power consumption falls roughly with the third power of the frequency. We have developed technology that lets processors finish their work just in time and stop running at unnecessarily high speeds. Through this fine-grained speed control, we have achieved both “high-speed processing” and “energy consumption reduction” at once.
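The "third power" relationship follows from the standard first-order model of dynamic power in CMOS circuits. The derivation below is a sketch under that textbook model, not necessarily the model used in the laboratory; the symbols \alpha (switching activity), C (switched capacitance), V (supply voltage), f (operating frequency) and t (execution time) are introduced here for illustration, and the supply voltage is assumed to scale in proportion to the frequency.

```latex
\begin{align*}
P_{\mathrm{dyn}} &\approx \alpha\, C\, V^{2} f,
  \qquad V \propto f \;\Rightarrow\; P_{\mathrm{dyn}} \propto f^{3} \\
f \to \tfrac{1}{2} f &: \quad
  P_{\mathrm{dyn}} \to \tfrac{1}{8}\, P_{\mathrm{dyn}},
  \qquad t \to 2t,
  \qquad E = P_{\mathrm{dyn}}\, t \to \tfrac{1}{4}\, E \\
\frac{5.7\,\mathrm{W} - 1.5\,\mathrm{W}}{5.7\,\mathrm{W}} &\approx 0.74
\end{align*}
```

Under these assumptions, halving the frequency cuts power to one eighth; because the same job then takes twice as long, the energy for that job falls to one quarter. The last line simply restates the measured figures quoted earlier (1.5 watts with power control against 5.7 watts without) as a reduction of roughly 74 percent.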

With IEEE Computer Society President, Dr. Susan K. Land (second from left) when she visited the laboratory. (March 2009)

In 2011, with backing from METI, this university plans to open a new innovative research base called the “Green Computing System Research and Development Center,” a joint industry, government and academic project for research and development on next-generation multicore processors, which could be called “many-core” processors. We will continue research to make many-core hardware and software easy to use and environmentally friendly enough to run on solar cells, and to integrate 64, 128 or more processor cores on a chip. Practical applications include medical image processing; simulations of global warming, typhoons and the environment; automobiles; robots; aircraft; and information appliance design.

In the ever progressing world of high integration and ultra-miniaturization which is beyond human imagination, I want to make computers which are “small, quiet, energy efficient and fast.” I am undertaking research with this basic and simple idea in mind.

Hironori Kasahara

Professor at Waseda University Faculty of Science and Engineering. Director of Advanced Multicore Processor Research Institute

Completed his doctorate at the Waseda University School of Science and Engineering in 1985. Doctor of Engineering. After becoming the Japan Society for the Promotion of Science’s first research fellow and a visiting researcher at the University of California, Berkeley, he became a full-time assistant professor in 1986, associate professor in 1988, and professor of the School of Science and Engineering in 1997, before rising to his current position. Visiting researcher at the University of Illinois Center for Supercomputing R&D in 1989-90. Member of the Board of Governors of the IEEE Computer Society in 2009. Among his many awards, he has received the IFAC World Congress Young Author Prize, the IPSJ Sakai Special Research Award, the Grand Prix runner-up prize at the 2008 LSI of the Year, and the Best Research Award at the Intel Asia Academic Forum.

Source: Research SEA

Media Contact

All latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

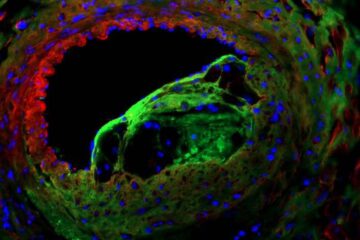

Solving the riddle of the sphingolipids in coronary artery disease

Weill Cornell Medicine investigators have uncovered a way to unleash in blood vessels the protective effects of a type of fat-related molecule known as a sphingolipid, suggesting a promising new…

Rocks with the oldest evidence yet of Earth’s magnetic field

The 3.7 billion-year-old rocks may extend the magnetic field’s age by 200 million years. Geologists at MIT and Oxford University have uncovered ancient rocks in Greenland that bear the oldest…

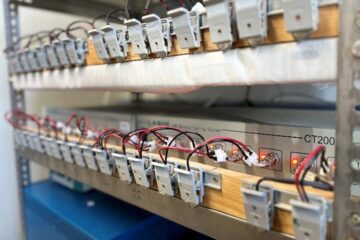

Decisive breakthrough for battery production

Storing and utilising energy with innovative sulphur-based cathodes. HU research team develops foundations for sustainable battery technology Electric vehicles and portable electronic devices such as laptops and mobile phones are…