Robotic minds think alike?

Led by Linköping University in Sweden, the researchers in the COSPAL project adopted an innovative approach to making robots recognise, identify and interact with objects, particularly in random, unforeseen situations.

Traditional robotics relies on having robots carry out complex calculations, such as measuring the geometry of an object and its expected trajectory if moved. COSPAL turned this around, making the robots perform tasks based on their own experiences and on observations of humans. This trial-and-error approach could lead to more autonomous robots and even improve our understanding of the human brain.

“Gösta Granlund, head of the Computer Vision Laboratory at Linköping University, came up with the concept that action precedes perception in learning. That may sound counterintuitive, but it is exactly how humans learn,” explains Michael Felsberg, coordinator of the EU-funded COSPAL.

Children, he notes, are “always testing and trying everything” and by performing random actions – poking this object or touching that one – they come to understand cause and effect and can apply that knowledge in the future. By experimenting, they quickly find out, for example, that a ball rolls and that a hole cannot be grasped. Children also learn from observing adults and copying their actions, gaining greater understanding of the world around them.
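To make the "act first, perceive afterwards" idea concrete, here is a minimal Python sketch under loudly stated assumptions. It is not COSPAL's software: the simulated two-link arm (`world`), the random "babbling" phase (`babble`) and the nearest-neighbour lookup (`reach`) are hypothetical stand-ins chosen for illustration. The learner is never given the arm's geometry; it only tries random actions, remembers what it perceived after each one, and later reuses the action whose remembered outcome best matches a goal.

```python
import math
import random

# Hypothetical "world": a planar two-link arm with unit-length links.
# The learner never inspects this function; it only sees its outputs.
def world(action):
    a1, a2 = action
    return (math.cos(a1) + math.cos(a1 + a2),
            math.sin(a1) + math.sin(a1 + a2))

def babble(n_trials, rng):
    """Try random actions (joint angles) and remember what was perceived."""
    memory = []
    for _ in range(n_trials):
        action = (rng.uniform(0.0, math.pi), rng.uniform(0.0, math.pi))
        memory.append((world(action), action))
    return memory

def reach(memory, target):
    """Reuse the remembered action whose observed outcome was closest to target."""
    _, best_action = min(memory, key=lambda item: math.dist(item[0], target))
    return best_action

rng = random.Random(0)
memory = babble(5000, rng)           # the "poking and touching" phase
target = world((1.0, 1.5))           # a point the arm is able to reach
action = reach(memory, target)
print(math.dist(world(action), target))  # remaining reaching error
```

Nearest-neighbour recall is the simplest possible memory: it only replays past experience. A system like the one described here would need to generalise between experiences, but the order of events is the same: actions first, then the percepts they cause, then a learned link from desired percept back to action.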

Learning like, and from, humans

Applied in the context of an artificial cognitive system (ACS), the approach helps to create robots that learn much as humans do and can learn from humans, allowing them to continue to perform tasks even when their environment changes or when objects they are not pre-programmed to recognise are placed in front of them.

“Most artificial intelligence-based ACS architectures are quite successful in recognising objects based on geometric calculations of visual inputs. Some people argue that humans also perform such calculations to identify something, but I don’t think so. I think humans are just very good at recognising the geometry of objects from experience,” Felsberg says.

The COSPAL team’s ACS would seem to bear that theory out. A robot with no pre-programmed geometric knowledge was able to recognise objects simply from experience, even when its surroundings and the position of the camera through which it obtained its visual information changed.

Getting the right peg in the right hole

The system was tested on a shape-sorting puzzle of the kind used to teach small children. Through trial and error and observation, the robot was able to place cubes in square holes and round pegs in round holes with an accuracy of 2 mm and 2 degrees. “It showed that, without knowing geometry, it can solve geometric problems,” Felsberg notes.

“In fact, I observed my 11-month-old son solving the same puzzle and the learning process you could see unfolding with both him and the robot was remarkably similar.”

Another test of the robot’s ability to learn from observation involved the use of a robotic arm that copied the movement of a human arm. With as few as 20 to 60 observations, the robotic arm was able to trace the movement of the human arm through a constrained space, avoiding obstacles on the way. In subsequent trials with the same robot, the learning period was greatly reduced, suggesting that the ACS was indeed drawing on memories of past observations.

In addition, by applying concepts akin to fuzzy logic, the team developed a new way for the robot to associate signals with the symbols that correspond to them, such as colour names. Instead of specifying three numbers for the red, green and blue components, as most digital image processing applications do, the team made the system learn colours from pairs of images and corresponding sets of reference colour names, such as red, dark red, blue and dark blue, in a representation known as channel coding. Much as the human brain identifies colours through sets of neurons that fire selectively, distinguishing green from black, for example, channel coding offers a biologically inspired way of representing information.
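The basic idea of channel coding can be sketched in a few lines of Python. This is an illustrative assumption, not the COSPAL colour-learning system: it encodes a single scalar (for instance a position along one colour axis) as the graded responses of overlapping cos² "channels" centred on integers, so that a value excites a few neighbouring channels rather than a single number. The kernel width, the function names `channel_encode`/`channel_decode` and the decoding rule are choices made for this sketch.

```python
import math

def channel_encode(x, n_channels):
    """Encode scalar x as responses of cos^2 channels centred at 0..n_channels-1.

    Each channel responds to values within 1.5 of its centre, so any x
    activates at most three neighbouring channels, like overlapping tuning curves.
    """
    if not 1.0 <= x <= n_channels - 2:
        raise ValueError("x must lie in [1, n_channels - 2] for exact decoding")
    coeffs = []
    for n in range(n_channels):
        d = x - n
        coeffs.append(math.cos(math.pi * d / 3) ** 2 if abs(d) < 1.5 else 0.0)
    return coeffs

def channel_decode(coeffs):
    """Recover x from the three channels around the strongest response."""
    m = max(range(len(coeffs)), key=coeffs.__getitem__)  # nearest channel
    l = min(max(m - 1, 0), len(coeffs) - 3)               # window l, l+1, l+2
    re = im = 0.0
    for k in range(3):
        re += coeffs[l + k] * math.cos(2 * math.pi * k / 3)
        im += coeffs[l + k] * math.sin(2 * math.pi * k / 3)
    # For cos^2 channels the weighted phasor has angle 2*pi*(x - l)/3.
    angle = math.atan2(im, re) % (2 * math.pi)
    return l + 3 * angle / (2 * math.pi)

c = channel_encode(2.3, 8)
print([round(v, 3) for v in c])   # only channels 1, 2 and 3 respond
print(channel_decode(c))          # approximately 2.3
```

A learned mapping from such channel vectors to colour-name channels (red, dark red, and so on) would sit on top of this representation; that learning step is not shown here.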

“As humans, we can use reason to deduce what an object is by a process of elimination, i.e. we know that if something has such and such a property it must be this item, not that one. Though this type of machine reasoning has been used before, we have developed an advanced version for object recognition that uses symbolic and visual information to great effect,” Felsberg says.

Media Contact

All latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

A universal framework for spatial biology

SpatialData is a freely accessible tool to unify and integrate data from different omics technologies accounting for spatial information, which can provide holistic insights into health and disease. Biological processes…

How complex biological processes arise

A $20 million grant from the U.S. National Science Foundation (NSF) will support the establishment and operation of the National Synthesis Center for Emergence in the Molecular and Cellular Sciences (NCEMS) at…

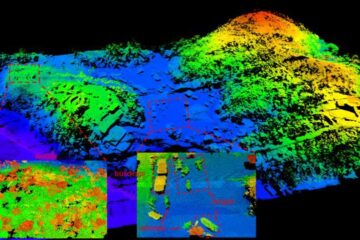

Airborne single-photon lidar system achieves high-resolution 3D imaging

Compact, low-power system opens doors for photon-efficient drone and satellite-based environmental monitoring and mapping. Researchers have developed a compact and lightweight single-photon airborne lidar system that can acquire high-resolution 3D…