New Supercomputer Rankings for "Big Data" Machines Released

New Graph500 rankings were released Tuesday in Seattle at SC2011, the international conference for high-performance computing.

NNSA/SC Blue Gene/Q Prototype II has risen to the top spot on the list and is the first National Nuclear Security Administration winner. Sandia’s Ultraviolet platform placed 10th using custom software, the Sandia Red Sky supercomputer dropped from 8th to 13th, and Dingus and Wingus, the insouciantly named Sandia prototype (Convey-based Field-Programmable Gate Array, or FPGA) platforms, placed 23rd and 24th, respectively.

The Graph500 stresses supercomputer performance on “big data” scaling problems rather than on the purely arithmetic computations measured by the Linpack Top500 and similar benchmarks. Graph500 machines are tested for their ability to solve complex problems involving random-appearing graphs, rather than simply for their speed in solving complex problems.

Such graph-based problems are found in the medical world, where large numbers of medical entries must be correlated; in the analysis of social networks, with their enormous numbers of electronically related participants; and in international security, where, for example, huge numbers of containers on ships roaming the world’s ports of call must be tracked.

“Companies are interested in doing well on the Graph500 because large-scale data analytics are an increasingly important problem area and could eclipse traditional high-performance computing (HPC) in overall importance to society,” said Murphy, whose committee receives input from 30 international researchers. Changes are implemented by Sandia, the Georgia Institute of Technology, the University of Illinois at Urbana-Champaign, Indiana University and others.

Big-data problems are solved by creating large, complex graphs with vertices that represent the data points — say, people on Facebook — and edges that represent relations between the data points — say, friends on Facebook. These problems stress the ability of computing systems to store and communicate large amounts of data in irregular, fast-changing communication patterns, rather than the ability to perform many arithmetic operations in succession. The Graph500 benchmarks indicate how well supercomputers handle such complex problems.
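To make the vertex-and-edge picture concrete, here is a minimal Python sketch of a tiny friendship graph and a breadth-first search over it. It rests on an assumption not stated in this article: the Graph500 benchmark is generally described as timing a breadth-first search over a very large synthetic graph. The names `build_graph`, `bfs_levels` and the sample people are hypothetical, chosen only for illustration, and this is not the benchmark's reference code.

```python
from collections import deque


def build_graph(edges):
    """Build an undirected adjacency list from (u, v) pairs."""
    graph = {}
    for u, v in edges:
        # Each friendship is stored in both directions.
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def bfs_levels(graph, root):
    """Return {vertex: hop distance from root} for every reachable vertex."""
    levels = {root: 0}
    queue = deque([root])
    while queue:
        u = queue.popleft()
        for v in graph[u]:
            if v not in levels:  # first visit fixes the shortest hop count
                levels[v] = levels[u] + 1
                queue.append(v)
    return levels


# Hypothetical data: vertices are people, edges are friendships.
friendships = [("ana", "ben"), ("ben", "cho"), ("cho", "dee"), ("ana", "eli")]
graph = build_graph(friendships)
levels = bfs_levels(graph, "ana")
```

Even in this toy case the work is dominated by following links to scattered neighbors rather than by arithmetic, which is the irregular access pattern the paragraph above describes. At benchmark scale the same traversal touches far more vertices than fit in any one processor's fast memory.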

The complete list of rankings is available at http://www.graph500.org/nov2011.html

More Information:

http://www.sandia.govAll latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

Microscopic basis of a new form of quantum magnetism

Not all magnets are the same. When we think of magnetism, we often think of magnets that stick to a refrigerator’s door. For these types of magnets, the electronic interactions…

An epigenome editing toolkit to dissect the mechanisms of gene regulation

A study from the Hackett group at EMBL Rome led to the development of a powerful epigenetic editing technology, which unlocks the ability to precisely program chromatin modifications. Understanding how…

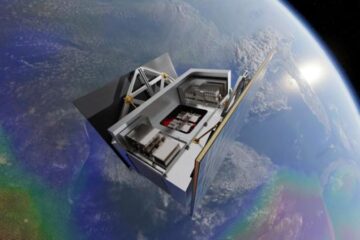

NASA selects UF mission to better track the Earth’s water and ice

NASA has selected a team of University of Florida aerospace engineers to pursue a groundbreaking $12 million mission aimed at improving the way we track changes in Earth’s structures, such…