Good ratings gone bad: study shows recommender systems can manipulate users’ opinions

Study also reports users lose trust in systems that give phony ratings

Online “recommender systems” are used to suggest highly rated selections for book buyers, movie renters or other consumers, but a new study by University of Minnesota computer science researchers shows for the first time that a system that lies about ratings can manipulate users’ opinions. Over time, however, users lose trust in unscrupulous systems.

The Minnesota research group, led by professors Joseph Konstan and John Riedl, conducted experiments with MovieLens, a member-driven movie recommendation Web site that the Minnesota team created to put its research on recommender systems into practice. The results of their latest studies will be presented at the CHI 2003 Conference on Human Factors in Computing Systems, April 5-10, 2003, in Fort Lauderdale, Fla. The work is supported by the National Science Foundation, the independent federal agency that supports basic research in all fields of science and engineering.

The results suggest that “shills,” who flood recommender systems with artificially high (or low) ratings, can have an impact and that recommender systems will take longer than previously expected to self-correct. The idea behind self-correction is that misled users will come back and give the item in question a more accurate rating.
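
To make the self-correction idea concrete, the following minimal sketch shows how a burst of shill ratings shifts an item's score and how many accurate follow-up ratings it takes to pull the score back. It assumes the simplest possible system, one that displays a plain average of all ratings; this is an illustration only, not how MovieLens computes predictions. The rating values, the `tolerance` parameter and the function names are hypothetical.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of ratings."""
    return sum(values) / len(values)


def ratings_to_recover(honest, shill, true_rating, tolerance=0.25):
    """Count the accurate ratings needed to bring the average back.

    Assumption: every returning user rates the item at exactly
    `true_rating`, which is the most optimistic case for self-correction.
    """
    if tolerance <= 0:
        raise ValueError("tolerance must be positive")
    pool = list(honest) + list(shill)
    added = 0
    while abs(mean(pool) - true_rating) > tolerance:
        pool.append(true_rating)
        added += 1
    return added


# Hypothetical data on a 1-5 scale: five honest ratings, ten shill ratings.
honest = [2, 2, 3, 2, 1]
shill = [5] * 10

print(mean(honest))                           # average before the attack
print(mean(honest + shill))                   # average after the attack
print(ratings_to_recover(honest, shill, 2))   # follow-up ratings needed
```

With these made-up numbers, ten shill ratings lift the average from 2.0 to 4.0, and it takes 105 accurate follow-up ratings to bring it back within a quarter point. The sketch is deliberately optimistic: if returning users are themselves nudged toward the inflated score, as the study suggests, recovery would take longer still.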

“This study isn’t about defending against shill attacks, but about trust in the system,” Konstan said. “Our results show that individual shilling will take longer to correct, but the larger impact is the loss of trust in the system.” In the Minnesota study, users were sensitive to the quality of the predictions and expressed dissatisfaction when the system gave inaccurate ratings.

Taken together, the experiments provide several tips for the designers of interfaces to recommender systems. Interfaces should allow users to concentrate on rating while ignoring predictions. Finer-grained rating scales are preferred over simple “thumbs up, thumbs down” scales, but are not essential to good predictions. Finally, because users are sensitive to manipulation and inaccurate predictions, to keep customers happy, no recommender system at all is better than a bad recommender system.

Many online shopping sites use recommender systems to help consumers cope with information overload and steer consumers toward products that might interest them. Recommender systems based on “collaborative filtering” generate recommendations based on a user’s selections or ratings and the ratings of other users with similar tastes.
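
As a rough illustration of collaborative filtering, the sketch below predicts one user's rating for an unseen movie as a similarity-weighted average of other users' ratings. It is a toy version of the general technique under stated assumptions, not the algorithm MovieLens uses: the user names, movie labels, ratings and the choice of cosine similarity are all hypothetical.

```python
from math import sqrt

# Hypothetical ratings on a 1-5 scale: user -> {movie: rating}.
RATINGS = {
    "ann": {"A": 5, "B": 4, "C": 1},
    "bob": {"A": 4, "B": 5, "C": 2, "D": 5},
    "cat": {"A": 1, "B": 2, "C": 5, "D": 1},
}


def cosine_similarity(u, v):
    """Cosine similarity over the movies both users rated (0.0 if none)."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[m] * v[m] for m in common)
    norm_u = sqrt(sum(u[m] ** 2 for m in common))
    norm_v = sqrt(sum(v[m] ** 2 for m in common))
    return dot / (norm_u * norm_v)


def predict(ratings, user, movie):
    """Similarity-weighted average of other users' ratings for `movie`.

    Returns None when no other user has rated the movie.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for other, their_ratings in ratings.items():
        if other == user or movie not in their_ratings:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        weighted_sum += sim * their_ratings[movie]
        weight_total += sim
    return weighted_sum / weight_total if weight_total else None


# ann has not rated movie D; bob's tastes resemble hers more than cat's do.
print(predict(RATINGS, "ann", "D"))
```

Because ann's past ratings track bob's much more closely than cat's, the prediction for movie D lands nearer to bob's 5 than to cat's 1. The same weighting is what makes such systems vulnerable to shills: fake profiles that look similar to a target user gain influence over that user's predictions.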

The CHI 2003 paper reports the results of three experiments that attempted to shed light on questions such as “Can the system make a user rate a ‘bad’ movie ‘good’?” “Do users notice when predictions are manipulated?” “Are users consistent in their ratings?” and “How do different rating scales affect users’ ratings?”

“To our knowledge, no one has studied how recommendations affect users’ opinions,” Konstan said. “We thought it was a socially important question because many of these systems are used in commercial applications. We found that if the system lies you can gain some influence in the short term, but you lose trust in the long term. This is a lot like trust between humans.”

In the first experiment, users re-rated movies while presented with some “predictions” that were different from the user’s prior rating of the movie. In the second experiment, users rated movies they hadn’t rated before. Some users saw “predictions” that were skewed higher or lower, while a control group saw only the system’s accurate predictions. The final experiment had users re-rate movies using three different rating scales and asked users their opinions of the different scales.

In the re-rating experiment, users were generally consistent, but they did tend to change their rating in the direction of the system’s skewed “prediction.” In fact, users could even be influenced to change a rating from negative to positive or vice versa. When users rated previously unrated movies, they tended to rate toward the system’s prediction, whether it was skewed or not.

Konstan also noted that, while this study used movie ratings, recommender systems have much wider application. Such systems can also be used for identifying highly rated research papers from a given field or for knowledge management in large enterprises. New employees, for example, can bring themselves up to speed by focusing on highly rated news items or background materials.

Media Contact

All latest news from the category: Communications Media

Engineering and research-driven innovations in the field of communications are addressed here, in addition to business developments in the field of media-wide communications.

innovations-report offers informative reports and articles related to interactive media, media management, digital television, E-business, online advertising and information and communications technologies.

Newest articles

Superradiant atoms could push the boundaries of how precisely time can be measured

Superradiant atoms can help us measure time more precisely than ever. In a new study, researchers from the University of Copenhagen present a new method for measuring the time interval,…

Ion thermoelectric conversion devices for near room temperature

The electrode sheet of the thermoelectric device consists of ionic hydrogel, which is sandwiched between the electrodes to form, and the Prussian blue on the electrode undergoes a redox reaction…

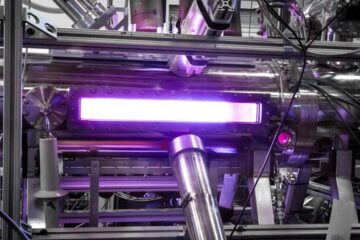

Zap Energy achieves 37-million-degree temperatures in a compact device

New publication reports record electron temperatures for a small-scale, sheared-flow-stabilized Z-pinch fusion device. In the nine decades since humans first produced fusion reactions, only a few fusion technologies have demonstrated…