Researchers Develop Optimal Algorithm for Determining Focus Error in Eyes and Cameras

Like a camera, the human eye has an auto-focusing system, but human auto-focusing rarely makes mistakes. And unlike a camera, humans do not need trial and error to bring an object into focus.

Johannes Burge, a postdoctoral fellow in the College of Liberal Arts’ Center for Perceptual Systems and co-author of the study, says it is significant that a statistical algorithm can now determine focus error from a single image, without trial and error. Focus error indicates how much a lens must be refocused to make the image sharp.

“Our research on defocus estimation could deepen our understanding of human depth perception,” Burge says. “Our results could also improve auto-focusing in digital cameras. We used basic optical modeling and well-understood statistics to show that there is information lurking in images that cameras have yet to tap.”

The researchers’ algorithm can be applied to any blurry image to determine focus error. An estimate of focus error also makes it possible to determine how far objects are from the focus distance.
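
Focus error is conventionally measured in diopters (inverse meters): the difference between the vergence of the focus distance and that of the object. The article does not spell out the conversion, so the sketch below simply applies that standard convention; the function name and parameters are hypothetical illustrations, not the researchers' code. It also shows why an unsigned estimate is ambiguous: the same amount of blur can come from an object nearer or farther than the focus distance.

```python
import math


def candidate_distances(focus_distance_m, defocus_diopters):
    """Hypothetical helper: map an unsigned defocus estimate to object distances.

    Assumes the standard convention defocus = |1/focus_distance - 1/object_distance|,
    with distances in meters and defocus in diopters.
    Returns (near_candidate, far_candidate).
    """
    focus_vergence = 1.0 / focus_distance_m

    # Object closer than the focus distance: its vergence is larger.
    near = 1.0 / (focus_vergence + defocus_diopters)

    # Object farther than the focus distance: its vergence is smaller.
    far_vergence = focus_vergence - defocus_diopters
    if far_vergence > 0:
        far = 1.0 / far_vergence
    elif far_vergence == 0:
        far = math.inf  # object at optical infinity
    else:
        far = None  # no real object beyond the focus distance blurs this much
    return near, far
```

For example, with the lens focused at 2 m (0.5 D), a defocus of 0.25 D is consistent with an object at about 1.33 m or at 4 m. Resolving which one is correct requires the sign of the focus error, which is where cues such as lens defects come in.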

In the human eye, inevitable defects in the lens, such as astigmatism, can help the visual system (via the retina and brain) compute focus error. These defects enrich the pattern of “defocus blur,” the blur caused when a lens is focused at the wrong distance. Humans use defocus blur both to estimate depth and to refocus their eyes. Many small animals use defocus as their primary depth cue.

“We are now one step closer to understanding how these feats are accomplished,” says Wilson Geisler, director of the Center for Perceptual Systems and co-author of the study. “The pattern of blur introduced by focus errors, along with the statistical regularities of natural images, makes this possible.”

Burge and Geisler first considered what happens to images as focus error increases: the larger the error, the more detail is lost. They then noted that even though the content of images varies considerably (e.g., faces, mountains, flowers), the pattern and amount of detail in images are remarkably constant. Because a sharp image can be expected to contain a predictable amount of detail, a shortfall of detail in an observed image can be attributed to defocus. This constancy makes it possible to determine the amount of defocus and, in turn, to refocus appropriately.
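
The principle can be illustrated with a toy one-dimensional sketch. This is not the estimator from the study. It makes several simplifying assumptions: natural signals are modeled as having an amplitude spectrum that falls off as 1/f (a common description of natural-image statistics), defocus is approximated by a Gaussian blur, and blur width is recovered with an ordinary least-squares fit. All function names are hypothetical. Because blur attenuates high frequencies by a known shape, the gap between the observed spectrum and the assumed 1/f prior reveals how much blur is present.

```python
import numpy as np


def synthesize_natural_signal(n, rng):
    """Random 1-D signal whose amplitude spectrum falls off as 1/f (assumed prior)."""
    freqs = np.fft.rfftfreq(n)  # cycles per sample, from 0 to 0.5
    coeffs = rng.normal(size=freqs.size) + 1j * rng.normal(size=freqs.size)
    coeffs[1:] /= freqs[1:]  # impose the 1/f amplitude falloff
    coeffs[0] = 0.0          # zero-mean signal
    return np.fft.irfft(coeffs, n)


def apply_gaussian_blur(signal, sigma):
    """Circular Gaussian blur (stand-in for defocus) via its analytic transfer function."""
    freqs = np.fft.rfftfreq(signal.size)
    transfer = np.exp(-2.0 * np.pi**2 * sigma**2 * freqs**2)
    return np.fft.irfft(np.fft.rfft(signal) * transfer, signal.size)


def estimate_blur_sigma(signal, f_max=0.25):
    """Estimate blur width (in samples) from a single signal, assuming a 1/f prior."""
    freqs = np.fft.rfftfreq(signal.size)
    amplitude = np.abs(np.fft.rfft(signal))
    band = (freqs > 0) & (freqs <= f_max)
    f = freqs[band]
    # Under the prior, log|X(f)| + log f = const - 2*pi^2*sigma^2*f^2 (+ noise),
    # so a line fitted against f^2 has slope -2*pi^2*sigma^2.
    y = np.log(amplitude[band]) + np.log(f)
    slope, _intercept = np.polyfit(f**2, y, 1)
    return float(np.sqrt(max(0.0, -slope) / (2.0 * np.pi**2)))


rng = np.random.default_rng(0)
sharp = synthesize_natural_signal(4096, rng)
blurred = apply_gaussian_blur(sharp, sigma=2.0)
print(estimate_blur_sigma(blurred))  # should land close to 2.0
```

The fit works on a single signal because the prior supplies the missing "what a sharp signal should look like" information, which mirrors the article's point about the constancy of detail in natural images. A real system would also need to handle noise, 2-D images, and the optical effects of actual lenses.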

Their article, titled “Optimal defocus estimation in individual natural images,” will be published in the Proceedings of the National Academy of Sciences. The research was supported by a grant from the National Institutes of Health.

The Center for Perceptual Systems is an integrated program that spans several departments: Neuroscience, Psychology, Electrical and Computer Engineering, Neurobiology, Computer Science, and Speech and Communication.

More Information:

http://www.utexas.edu

This topic covers issues related to energy generation, conversion, transportation and consumption and how the industry is addressing the challenge of energy efficiency in general.

innovations-report provides in-depth and informative reports and articles on subjects ranging from wind energy, fuel cell technology, solar energy, geothermal energy, petroleum, gas, nuclear engineering, alternative energy and energy efficiency to fusion, hydrogen and superconductor technologies.

Newest articles

Silicon Carbide Innovation Alliance to drive industrial-scale semiconductor work

Known for its ability to withstand extreme environments and high voltages, silicon carbide (SiC) is a semiconducting material made up of silicon and carbon atoms arranged into crystals that is…

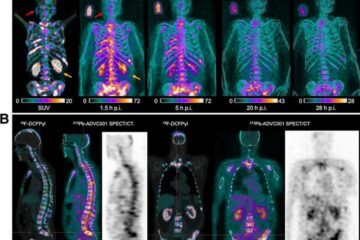

New SPECT/CT technique shows impressive biomarker identification

…offers increased access for prostate cancer patients. A novel SPECT/CT acquisition method can accurately detect radiopharmaceutical biodistribution in a convenient manner for prostate cancer patients, opening the door for more…

How 3D printers can give robots a soft touch

Soft skin coverings and touch sensors have emerged as a promising feature for robots that are both safer and more intuitive for human interaction, but they are expensive and difficult…