Neural network helps design brand new proteins

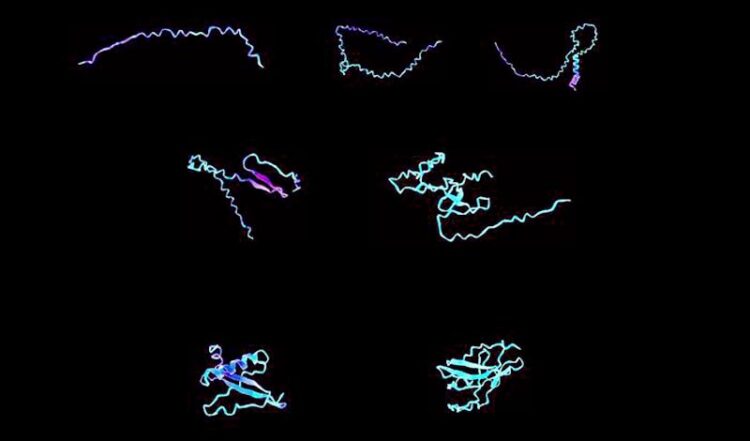

Sample visualizations of designer protein biomaterials, created using a transformer-graph neural network that can understand complex instructions and analyze and design materials from their ultimate building blocks.

Credit: Markus Buehler

A flexible, language-based approach proves surprisingly effective at solving intractable problems in materials science.

Proteins perform a wide range of biological tasks by assembling simple building blocks into intricate, dynamic structures in which geometry is key. Mapping this nearly limitless library of arrangements to their respective functions could let researchers design custom proteins for specific uses.

In Journal of Applied Physics, from AIP Publishing, Markus Buehler of the Massachusetts Institute of Technology combined attention neural networks, often referred to as transformers, with graph neural networks to better understand and design proteins. The approach couples the strengths of geometric deep learning with those of language models not only to predict existing protein properties but also to envision new proteins that nature has not yet devised.

“With this new method, we can utilize all that nature has invented as a knowledge basis by modeling the underlying principles,” Buehler said. “The model recombines these natural building blocks to achieve new functions and solve these types of tasks.”

Owing to their complex structures, ability to multitask, and tendency to change shape when dissolved, proteins have been notoriously difficult to model. Machine learning has demonstrated the ability to translate the nanoscale forces governing protein behavior into working frameworks describing their function. However, going the other way — turning a desired function into a protein structure — remains a challenge.

To overcome this challenge, Buehler’s model turns numbers, descriptions, tasks, and other elements into symbols for his neural networks to use.
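As a rough illustration of what turning mixed inputs into symbols can look like, the sketch below maps a text prompt containing a task name, a number, and an amino acid sequence onto integer tokens, one per character. The vocabulary, the prompt format (`solubility<0.25> [GSAGAGY]`), and the function names are assumptions made for this example; they are not taken from Buehler's paper or code.

```python
# Hypothetical character-level tokenizer. The vocabulary and the prompt
# format are illustrative assumptions, not the scheme used in the paper.

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard one-letter codes
EXTRA = "abcdefghijklmnopqrstuvwxyz0123456789.,-<>[] "  # task text, numbers, delimiters


def build_vocab():
    """Assign one integer id to every distinct symbol."""
    symbols = []
    for ch in AMINO_ACIDS + EXTRA:
        if ch not in symbols:
            symbols.append(ch)
    return {ch: i for i, ch in enumerate(symbols)}


def encode(text, vocab):
    """Turn a prompt into a list of integer tokens, one per character."""
    return [vocab[ch] for ch in text]


def decode(ids, vocab):
    """Invert encode(): map integer tokens back to text."""
    inverse = {i: ch for ch, i in vocab.items()}
    return "".join(inverse[i] for i in ids)


vocab = build_vocab()
prompt = "solubility<0.25> [GSAGAGY]"  # made-up task, value, and sequence
ids = encode(prompt, vocab)
assert decode(ids, vocab) == prompt  # encoding is lossless
```

Because the task name, the number, and the sequence all share one vocabulary, a single sequence model can in principle read any of them as input and emit any of them as output, which is the general idea behind a multitask, language-based formulation.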

He first trained his model to predict the sequence, solubility, and amino acid building blocks of different proteins from their functions. He then taught it to get creative and generate brand new structures after receiving initial parameters for a new protein’s function.

The approach allowed him to create solid versions of antimicrobial proteins that previously had to be dissolved in water. In another example, his team took a naturally occurring silk protein and evolved it into various new forms, giving it a helix shape for more elasticity or a pleated structure for additional toughness.

The model performed many of the central tasks of designing new proteins, but Buehler said the approach can incorporate even more inputs for more tasks, potentially making it even more powerful.

“A big surprise element was that the model performed exceptionally well even though it was developed to be able to solve multiple tasks. This is likely because the model learns more by considering diverse tasks,” he said. “This change means that rather than creating specialized models for specific tasks, researchers can now think broadly in terms of multitask and multimodal models.”

The broad nature of this approach means this model can be applied to many areas outside protein design.

“While our current focus is proteins, this method has vast potential in materials science,” Buehler said. “We’re especially keen on exploring material failure behaviors, aiming to design materials with specific failure patterns.”

The article “Generative pretrained autoregressive transformer graph neural network applied to the analysis and discovery of novel proteins” is authored by Markus Buehler. It will appear in Journal of Applied Physics on Aug. 29, 2023 (DOI: 10.1063/5.0157367). After that date, it can be accessed at https://doi.org/10.1063/5.0157367.

ABOUT THE JOURNAL

The Journal of Applied Physics is an influential international journal publishing significant new experimental and theoretical results in all areas of applied physics. See https://aip.scitation.org/journal/jap.

Media Contact

Wendy Beatty

American Institute of Physics

media@aip.org

Office: 301.209.3090
