Don’t always believe what you see, suggests study on false memories

People can easily be swayed into believing that they have seen something they never actually saw, say researchers at Ohio State University.

Participants in a study looked at a series of slides portraying geometric shapes. They were later shown a second set of five test slides: two contained images from the original group of slides, two contained images that were obviously not part of the original set, and one contained the lure image, a shape very similar to those shown in the original slide set but not actually part of it.

Participants correctly identified the shapes they had seen in the original slides 80 percent of the time. But more often than not – nearly 60 percent of the time – the subjects said that they had indeed seen the lure image in the original group of shapes, even though it hadn’t been there.

“This suggests that visual false memories can be induced pretty easily,” said David Beversdorf, the study’s lead author and an assistant professor of neurology at Ohio State University. “While using context helps us to remember things, it can also throw us off.”

Researchers know a good deal about the false memory effect as it applies to language.

“People are susceptible to verbal false memories, whether it’s something that was actually said or an object they have a mental description of,” Beversdorf said. “We wanted to know if the ability to induce false memories extends beyond the language system – if it also affects the visual system, even when the images aren’t easily verbalized. It appears that the ability to create false memories does extend beyond language.”

Beversdorf presented the findings on November 8 in New Orleans at the annual Society for Neuroscience conference. He conducted the research with Nicole Phillips, a recent graduate of Ohio State’s medical school, and Ashleigh Hillier, a postdoctoral research fellow with Ohio State’s department of neurology.

The study included 23 young adults with no history of mental impairment. Participants were shown 24 sets of 12 slides. Each set of slides portrayed different geometric shapes, which varied in number, size, position, shape and color.

After studying each group of slides, the participants were shown an additional five slides and asked if they had seen any of the shapes on these additional slides in the original set.

For example, participants were shown a set of 12 slides each showing yellow triangles. Each slide showed one, two or three large or small triangles; multiple triangles were arranged either vertically or horizontally. In this case, the lure slide – part of the five additional test slides – showed two small yellow triangles lined up horizontally below a large yellow triangle. But while it looked similar to slides in the original group, it wasn’t part of the initial set.

Participants accurately identified the images they had seen in the original set 80 percent of the time. They also correctly identified the images that obviously weren’t part of the original set of shapes more than 98 percent of the time. But they incorrectly said they had seen the lure images 60 percent of the time.

“False memories can be created using visual stimuli with minimal language input,” Beversdorf said. “The question now is whether this can be done entirely without the use of semantics.”

Beversdorf plans to apply these findings – and this research model – to future studies on autistic people.

In previous work, Beversdorf found that some people with autism spectrum disorder (ASD) performed better on a “false-memory” test than did normal control subjects. People with ASD have an impaired ability to use context, and, in a prior study, that inability improved the ASD subjects’ ability to discriminate between words that had and had not been on a word list.

“Language impairment is part of the syndrome of autism,” Beversdorf said. “We know that these people are better able to discriminate between words meant to trigger false memories and previously seen words, compared to normal control subjects. This model lets us explore those findings further, to see if we get similar results when objects are primarily visual.

“This work may give us insight into what autistic people can do well – to find the things that they are particularly good at in order to help harness their abilities.”

This study was funded by the National Institutes of Health’s National Institute of Neurological Disorders and Stroke.

Contact: David Beversdorf, (614) 293-8531; Beversdorf-1@medctr.osu.edu

Written by Holly Wagner, (614) 292-8310; Wagner.235@osu.edu

Media Contact

All latest news from the category: Social Sciences

This area deals with the latest developments in the field of empirical and theoretical research as it relates to the structure and function of institutes and systems, their social interdependence and how such systems interact with individual behavior processes.

innovations-report offers informative reports and articles related to the social sciences field including demographic developments, family and career issues, geriatric research, conflict research, generational studies and criminology research.

Newest articles

Silicon Carbide Innovation Alliance to drive industrial-scale semiconductor work

Known for its ability to withstand extreme environments and high voltages, silicon carbide (SiC) is a semiconducting material made up of silicon and carbon atoms arranged into crystals that is…

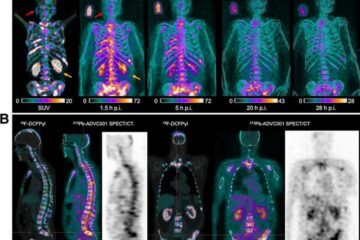

New SPECT/CT technique shows impressive biomarker identification

…offers increased access for prostate cancer patients. A novel SPECT/CT acquisition method can accurately detect radiopharmaceutical biodistribution in a convenient manner for prostate cancer patients, opening the door for more…

How 3D printers can give robots a soft touch

Soft skin coverings and touch sensors have emerged as a promising feature for robots that are both safer and more intuitive for human interaction, but they are expensive and difficult…