Searching for Dark Energy with the Whole World’s Supernova Dataset

The international Supernova Cosmology Project (SCP), based at the U.S. Department of Energy’s Lawrence Berkeley National Laboratory, has announced the Union2 compilation of hundreds of Type Ia supernovae, the largest collection ever of high-quality data from numerous surveys. Analysis of the new compilation significantly narrows the possible values that dark energy might take—but not enough to decide among fundamentally different theories of its nature.

“We’ve used the world’s best-yet dataset of Type Ia supernovae to determine the world’s best-yet constraints on dark energy,” says Saul Perlmutter, leader of the SCP. “We’ve tightened in on dark energy out to redshifts of one”—when the universe was only about six billion years old, less than half its present age—“but while at lower redshifts the values are perfectly consistent with a cosmological constant, the most important questions remain.”

That’s because possible values of dark energy from supernova data become increasingly uncertain at redshifts greater than one-half, the range where dark energy’s effects on the expansion of the universe are most apparent as we look farther back in time. Says Perlmutter of the widening error bars at higher redshifts, “Right now, you could drive a truck through them.”

As its name implies, the cosmological constant fills space with constant pressure, counteracting the mutual gravitational attraction of all the matter in the universe; it is often identified with the energy of the vacuum. If indeed dark energy turns out to be the cosmological constant, however, even more questions will arise.

“There is a huge discrepancy between the theoretical prediction for vacuum energy and what we measure as dark energy,” says Rahman Amanullah, who led SCP’s Union2 analysis; Amanullah is presently with the Oskar Klein Center at Stockholm University and was a postdoctoral fellow in Berkeley Lab’s Physics Division from 2006 to 2008. “If it turns out in the future that dark energy is consistent with a cosmological constant also at early times of the universe, it will be an enormous challenge to explain this at a fundamental theoretical level.”

A major group of competing theories posits a dynamical form of dark energy that varies in time. Choosing among theories means comparing what they predict about the dark energy equation of state, a value written w. While the new analysis has detected no change in w, there is much room for possibly significant differences in w with increasing redshift (written z).

“Most dark-energy theories are not far from the cosmological constant at z less than one,” Perlmutter says. “We’re looking for deviations in w at high z, but there the values are very poorly constrained.”
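To make the role of w concrete: w is the ratio of dark energy's pressure to its energy density, and a cosmological constant corresponds to w = -1 exactly. The sketch below is an illustration only, not the SCP's analysis code. It assumes a spatially flat universe with a constant w, and the parameter values (a matter density of 0.27 and a Hubble constant of 70 km/s/Mpc) are placeholder assumptions rather than Union2 results. It computes the distance modulus a supernova would show at a given redshift, so that the predictions of two values of w can be compared.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s


def hubble_ratio(z, omega_m, w):
    """E(z) = H(z)/H0 for a flat universe whose dark energy has constant w."""
    omega_de = 1.0 - omega_m  # flatness assumption
    return math.sqrt(
        omega_m * (1.0 + z) ** 3 + omega_de * (1.0 + z) ** (3.0 * (1.0 + w))
    )


def distance_modulus(z, omega_m=0.27, w=-1.0, h0=70.0, steps=1000):
    """Distance modulus at redshift z (illustrative default parameters).

    Integrates 1/E(z) with Simpson's rule; `steps` must be even.
    """
    h = z / steps
    total = 0.0
    for i in range(steps + 1):
        if i == 0 or i == steps:
            weight = 1.0
        elif i % 2 == 1:
            weight = 4.0
        else:
            weight = 2.0
        total += weight / hubble_ratio(i * h, omega_m, w)
    comoving_mpc = (C_KM_S / h0) * total * h / 3.0
    lum_dist_mpc = (1.0 + z) * comoving_mpc
    return 5.0 * math.log10(lum_dist_mpc) + 25.0


if __name__ == "__main__":
    for z in (0.25, 0.5, 1.0, 1.5):
        diff = distance_modulus(z, w=-0.9) - distance_modulus(z, w=-1.0)
        print(f"z = {z}: mu(w=-0.9) - mu(w=-1) = {diff:.3f} mag")
```

The printed differences show how far apart the two models sit at each redshift under these assumptions; a survey can tell them apart only if its error bars at that redshift are smaller than the gap.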

In their new analysis to be published in the Astrophysical Journal, “Spectra and HST light curves of six Type Ia supernovae at 0.511

Dark energy fills the universe, but what is it?

Dark energy was discovered in the late 1990s by the Supernova Cosmology Project and the competing High-Z Supernova Search Team, both using distant Type Ia supernovae as “standard candles” to measure the expansion history of the universe. To their surprise, both teams found that expansion is not slowing due to gravity but accelerating.

Other methods for measuring the history of cosmic expansion have been developed, including baryon acoustic oscillations and weak gravitational lensing, but supernovae remain the most advanced technique. Indeed, in the years since dark energy was discovered using only a few dozen Type Ia supernovae, many new searches have been mounted with ground-based telescopes and the Hubble Space Telescope, many hundreds of Type Ia’s have been discovered, and techniques for measuring and comparing them have continually improved.

In 2008 the SCP, led by the work of team member Marek Kowalski of the Humboldt University of Berlin, created a way to cross-correlate and analyze datasets from different surveys made with different instruments, resulting in the SCP’s first Union compilation. In 2009 a number of new surveys were added.

The inclusion of six new high-redshift supernovae found by the SCP in 2001, including two with z greater than one, is the first in a series of very high-redshift additions to the Union2 compilation now being announced, and brings the current number of supernovae in the whole compilation to 557.

“Even with the world’s premier astronomical observatories, obtaining good quality, time-critical data of supernovae that are beyond a redshift of one is a difficult task,” says SCP member Chris Lidman of the Anglo-Australian Observatory near Sydney, a major contributor to the analysis. “It requires close collaboration between astronomers who are spread over several continents and several time zones. Good team work is essential.”

Union2 has not only added many new supernovae to the Union compilation but has refined the methods of analysis and in some cases improved the observations. The latest high-z supernovae in Union2 include the most distant supernovae for which ground-based near-infrared observations are available, a valuable opportunity to compare ground-based and Hubble Space Telescope observations of very distant supernovae.

Type Ia supernovae are the best standard candles ever found for measuring cosmic distances because the great majority are so bright and so similar in brightness. Light-curve fitting is the basic method for standardizing what variations in brightness remain: supernova light curves (their rising and falling brightness over time) are compared and uniformly adjusted to yield comparative intrinsic brightness. The light curves of all the hundreds of supernovae in the Union2 collection have been consistently reanalyzed.
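As a rough illustration of what "uniformly adjusted" can mean, one widely used approach corrects each supernova's peak brightness using two numbers taken from its fitted light curve: a shape (or "stretch") parameter and a color. The sketch below assumes that linear form. The function name and the coefficient values (alpha, beta, and the absolute magnitude) are hypothetical placeholders chosen for illustration, not the values fitted in the Union2 analysis.

```python
def standardized_distance_modulus(
    peak_mag, shape, color, alpha=0.12, beta=2.5, abs_mag=-19.3
):
    """Distance modulus from fitted light-curve parameters.

    peak_mag : apparent peak magnitude from the light-curve fit
    shape    : light-curve width parameter (broader, slower curves > 0)
    color    : color parameter (redder supernovae > 0)
    alpha, beta, abs_mag : illustrative placeholder coefficients
    """
    # Broader light curves belong to intrinsically brighter supernovae,
    # and redder supernovae appear dimmer; both effects are corrected
    # linearly so that all events share a common brightness scale.
    return peak_mag - abs_mag + alpha * shape - beta * color


if __name__ == "__main__":
    # Three hypothetical supernovae with the same apparent peak magnitude
    for shape, color in [(0.0, 0.0), (1.0, 0.0), (0.0, 0.1)]:
        mu = standardized_distance_modulus(24.0, shape, color)
        print(f"shape={shape:+.1f}, color={color:+.2f} -> mu = {mu:.2f}")
```

In a real analysis the coefficients are fitted together with the cosmological parameters rather than fixed in advance, which is one reason reanalyzing every light curve consistently matters.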

The upshot of these efforts is improved handling of systematic errors and improved constraints on the value of the dark energy equation of state with increasing redshift, although with greater uncertainty at very high redshifts. When combined with data from cosmic microwave background and baryon oscillation surveys, the “best fit cosmology” remains the so-called Lambda Cold Dark Matter model, or ΛCDM.

ΛCDM has become the standard model of our universe, which began with a big bang, underwent a brief period of inflation, and has continued to expand, although at first retarded by the mutual gravitational attraction of matter. As matter spread and grew less dense, dark energy overcame gravity, and expansion has been accelerating ever since.

To learn just what dark energy is, however, will first require scientists to capture many more supernovae at high redshifts and thoroughly study their light curves and spectra. This can’t be done with telescopes on the ground or even by heavily subscribed space telescopes. Learning the nature of what makes up three-quarters of the density of our universe will require a dedicated observatory in space.

This work was supported in part by the U.S. Department of Energy’s Office of Science.

Berkeley Lab is a U.S. Department of Energy national laboratory located in Berkeley, California. It conducts unclassified scientific research for DOE’s Office of Science and is managed by the University of California. Visit our website at http://www.lbl.gov.

Media Contact

More Information:

http://www.lbl.govAll latest news from the category: Physics and Astronomy

This area deals with the fundamental laws and building blocks of nature and how they interact, the properties and the behavior of matter, and research into space and time and their structures.

innovations-report provides in-depth reports and articles on subjects such as astrophysics, laser technologies, nuclear, quantum, particle and solid-state physics, nanotechnologies, planetary research and findings (Mars, Venus) and developments related to the Hubble Telescope.

Newest articles

Silicon Carbide Innovation Alliance to drive industrial-scale semiconductor work

Known for its ability to withstand extreme environments and high voltages, silicon carbide (SiC) is a semiconducting material made up of silicon and carbon atoms arranged into crystals that is…

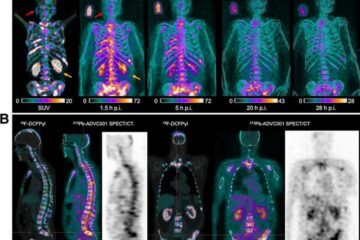

New SPECT/CT technique shows impressive biomarker identification

…offers increased access for prostate cancer patients. A novel SPECT/CT acquisition method can accurately detect radiopharmaceutical biodistribution in a convenient manner for prostate cancer patients, opening the door for more…

How 3D printers can give robots a soft touch

Soft skin coverings and touch sensors have emerged as a promising feature for robots that are both safer and more intuitive for human interaction, but they are expensive and difficult…