Quantum computers may be easier to build than predicted

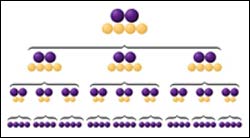

The new NIST architecture for quantum computing relies on several levels of error checking to ensure the accuracy of quantum bits (qubits). The image above illustrates how qubits are grouped in blocks to form the levels. To implement the architecture with three levels, a series of operations is performed on 36 qubits (bottom row), each one representing either a 1, a 0, or both at once. The operations on the nine sets of qubits produce two reliably accurate qubits (top row). The purple spheres represent qubits that are used either in error detection or in actual computations. The yellow spheres are qubits that are measured to detect or correct errors but are not used in final computations.

A full-scale quantum computer could produce reliable results even if its components performed no better than today’s best first-generation prototypes, according to a paper in the March 3 issue of the journal Nature by a scientist at the Commerce Department’s National Institute of Standards and Technology (NIST).

In theory, such a quantum computer could be used to break commonly used encryption codes, to improve optimization of complex systems such as airline schedules, and to simulate other complex quantum systems.

A key issue for the reliability of future quantum computers–which would rely on the unusual properties of nature’s smallest particles to store and process data–is the fragility of quantum states. Today’s computers use millions of transistors that are switched on or off to reliably represent values of 1 or 0. Quantum computers would use atoms, for example, as quantum bits (qubits), whose magnetic and other properties would be manipulated to represent 1 or 0 or even both at the same time. These states are so delicate that qubit values would be unusually susceptible to errors caused by the slightest electronic “noise.”

To get around this problem, NIST scientist Emanuel Knill suggests using a pyramid-style hierarchy of qubits made of smaller and simpler building blocks than envisioned previously, and teleportation of data at key intervals to continuously double-check the accuracy of qubit values. Teleportation was demonstrated last year by NIST physicists, who transferred key properties of one atom to another atom without using a physical link.

“There has been a tremendous gap between theory and experiment in quantum computing,” Knill says. “It is as if we were designing today’s supercomputers in the era of vacuum tube computing, before the invention of transistors. This work reduces the gap, showing that building quantum computers may be easier than we thought. However, it will still take a lot of work to build a useful quantum computer.”

Use of Knill’s architecture could lead to reliable computing even if individual logic operations made errors as often as 3 percent of the time–performance levels already achieved in NIST laboratories with qubits based on ions (charged atoms). The proposed architecture could tolerate several hundred times more errors than scientists had generally thought acceptable.

Knill’s findings are based on several months of calculations and simulations on large, conventional computer workstations. The new architecture, which has yet to be validated by mathematical proofs or tested in the laboratory, relies on a series of simple procedures for repeatedly checking the accuracy of blocks of qubits. This process creates a hierarchy of qubits at various levels of validation.

For instance, to achieve relatively low error probabilities in moderately long computations, 36 qubits would be processed in three levels to arrive at one corrected pair. Only the top-tier, or most accurate, qubits are actually used for computations. The more levels there are, the more reliable the computation will be.
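The qubit counts quoted above can be reproduced with a little arithmetic. The sketch below is only an illustration under a stated assumption: that the bottom level groups four physical qubits into each block and every higher level combines three blocks from the level beneath it. That layout is not spelled out in the article; it is simply one grouping consistent with the figures given (36 qubits, nine sets, three levels, one output pair). The function names and parameters are hypothetical.

```python
def physical_qubits(levels, base_block=4, branching=3):
    """Physical qubits needed for one top-level pair.

    Assumption (not stated in the article): each bottom-level block holds
    `base_block` qubits and each higher level merges `branching` blocks.
    """
    if levels < 1:
        raise ValueError("levels must be at least 1")
    return base_block * branching ** (levels - 1)


def blocks_per_level(levels, branching=3):
    """Number of blocks at each level, listed from the bottom row upward."""
    if levels < 1:
        raise ValueError("levels must be at least 1")
    return [branching ** (levels - k) for k in range(1, levels + 1)]


if __name__ == "__main__":
    for levels in range(1, 5):
        print(levels, physical_qubits(levels), blocks_per_level(levels))
```

With three levels this gives 36 physical qubits arranged as nine bottom-level sets, matching the numbers in the article. Under the same assumption, each additional level triples the hardware needed for a single pair, which hints at why the overhead grows quickly.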

Knill’s methods for detecting and correcting errors rely heavily on teleportation. Teleportation enables scientists to measure how errors have affected a qubit’s value while transferring the stored information to other qubits not yet perturbed by errors. The original qubit’s quantum properties would be teleported to another qubit as the original qubit is measured.
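For readers who want to see the basic mechanism, here is a minimal sketch of the textbook single-qubit teleportation protocol, simulated with plain Python complex numbers. It is not Knill’s error-correcting procedure, which teleports encoded blocks and uses the measurement results to diagnose errors; it only shows how measuring the original qubit can leave its state on a different qubit. All function names are hypothetical, and qubit 0 is taken to be the original, with qubits 1 and 2 forming the helper pair.

```python
import math
import random

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]   # Hadamard gate
X = [[0, 1], [1, 0]]    # bit flip
Z = [[1, 0], [0, -1]]   # phase flip
N = 3                   # qubits in the simulation; qubit 0 is the top bit


def apply_gate(state, gate, target):
    """Apply a 2x2 gate to one qubit of an 8-amplitude state vector."""
    shift = N - 1 - target
    new = [0j] * len(state)
    for i, amp in enumerate(state):
        bit = (i >> shift) & 1
        for out in (0, 1):
            j = (i & ~(1 << shift)) | (out << shift)
            new[j] += gate[out][bit] * amp
    return new


def apply_cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is 1."""
    cs, ts = N - 1 - control, N - 1 - target
    new = [0j] * len(state)
    for i, amp in enumerate(state):
        j = i ^ (1 << ts) if (i >> cs) & 1 else i
        new[j] += amp
    return new


def measure(state, qubit, rng):
    """Measure one qubit; return the outcome and the collapsed state."""
    shift = N - 1 - qubit
    p1 = sum(abs(a) ** 2 for i, a in enumerate(state) if (i >> shift) & 1)
    outcome = 1 if rng.random() < p1 else 0
    norm = math.sqrt(p1 if outcome else 1 - p1)
    collapsed = [a / norm if ((i >> shift) & 1) == outcome else 0j
                 for i, a in enumerate(state)]
    return outcome, collapsed


def teleport(alpha, beta, rng=None):
    """Teleport alpha|0> + beta|1> from qubit 0 to qubit 2."""
    rng = rng or random.Random()
    state = [0j] * 8
    state[0b000], state[0b100] = alpha, beta
    # Entangle the helper qubits 1 and 2.
    state = apply_cnot(apply_gate(state, H, 1), 1, 2)
    # Couple the original qubit to the helper pair, then measure.
    state = apply_gate(apply_cnot(state, 0, 1), H, 0)
    m0, state = measure(state, 0, rng)
    m1, state = measure(state, 1, rng)
    # Corrections on the receiving qubit depend on the measured bits.
    if m1:
        state = apply_gate(state, X, 2)
    if m0:
        state = apply_gate(state, Z, 2)
    base = (m0 << 2) | (m1 << 1)
    return m0, m1, (state[base], state[base | 1])
```

Calling `teleport(0.6, 0.8j)` returns the two measured bits together with the amplitudes now held by qubit 2, which should equal the inputs up to rounding regardless of which bits were measured. In the architecture described here, those measured bits would additionally carry information about errors; that part is outside this sketch.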

The new architecture allows trade-offs between error rates and computing resource demands. To tolerate 3 percent error rates in components, massive amounts of computing hardware and processing time would be needed, partly because of the “overhead” involved in correcting errors. Fewer resources would be needed if component error rates could be reduced further, Knill’s calculations show.

More Information:

http://www.nist.gov

This area deals with the fundamental laws and building blocks of nature and how they interact, the properties and the behavior of matter, and research into space and time and their structures.

innovations-report provides in-depth reports and articles on subjects such as astrophysics, laser technologies, nuclear, quantum, particle and solid-state physics, nanotechnologies, planetary research and findings (Mars, Venus) and developments related to the Hubble Telescope.

Newest articles

Silicon Carbide Innovation Alliance to drive industrial-scale semiconductor work

Known for its ability to withstand extreme environments and high voltages, silicon carbide (SiC) is a semiconducting material made up of silicon and carbon atoms arranged into crystals that is…

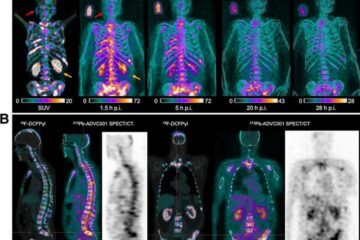

New SPECT/CT technique shows impressive biomarker identification

…offers increased access for prostate cancer patients. A novel SPECT/CT acquisition method can accurately detect radiopharmaceutical biodistribution in a convenient manner for prostate cancer patients, opening the door for more…

How 3D printers can give robots a soft touch

Soft skin coverings and touch sensors have emerged as a promising feature for robots that are both safer and more intuitive for human interaction, but they are expensive and difficult…