Monkeys Consciously Control a Robot Arm Using Only Brain Signals

Appear to “Assimilate” Arm As If it Were Their Own

Researchers at Duke University Medical Center have taught rhesus monkeys to consciously control the movement of a robot arm in real time, using only signals from their brains and visual feedback on a video screen. The scientists said that the animals appeared to operate the robot arm as if it were their own limb.

The scientists and engineers said their achievement represents an important step toward technology that could enable paralyzed people to use brain signals to control “neuroprosthetic” limbs, and even free-roaming “neurorobots.”

Importantly, said the neurobiologists, the technology they developed for analyzing brain signals from behaving animals could also greatly improve rehabilitation of people with brain and spinal cord damage from stroke, disease or trauma. By understanding the biological factors that control the brain’s adaptability, they said, clinicians could develop improved drugs and rehabilitation methods for people with such damage.

The advance was reported in an article published online Oct. 13, 2003, in the Public Library of Science (PLoS), by neurobiologists led by Miguel Nicolelis, M.D., who is professor of neurobiology and co-director of the Duke Center for Neuroengineering. Lead author of the paper was Jose Carmena, Ph.D., in the Nicolelis laboratory. The other senior co-author, besides Nicolelis, was Craig Henriquez, Ph.D., associate professor of biomedical engineering in the Pratt School of Engineering and the center’s other co-director. The research was funded by the Defense Advanced Research Projects Agency and the James S. McDonnell Foundation.

Nicolelis cited numerous researchers at other institutions whose work has been central to the field of brain-machine interfaces and to understanding the brain, and whose insights helped lead to the latest achievement. They include John Chapin, Ph.D., State University of New York Health Science Center, Brooklyn; Eberhard Fetz, Ph.D., University of Washington, Seattle; Jon Kaas, Ph.D., Vanderbilt University; Idan Segev, Ph.D., Hebrew University, Jerusalem; and Karen Moxon, Ph.D., Drexel University.

In previous research, Nicolelis and his colleagues demonstrated a brain-signal recording and analysis system that enabled them to decipher brain signals from owl monkeys in order to control the movement of a robot arm.

The latest work by the Duke researchers is the first to demonstrate that monkeys can learn to use only visual feedback and brain signals, without resorting to any muscle movement, to control a mechanical robot arm, including both reaching and grasping movements.

In their experiments, the researchers first implanted an array of microelectrodes — each smaller than the diameter of a human hair — into the frontal and parietal lobes of the brains of two female rhesus macaque monkeys. They implanted 96 electrodes in one animal and 320 in the other. The researchers reported their technology of implanting arrays of hundreds of electrodes and recording from them over long periods in a Sept. 16, 2003, article in the Proceedings of the National Academy of Sciences.

The researchers chose frontal and parietal areas of the brain because they are known to be involved in producing multiple output commands to control complex muscle movement.

The faint signals from the electrode arrays were detected and analyzed by the computer system the researchers had developed to recognize patterns of signals that represented particular movements by an animal’s arm.
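As a rough illustration of what recognizing such patterns can involve, the sketch below fits a simple linear decoder that maps firing rates from a few recording channels to a movement value. The article does not describe the model the Duke system used, so the linear form, the training rule (least mean squares), the function names `decode` and `fit_lms`, and the synthetic data are all assumptions made for illustration only.

```python
# Hypothetical sketch: a linear decoder from firing rates to a 1-D movement value.
# Nothing here is taken from the Duke system; names and data are illustrative.

def decode(weights, rates):
    """Predict a movement value as a weighted sum of per-channel firing rates."""
    return sum(w * r for w, r in zip(weights, rates))


def fit_lms(samples, targets, n_channels, learning_rate=0.1, epochs=100):
    """Fit decoder weights with the least-mean-squares (LMS) update rule."""
    weights = [0.0] * n_channels
    for _ in range(epochs):
        for rates, target in zip(samples, targets):
            error = target - decode(weights, rates)
            # Nudge each weight in proportion to its channel's activity.
            weights = [w + learning_rate * error * r
                       for w, r in zip(weights, rates)]
    return weights


# Synthetic, noise-free "recordings": three channels on a grid of firing rates.
TRUE_WEIGHTS = [0.5, -0.3, 0.2]  # assumed ground truth, for the demo only
levels = [0.0, 0.25, 0.5, 0.75, 1.0]
samples = [[a, b, c] for a in levels for b in levels for c in levels]
targets = [decode(TRUE_WEIGHTS, s) for s in samples]

weights = fit_lms(samples, targets, n_channels=3)
```

On this noise-free toy data the fitted weights settle close to the assumed ground truth. Real recordings are noisy and span hundreds of channels, so a practical decoder would need far more care than this sketch suggests.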

In the initial behavioral experiments, the researchers recorded and analyzed the output signals from the monkeys’ brains as the animals were taught to use a joystick both to position a cursor over a target on a video screen and to grasp the joystick with a specified force.

After the animals’ initial training, however, the researchers made the cursor more than a simple display: its movement now incorporated the dynamics, such as inertia and momentum, of a robot arm functioning in another room. The scientists found that while the animals’ performance initially declined when the robot arm was included in the feedback loop, the animals quickly learned to allow for these dynamics and became proficient in manipulating the robot-reflecting cursor.
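To see why such dynamics make the task harder, consider a minimal sketch in which the cursor behaves like a damped point mass rather than following the command directly. The one-dimensional model, the function name `simulate_cursor`, and the mass, damping and time-step values are illustrative assumptions, not parameters of the Duke robot arm.

```python
# Hypothetical sketch: a cursor with inertia, modeled as a 1-D damped point mass.
# Parameter values are arbitrary and chosen only to make the effect visible.

def simulate_cursor(commands, mass=1.0, damping=2.0, dt=0.01):
    """Integrate cursor position under a sequence of commanded forces."""
    position, velocity = 0.0, 0.0
    trajectory = []
    for force in commands:
        # The commanded force is opposed by viscous damping.
        acceleration = (force - damping * velocity) / mass
        velocity += acceleration * dt
        position += velocity * dt
        trajectory.append(position)
    return trajectory


# Push for 100 time steps, then release for 100 steps.
commands = [1.0] * 100 + [0.0] * 100
trajectory = simulate_cursor(commands)

position_at_release = trajectory[99]
final_position = trajectory[-1]
```

In this toy model the cursor keeps drifting after the command stops, so `final_position` ends up beyond `position_at_release`. An operator has to anticipate that overshoot, which is consistent with the initial drop in performance the scientists describe.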

The scientists next removed the joystick, after which the monkeys continued to move their arms in mid-air to manipulate and “grab” the cursor, thus controlling the robot arm.

“The most amazing result, though, was that after only a few days of playing with the robot in this way, the monkey suddenly realized that she didn’t need to move her arm at all,” said Nicolelis. “Her arm muscles went completely quiet, she kept the arm at her side and she controlled the robot arm using only her brain and visual feedback. Our analyses of the brain signals showed that the animal learned to assimilate the robot arm into her brain as if it was her own arm.” Importantly, said Nicolelis, the experiments included both reaching and grasping movements, with the control signals for both derived from the same sets of electrodes.

“We knew that the neurons from which we were recording could encode different kinds of information,” said Nicolelis. “But what was a surprise is that the animal can learn to time the activity of the neurons to basically control different types of parameters sequentially. For example, after using a group of neurons to move the robot to a certain point, these same cells would then produce the force output that the animals need to hold an object. None of us had ever encountered an ability like that.”

Also importantly, said Nicolelis, analysis of the signals from the animals’ brains as they learned revealed that the brain circuitry was actively reorganizing itself to adapt.

“It was extraordinary to see that when we switched the animal from joystick control to brain control, the physiological properties of the brain cells changed immediately. And when we switched the animal back to joystick control the very next day, the properties changed again.

“Such findings tell us that the brain is so amazingly adaptable that it can incorporate an external device into its own ‘neuronal space’ as a natural extension of the body,” said Nicolelis. “Actually, we see this every day, when we use any tool, from a pencil to a car. As we learn to use that tool, we incorporate the properties of that tool into our brain, which makes us proficient in using it.” Nicolelis said such findings of brain plasticity in mature animals and humans contrast sharply with traditional views that the brain is plastic enough to allow for such adaptation only in childhood.

According to Nicolelis, the finding that their brain-machine interface system can work in animals will have direct application to clinical development of neuroprosthetic devices for paralyzed people.

“There is certainly a great deal of science and engineering to be done to develop this technology and to create systems that can be used safely in humans,” he said. “However, the results so far lead us to believe that these brain-machine interfaces hold enormous promise for restoring function to paralyzed people.”

The researchers are already conducting preliminary studies of human subjects, in which they are analyzing brain signals to determine whether those signals correlate with those seen in the animal models. They are also exploring techniques to increase the longevity of the electrodes beyond the two years they have currently achieved in animal studies.

Henriquez and the research team’s other biomedical engineers from Duke’s Pratt School of Engineering are also working to miniaturize the components, to create wireless interfaces and to develop different grippers, wrists and other mechanical components of a neuroprosthetic device.

And in their animal studies, the scientists are proceeding to add another source of feedback to the system, in the form of a small vibrating device placed on the animal’s side that will tell the animal about another property of the robot.

Beyond the promise of neuroprosthetic devices, said Nicolelis, the technology for recording and analyzing signals from large electrode arrays in the brain will offer an unprecedented insight into brain function and plasticity.

“We have learned in our studies that this approach will offer important insights into how the large-scale circuitry of the brain works,” he said. “Since we have total control of the system, for example, we can change the properties of the robot arm and watch in real time how the brain adapts.”

Media Contact

All latest news from the category: Life Sciences and Chemistry

Articles and reports from the Life Sciences and chemistry area deal with applied and basic research into modern biology, chemistry and human medicine.

Valuable information can be found on a range of life sciences fields including bacteriology, biochemistry, bionics, bioinformatics, biophysics, biotechnology, genetics, geobotany, human biology, marine biology, microbiology, molecular biology, cellular biology, zoology, bioinorganic chemistry, microchemistry and environmental chemistry.

Newest articles

Silicon Carbide Innovation Alliance to drive industrial-scale semiconductor work

Known for its ability to withstand extreme environments and high voltages, silicon carbide (SiC) is a semiconducting material made up of silicon and carbon atoms arranged into crystals that is…

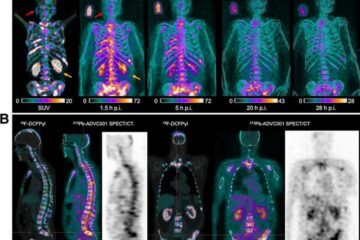

New SPECT/CT technique shows impressive biomarker identification

…offers increased access for prostate cancer patients. A novel SPECT/CT acquisition method can accurately detect radiopharmaceutical biodistribution in a convenient manner for prostate cancer patients, opening the door for more…

How 3D printers can give robots a soft touch

Soft skin coverings and touch sensors have emerged as a promising feature for robots that are both safer and more intuitive for human interaction, but they are expensive and difficult…