The Internet Was Delivered to the Masses; Parallel Computing Is Not Far Behind

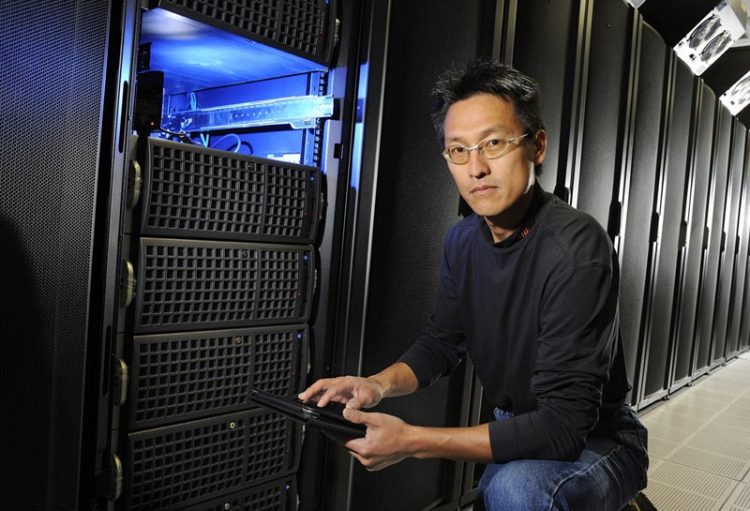

Virginia Tech College of Engineering Professor Wu Feng has garnered support from the NSF, Microsoft, the Air Force, and the NIH, among others, for work that, taken together, offers a way to deliver parallel computing to the masses, much as the Internet brought information within worldwide reach.

As he wove together the “parallel computing” aspects of each grant, he was able to tell a much larger, more interconnected story: one of delivering parallel computing to the masses. In doing so, he has worked to apply this democratization of parallel computing to an area of emerging importance: the promise of personalized medicine.

Microsoft took particular notice of Feng’s leadership in this cutting-edge research and worked the supercomputing expert’s collaborative ideas into one of its global advertising campaigns, describing Virginia Tech scientists and engineers as “leaders in harnessing supercomputer powers to deliver lifesaving treatments.”

This full-page ad ran this summer in the Washington Post, New York Times, USA Today, Wall Street Journal, Bloomberg Businessweek, United Hemispheres, The Economist, Forbes, Fortune, TIME, Popular Mechanics, and Golf Digest, as well as a host of other venues in Philadelphia, Washington, D.C., and Baltimore.

“Delivering personalized medicine to the masses is just one of the grand challenge problems facing society,” said Feng, the Elizabeth and James E. Turner Fellow in Virginia Tech’s Department of Computer Science (http://www.cs.vt.edu/).

“To accelerate the discovery to such grand challenge problems requires more than the traditional pillars of scientific inquiry, namely theory and experimentation. It requires computing. Computing has become our ‘third pillar’ of scientific inquiry, complementing theory and experimentation. This third pillar can empower researchers to tackle problems previously viewed as infeasible.”

So, if computing faster and more efficiently holds the promise of accelerating discovery and innovation, why can’t we simply build faster and faster computers to tackle these grand challenge problems?

“In short, with the rise of ‘big data’, data is being generated faster than our ability to compute on it,” said Feng. “For instance, next-generation sequencers (NGS) double the amount of data generated every eight to nine months while our computational capability doubles only every 24 months, relative to Moore’s Law. Clearly, tripling our institutional computational resources every eight months is not a sustainable solution… and clearly not a fiscally responsible one either. This is where parallel computing in the cloud comes in.”
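As a rough illustration of the gap Feng describes, assume data volume D(t) doubles every 8 months (the faster end of the quoted range) and computational capability C(t) doubles every 24 months, with both normalized to 1 at t = 0 and t measured in months. These exponential growth models are simplifying assumptions for illustration, not figures from the study:

```latex
\begin{aligned}
D(t) &= 2^{t/8}, \qquad C(t) = 2^{t/24} \\
\frac{D(t)}{C(t)} &= 2^{t/8 - t/24} = 2^{t/12} \\
\frac{D(8)}{C(8)} &= 2^{2/3} \approx 1.59, \qquad \frac{D(24)}{C(24)} = \frac{8}{2} = 4
\end{aligned}
```

Under these assumptions, the shortfall itself doubles every 12 months: after two years, the data has grown eightfold while computing capability has only doubled.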

As defined by the National Institute of Standards and Technology (NIST), cloud computing is “a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.”

“The implicit takeaway here is that the configurable computing resources are hosted and maintained by cloud providers such as Microsoft rather than by the institution requiring the computing resources. So, rather than having an institution set up, maintain, and support an information technology infrastructure that is seldom utilized anywhere near its capacity… and having to triple these resources every eight to nine months to keep up with the data deluge of next-generation sequencing, cloud computing is a viable and more cost-effective avenue for accessing necessary computational resources on the fly and then releasing them when not needed,” Feng said.

Whether for traditional high-performance computing or cloud computing, Feng is seeking to transform the way that parallel computing systems and environments are designed and the way that people interact with them.

“My analogy would be the Internet, and how it has transformed the way people interact with information,” Feng added. “We need to make a similar transition with parallel computing, whether with the cloud or with traditional high-performance computing such as supercomputers.”

The groundwork for Feng’s big data research in a “cloud” began in the mid-2000s with a multi-institutional effort to identify missing gene annotations in genomes. This effort combined supercomputers from six U.S. institutions into an ad-hoc cloud and generated 0.5 petabytes of data that could only be stored in Tokyo, Japan.

By combining mpiBLAST, software Feng developed, with ParaMEDIC (Parallel Metadata Environment for Distributed I/O and Computing), his ad-hoc ‘big data’ framework, he and his colleagues successfully addressed an important problem in genomics via supercomputing. Simply by changing the software infrastructure to exploit parallel computing in their ad-hoc cloud, they reduced the time it took to identify missing gene annotations and store them remotely in Japan from more than three years to two weeks.

This work is now being formalized and extended as part of an NSF/Microsoft Computing in the Cloud grant that seeks to commoditize biocomputing in the cloud.

The advent of NGS, on the heels of the above “missing genes” work, created a larger “big data” problem that resulted in an interdisciplinary grant from the NVIDIA Foundation to Compute the Cure (for cancer) and in the creation of the Open Genomics Engine, which was presented in a talk at the 2012 GPU (graphics processing unit) Technology Conference. Other related “big data” biocomputing tools that Feng and his group have created include Burrows-Wheeler Aligner (BWA)-Multicore, SeqInCloud, cuBLASTP, and GPU-RMAP. In turn, this research provided the basis for the recent $1 million NSF-NIH BIGDATA grant on parallel computing for next-generation sequencing in the life sciences.


The research from the above grants has provided the impetus for a new center at Virginia Tech, Synergistic Environments for Experimental Computing (SEEC), co-funded by Virginia Tech’s Institute for Critical Technology and Applied Science (ICTAS, http://www.ictas.vt.edu/), the Office of Information Technology, and the Department of Computer Science. This center seeks to democratize parallel computing by synergistically co-designing algorithms, software, and hardware to accelerate discovery and innovation. As the founder and director of SEEC, Feng is concentrating on five areas for synergistic computing: cyber-physical systems, where computing and physical systems intersect; health and life sciences, including the medical sciences; business and financial analytics; cybersecurity; and scientific simulation.

The members of the center represent some 20 different disciplines in seven different colleges and three institutes at Virginia Tech.

Feng, who also holds courtesy appointments in electrical and computer engineering (http://www.ece.vt.edu/) and in health sciences (http://www.fhs.vt.edu/), has garnered more than $32 million in research funding during his career. Among his achievements, he founded the Supercomputing in Small Spaces project (http://sss.cs.vt.edu/) in 2001, which created Green Destiny, a 240-node, energy-efficient supercomputer that could fit inside a closet.

These achievements led to the founding of the Green500, a project that identifies the greenest supercomputers in the world, and more recently, HokieSpeed, a computing resource for science and engineering that debuted as the greenest commodity supercomputer in the U.S. in 2011.

HPCwire has twice named him to its Top People to Watch list, in 2004 and 2011.

Contact Information

Lynn Nystrom

Director of News

tansy@vt.edu

Phone: 540-231-4371
