The Brain: Key to a Better Computer

“Today’s computers are wonderful at bookkeeping and solving scientific problems often described by partial differential equations, but they’re horrible at just using common sense, seeing new patterns, dealing with ambiguity and making smart decisions,” said John Wagner, cognitive sciences manager at Sandia National Laboratories.

In contrast, the brain is “proof that you can have a formidable computer that never stops learning, operates on the power of a 20-watt light bulb and can last a hundred years,” he said.

Although brain-inspired computing is in its infancy, Sandia has included it in a long-term research project whose goal is future computer systems. Neuro-inspired computing seeks to develop algorithms that would run on computers that function more like a brain than a conventional computer.

“We’re evaluating what the benefits would be of a system like this and considering what types of devices and architectures would be needed to enable it,” said microsystems researcher Murat Okandan.

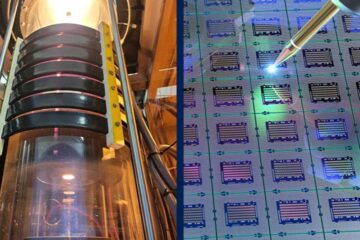

Sandia’s facilities and past research make the laboratories a natural for this work: its Microsystems & Engineering Science Applications (MESA) complex, a fabrication facility that can build massively interconnected computational elements; its computer architecture group and its long history of designing and building supercomputers; strong cognitive neurosciences research, with expertise in such areas as brain-inspired algorithms; and its decades of work on nationally important problems, Wagner said.

New technology often is spurred by a particular need. Early conventional computing grew from the need for neutron diffusion simulations and weather prediction. Today, big data problems and remote autonomous and semiautonomous systems need far more computational power and better energy efficiency.

Neuro-inspired computers would be ideal for robots, remote sensors

Neuro-inspired computers would be ideal for operating such systems as unmanned aerial vehicles, robots and remote sensors, and for solving big data problems, such as those the cyber world faces and the analysis of transactions whizzing around the world, “looking at what’s going where and for what reason,” Okandan said.

Such computers would be able to detect patterns and anomalies, sensing what fits and what doesn’t. Perhaps the computer wouldn’t find the entire answer, but could wade through enormous amounts of data to point a human analyst in the right direction, Okandan said.

“If you do conventional computing, you are doing exact computations and exact computations only. If you’re looking at neurocomputation, you are looking at history, or memories in your sort of innate way of looking at them, then making predictions on what’s going to happen next,” he said. “That’s a very different realm.”

Modern computers are largely calculating machines with a central processing unit and memory that stores both a program and data. They take a command from the program and data from the memory to execute the command, one step at a time, no matter how fast they run. Parallel and multicore computers can do more than one thing at a time but still use the same basic approach and remain very far removed from the way the brain routinely handles multiple problems concurrently.
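The step-by-step cycle described above can be illustrated with a toy stored-program machine. This is only a minimal sketch: the opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator and the memory layout are hypothetical choices made for illustration, not any real processor's instruction set.

```python
# Toy stored-program machine. The opcodes and memory layout are
# invented for illustration and do not model a real processor.

def run(memory):
    """Execute a program held in the same memory as its data, one step at a time."""
    pc = 0     # program counter: address of the next instruction
    acc = 0    # accumulator: the single working register
    steps = 0  # number of instructions executed
    while True:
        op, addr = memory[pc]  # fetch the command from memory
        pc += 1
        steps += 1
        if op == "LOAD":       # copy a data word into the accumulator
            acc = memory[addr]
        elif op == "ADD":      # add a data word to the accumulator
            acc += memory[addr]
        elif op == "STORE":    # write the accumulator back to memory
            memory[addr] = acc
        elif op == "HALT":
            return memory, steps
        else:
            raise ValueError(f"unknown opcode: {op}")

# Program (addresses 0-3) and data (addresses 5-7) share one memory.
memory = [
    ("LOAD", 5),
    ("ADD", 6),
    ("STORE", 7),
    ("HALT", 0),
    None,  # unused
    2,     # first operand
    3,     # second operand
    0,     # result goes here
]

final_memory, steps = run(memory)
print(final_memory[7], steps)  # prints "5 4"
```

Adding two numbers takes four sequential steps here, and a faster clock would shorten each step without changing that serial structure.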

The architecture of neuro-inspired computers would be fundamentally different, uniting processing and storage in a network architecture “so the pieces that are processing the data are the same pieces that are storing the data, and the data will be processed with all nodes functioning concurrently,” Wagner said. “It won’t be a serial step-by-step process; it’ll be this network processing everything all at the same time. So it will be very efficient and very quick.”
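One way to picture pieces that both store and process data is a small Hopfield-style associative memory, a classic textbook model. It is used here purely as an illustration and is not Sandia's architecture. In this sketch the weights between nodes hold the stored pattern, and the same weights drive the computation. The function names and the eight-node pattern are assumptions, and the "concurrent" update is only simulated in ordinary serial Python.

```python
# Hopfield-style associative memory, used only as an illustration of
# "the pieces that process the data are the pieces that store it".
# Function names and the example pattern are hypothetical.

def train(patterns):
    """Store patterns of +1/-1 values in a weight matrix (Hebbian rule)."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def step(w, state):
    """Compute every node's next value from the previous state, all at once."""
    new_state = []
    for i, row in enumerate(w):
        total = sum(w_ij * s_j for w_ij, s_j in zip(row, state))
        if total == 0:
            new_state.append(state[i])  # no net input: keep the old value
        else:
            new_state.append(1 if total > 0 else -1)
    return new_state

def recall(w, state, max_steps=10):
    """Repeat whole-network updates until the state stops changing."""
    for _ in range(max_steps):
        next_state = step(w, state)
        if next_state == state:
            break
        state = next_state
    return state

stored = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([stored])

noisy = stored.copy()
noisy[0] = -noisy[0]  # corrupt one element of the pattern

recalled = recall(weights, noisy)
print(recalled == stored)  # prints "True"
```

The network has no separate memory to fetch the pattern from: the corrupted input is repaired by the same connections that hold the pattern. Synchronous updates of this kind can oscillate for some inputs, which is why the sketch caps the number of steps.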

Unlike today’s computers, neuro-inspired computers would inherently use the critical notion of time. “The things that you represent are not just static shots, but they are preceded by something and there’s usually something that comes after them,” creating episodic memory that links what happens when. This requires massive interconnectivity and a unique way of encoding information in the activity of the system itself, Okandan said.

More neurosciences research opens more possibilities for brain-inspired computing

Each neuron in a neural structure can have connections coming in from about 10,000 neurons, which in turn can connect to 10,000 other neurons in a dynamic way. Conventional computer transistors, on the other hand, connect on average to four other transistors in a static pattern.

Computer design has drawn from neuroscience before, but an explosion in neuroscience research in recent years opens more possibilities. While it’s far from a complete picture, Okandan said what’s known offers “more guidance in terms of how neural systems might be representing data and processing information” and clues about replicating those tasks in a different structure to address problems impossible to solve on today’s systems.

Brain-inspired computing isn’t the same as artificial intelligence, although a broad definition of artificial intelligence could encompass it.

“Where I think brain-inspired computing can start differentiating itself is where it really truly tries to take inspiration from biosystems, which have evolved over generations to be incredibly good at what they do and very robust against a component failure. They are very energy efficient and very good at dealing with real-world situations. Our current computers are very energy inefficient, they are very failure-prone due to components failing and they can’t make sense of complex data sets,” Okandan said.

Computers today do required computations without any sense of what the data is — it’s just a representation chosen by a programmer.

“Whereas if you think about neuro-inspired computing systems, the structure itself will have an internal representation of the datastream that it’s receiving and previous history that it’s seen, so ideally it will be able to make predictions on what the future states of that datastream should be, and have a sense for what the information represents,” Okandan said.
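The idea of predicting a datastream's future states from its history can be sketched, very loosely, with a model that counts which symbol has followed which. This is a deliberately simple stand-in, not a neuro-inspired system. The class name `StreamPredictor`, its methods and the example stream are all hypothetical.

```python
from collections import Counter, defaultdict

class StreamPredictor:
    """Hypothetical sketch: remember which symbol followed which, then predict."""

    def __init__(self):
        # For each symbol, count the symbols that have followed it so far.
        self.transitions = defaultdict(Counter)
        self.previous = None

    def observe(self, symbol):
        """Fold one new element of the stream into the stored history."""
        if self.previous is not None:
            self.transitions[self.previous][symbol] += 1
        self.previous = symbol

    def predict_next(self):
        """Return the most frequent successor of the latest symbol, if any."""
        counts = self.transitions.get(self.previous)
        if not counts:
            return None
        return counts.most_common(1)[0][0]

    def is_surprising(self, symbol):
        """True if this symbol has never followed the latest symbol before."""
        counts = self.transitions.get(self.previous)
        return bool(counts) and symbol not in counts

predictor = StreamPredictor()
for symbol in "ABCABCABCAB":
    predictor.observe(symbol)

prediction = predictor.predict_next()
surprising = predictor.is_surprising("A")
print(prediction, surprising)  # prints "C True"
```

After seeing the repeating stream, the model expects "C" to follow "B" and flags "A" as something that does not fit. That is the same kind of pattern and anomaly judgment the article describes, at a vastly smaller scale.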

He estimates a project dedicated to brain-inspired computing will develop early examples of a new architecture in the first several years, but said higher levels of complexity could take decades, even with the many efforts around the world working toward the same goal.

“The ultimate question is, ‘What are the physical things in the biological system that let you think and act, what’s the core essence of intelligence and thought?’ That might take just a bit longer,” he said.

For more information, visit the 2014 Neuro-Inspired Computational Elements Workshop website.

Sandia National Laboratories is a multi-program laboratory operated by Sandia Corporation, a wholly owned subsidiary of Lockheed Martin Corp., for the U.S. Department of Energy’s National Nuclear Security Administration. With main facilities in Albuquerque, N.M., and Livermore, Calif., Sandia has major R&D responsibilities in national security, energy and environmental technologies and economic competitiveness.

More Information:

http://www.sandia.gov

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

Silicon Carbide Innovation Alliance to drive industrial-scale semiconductor work

Known for its ability to withstand extreme environments and high voltages, silicon carbide (SiC) is a semiconducting material made up of silicon and carbon atoms arranged into crystals that is…

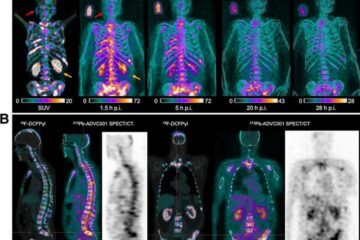

New SPECT/CT technique shows impressive biomarker identification

…offers increased access for prostate cancer patients. A novel SPECT/CT acquisition method can accurately detect radiopharmaceutical biodistribution in a convenient manner for prostate cancer patients, opening the door for more…

How 3D printers can give robots a soft touch

Soft skin coverings and touch sensors have emerged as a promising feature for robots that are both safer and more intuitive for human interaction, but they are expensive and difficult…