Sandia-designed supercomputer ranked world’s most efficient in two of six categories

Clear skies for Red Storm

A new series of measurements, the next step in the evolution of criteria for determining supercomputer efficiency more accurately, has rated Sandia National Laboratories’ Red Storm computer the best in the world in two of six new categories and near the top in two other important categories.

Red Storm had previously been ranked the world’s 6th fastest computer on the older but more widely accepted Linpack test.

The two first-place benchmarks measure how efficiently the computer keeps track of data scattered through its memory (the “random access” test) and how efficiently it communicates data between processors. This is the equivalent of how well a good basketball team works its offense, rapidly passing the ball to score against an opponent.

Red Storm has already modeled how much explosive power it would take to destroy an asteroid headed for Earth, how a raging fire would affect critical components in a variety of devices, and how changes in the composition of Earth’s atmosphere affect the planet. These models are in addition to the basic stockpile calculations the machine was designed to address.

Sandia is a National Nuclear Security Administration (NNSA) laboratory.

An unusual feature of Red Storm’s architecture is that the computer can move between classified and unclassified work with the throw of a few switches. The transfer is secure and requires no movement of disks; there are no hard drives in any Red Storm processing cabinet. Part or all of the machine can be temporarily devoted to a science problem and then switched back to national security work.

The capability of the machine to put its entire computing weight behind single large jobs enabled one Sandia researcher to get an entire year’s worth of calculations done in a month.

Red Storm’s architecture was designed by Sandia computer specialists Jim Tomkins and Bill Camp. The pair’s work has helped Sandia partner Cray Inc. sell 15 copies of the supercomputer in various sizes to U.S. government agencies and universities, and customers in Canada, England, Switzerland, and Japan.

Cray holds licenses from Sandia to reproduce Red Storm architecture and some system software, says Tomkins. “The operating system was written here, but the IO [input-output] is Cray’s,” he says.

How to measure a supercomputer’s performance

In the early 1990s, supercomputer manufacturers distinguished their products by announcing Theoretical Peak numbers. This figure represented how fast a computer with many processors working in parallel could run if every processor worked perfectly and in unison. The number was best considered a hopeful estimate.
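
Theoretical Peak is simple multiplication: processors times clock rate times the floating-point operations each processor can complete per clock cycle. The sketch below uses made-up figures (the processor count, clock rate, flops per cycle, and measured rate are hypothetical, not Red Storm’s) to show how a measured rate is compared against that ceiling.

```python
def theoretical_peak_flops(processors: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Peak rate if every processor retires its maximum flops on every clock cycle."""
    return processors * clock_hz * flops_per_cycle


def efficiency(measured_flops: float, peak_flops: float) -> float:
    """Fraction of the theoretical peak that a real run actually achieved."""
    return measured_flops / peak_flops


# Hypothetical machine (not Red Storm): 10,000 processors at 2 GHz, 2 flops per cycle.
peak = theoretical_peak_flops(10_000, 2.0e9, 2)
print(f"theoretical peak: {peak / 1e12:.0f} teraflops")
# Hypothetical measured benchmark rate of 30 teraflops.
print(f"achieved: {efficiency(30e12, peak):.0%} of peak")
```

Real applications rarely keep every processor busy on every cycle, which is why the peak figure was only ever a hopeful upper bound.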

Next came the Linpack benchmark, which gave a supercomputer a real but relatively simple problem to solve: a large, dense system of linear equations. Since 1993, those interested in supercomputers have watched for the new Linpack numbers, published every six months, that rank the 500 fastest computers in the world. For several years, the fastest was Sandia’s ASCI Red supercomputer.
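
The idea behind a Linpack-style measurement can be sketched at toy scale: time the solution of a random dense system by Gaussian elimination, then divide an approximate operation count, (2/3)n³ + 2n², by the elapsed time. This is a pure-Python illustration under our own assumptions (function names, matrix size, and random inputs are hypothetical); the real benchmark runs blocked, distributed, heavily tuned code.

```python
import random
import time


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (pure Python)."""
    n = len(a)
    # Build an augmented copy so the caller's matrix and vector are untouched.
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for k in range(n):
        # Partial pivoting: swap up the row with the largest |entry| in column k.
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= f * m[k][j]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def linpack_style_rate(n: int, seed: int = 0) -> float:
    """Time one solve of a random n x n system and return flops per second."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1, 1) for _ in range(n)]
    start = time.perf_counter()
    solve(a, b)
    elapsed = time.perf_counter() - start
    flops = (2 / 3) * n**3 + 2 * n**2  # approximate operation count
    return flops / elapsed


print(f"{linpack_style_rate(120) / 1e6:.1f} megaflops (pure Python, n=120)")
```

Because the work is dominated by regular, predictable arithmetic on a dense matrix, a machine can score well here without being good at moving scattered data, which is the limitation the next paragraph describes.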

More recently, the limitations of this approach have encouraged the Linpack founders, working with supercomputer manufacturers, to develop still more realistic tests. These indicate how well supercomputers handle essential functions, such as passing the large amounts of data between processors that real-world problems require.

It is in this revised series of tests, called the High Performance Computing Challenge (HPCC) test suite, that Sandia’s Red Storm supercomputer — funded by NNSA’s Advanced Simulation and Computing program — has done extremely well.

“Suppose your computer is modeling a car crash,” says Sandia Computing and Network Services director Rob Leland, offering an example of a complicated problem. “You’re doing calculations about when the windshield is going to break. And then the hood goes through it. This is a very discontinuous event. Out of the blue, something else enters the picture dramatically.

“This is the fundamental problem that Sandia solved in Red Storm: how to monitor what’s coming at you in every stage of your calculations,” he says. “You need very good communications infrastructure, because the information is concise, very intense. You need a lot of bandwidth and low latency to be able to transmit a lot of information with minimum delays. And because the incoming information is very unpredictable, you have to be aware in every direction.”
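
Leland’s distinction between bandwidth and latency can be made concrete with the common latency-bandwidth cost model, in which sending a message costs a fixed startup delay plus its size divided by link bandwidth. The latency and bandwidth values below are hypothetical round numbers, not Red Storm measurements.

```python
def transfer_time(message_bytes: int, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Latency-bandwidth model of one point-to-point message: startup delay + size / rate."""
    return latency_s + message_bytes / bandwidth_bytes_per_s


LATENCY = 5e-6      # hypothetical: 5 microseconds per message
BANDWIDTH = 2e9     # hypothetical: 2 GB/s per link

for size in (8, 8_000, 8_000_000):
    t = transfer_time(size, LATENCY, BANDWIDTH)
    latency_share = LATENCY / t
    print(f"{size:>9} bytes: {t * 1e6:9.1f} us, {latency_share:.0%} of it latency")
```

Small, unpredictable messages (the “discontinuous events” in a crash simulation) are dominated by latency, while bulk transfers are dominated by bandwidth, so a balanced machine needs both to be good.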

To David Womble, acting director of Computation, Computers, and Math at Sandia, “The question is [similar to] how much traffic can you move how fast through crowded city streets.” Red Storm, he says, does so well because it has “a balance that doesn’t exist in other machines between communication bandwidth [the ability of a processor to get data it needs from anywhere in the machine quickly] and floating point computation [how fast each processor can do the additions, multiplications and other operations it needs to do in solving problems].”

More technically, Red Storm posted 1.8 TB/sec (1.8 trillion bytes per second) on one HPCC test: an interconnect bandwidth challenge called PTRANS, for parallel matrix transpose. This test, requiring repeated “reads,” “stores,” and communications among processors, is a measure of the total communication capacity of the internal interconnect. Sandia’s achievement in this category represents 40 times more communications power per teraflop (trillion floating point operations per second) than the PTRANS result posted by IBM’s Blue Gene system that has more than 10 times as many processors.
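
Why a matrix transpose exercises the interconnect can be seen in a simplified layout, assumed here for illustration: an n × n matrix is cut into a p × p grid of blocks, one per process. Transposing moves block (i, j) to position (j, i), so every off-diagonal block must travel to another process. The helper names are hypothetical; this shows the communication pattern, not the HPCC PTRANS code.

```python
def split(matrix, p):
    """Cut an n x n matrix into a p x p grid of blocks; block (i, j) lives on process (i, j)."""
    b = len(matrix) // p  # assumes p divides n evenly
    return {(i, j): [row[j * b:(j + 1) * b] for row in matrix[i * b:(i + 1) * b]]
            for i in range(p) for j in range(p)}


def block_transpose(blocks):
    """Transpose a block-distributed matrix and count blocks that change owner."""
    result, moved = {}, 0
    for (i, j), blk in blocks.items():
        # Block (i, j) of A, transposed locally, becomes block (j, i) of A^T.
        result[(j, i)] = [list(col) for col in zip(*blk)]
        if i != j:
            moved += 1  # off-diagonal blocks must cross the interconnect
    return result, moved


def join(blocks, p):
    """Reassemble a p x p grid of blocks into one list-of-rows matrix."""
    rows = []
    for i in range(p):
        for r in range(len(blocks[(i, 0)])):
            rows.append([x for j in range(p) for x in blocks[(i, j)][r]])
    return rows


p = 4
a = [[8 * r + c for c in range(8)] for r in range(8)]
t, moved = block_transpose(split(a, p))
assert join(t, p) == [list(col) for col in zip(*a)]
print(f"{moved} of {p * p} blocks crossed between processes")
```

With a 4 × 4 grid, 12 of the 16 blocks move; in general the fraction is (p² − p)/p², which approaches 1 as the machine grows, so nearly all of the matrix crosses the network.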

Red Storm is the first computer to surpass the 1 terabyte-per-second (1 TB/sec) performance mark measuring communications among processors — a measure that indicates the capacity of the network to communicate when dealing with the most complex situations.

The “random access” benchmark checks performance in moving individual data items rather than large arrays of data. Moving individual items quickly means that the computer can handle chaotic situations efficiently.
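
A random-access test can be sketched as a loop that XORs pseudo-random 64-bit values into pseudo-random slots of a large table, reported in giga-updates per second (GUPS). The generator below is modeled on the shift-register style used by the HPCC RandomAccess kernel, but the seed, table size, and function names are our own assumptions, and pure Python is far slower than the real benchmark.

```python
import time

POLY = 0x7                 # feedback taps (assumed, modeled on HPCC RandomAccess)
MASK64 = (1 << 64) - 1     # keep values to 64 bits, like an unsigned machine word


def next_random(ran: int) -> int:
    """Advance a 64-bit shift-register pseudo-random sequence by one step."""
    top_bit = ran >> 63
    return ((ran << 1) & MASK64) ^ (POLY if top_bit else 0)


def random_access(table: list, updates: int, seed: int = 0x123456789ABCDEF) -> list:
    """XOR pseudo-random values into pseudo-random slots of table (length a power of two)."""
    mask = len(table) - 1
    ran = seed  # hypothetical starting value
    for _ in range(updates):
        ran = next_random(ran)
        # Low bits choose a slot anywhere in the table, so caches and
        # prefetchers have no locality to exploit.
        table[ran & mask] ^= ran
    return table


table = list(range(1 << 16))
n_updates = 1 << 18
start = time.perf_counter()
random_access(table, n_updates)
elapsed = time.perf_counter() - start
print(f"{n_updates / elapsed / 1e9:.6f} GUPS (billions of updates per second)")
```

Because XOR is its own inverse, running the same sequence of updates a second time restores the original table, which makes a cheap correctness check.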

Red Storm also did very well in categories it did not win, finishing second in the world behind Blue Gene in FFT (the Fast Fourier Transform, a method of converting data into its frequency components, a form that is often easier to work with) and third behind Purple and Blue Gene in the “streams” category (a measure of total memory bandwidth). Higher memory bandwidth helps keep processors from being starved for data.
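
The transform itself can be illustrated with the textbook recursive radix-2 Cooley-Tukey FFT, checked against a direct discrete Fourier transform. This is a minimal single-processor sketch with hypothetical function names; a benchmark-scale FFT spreads the data across many processors, which typically requires exchanging data among them and so also stresses the network.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def dft(x):
    """Direct O(n^2) discrete Fourier transform, used only to check fft()."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


# A cosine completing 3 cycles over 16 samples: its energy lands in bins 3 and 13.
signal = [math.cos(2 * math.pi * 3 * t / 16) for t in range(16)]
spectrum = fft(signal)
print([round(abs(c), 3) for c in spectrum])
```

The recursion halves the problem at each level, giving O(n log n) work instead of the O(n²) of the direct transform.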

The two remaining tests involve the effectiveness of individual chips, rather than overall computer design.

When Tomkins normalized the benchmarks by dividing each result by the machine’s Linpack speed, Red Storm had the best ratio. By this measure, Red Storm was the best balanced of all the supercomputers for doing real work.
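
The normalization is a per-teraflop ratio: each benchmark result divided by the same machine’s Linpack speed. The two machines and all their figures below are hypothetical, chosen only to show how a machine that is slower on Linpack can still come out better balanced.

```python
def normalize(results: dict, linpack_tflops: float) -> dict:
    """Divide each benchmark result by the machine's Linpack speed (result per teraflop)."""
    return {name: value / linpack_tflops for name, value in results.items()}


# Hypothetical figures for illustration only; these are not published HPCC results.
machines = {
    "machine_a": (40.0, {"ptrans_tb_per_s": 2.0, "random_access_gups": 1.2}),
    "machine_b": (120.0, {"ptrans_tb_per_s": 3.0, "random_access_gups": 1.5}),
}

for name, (linpack, results) in machines.items():
    ratios = normalize(results, linpack)
    print(name, {k: round(v, 4) for k, v in ratios.items()})
```

In this made-up example, machine_b is three times faster on Linpack, yet machine_a delivers twice as much interconnect bandwidth per teraflop, the kind of imbalance the normalization is meant to expose.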

Media Contact

More Information:

http://www.sandia.govAll latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

Combatting disruptive ‘noise’ in quantum communication

In a significant milestone for quantum communication technology, an experiment has demonstrated how networks can be leveraged to combat disruptive ‘noise’ in quantum communications. The international effort led by researchers…

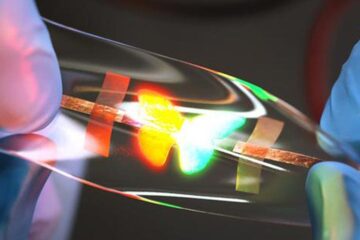

Stretchable quantum dot display

Intrinsically stretchable quantum dot-based light-emitting diodes achieved record-breaking performance. A team of South Korean scientists led by Professor KIM Dae-Hyeong of the Center for Nanoparticle Research within the Institute for…

Internet can achieve quantum speed with light saved as sound

Researchers at the University of Copenhagen’s Niels Bohr Institute have developed a new way to create quantum memory: A small drum can store data sent with light in its sonic…