Supercomputers to enable safer, more efficient oil drilling

Oil companies could soon harness the power of distant supercomputers to tackle problems such as where to place equipment and how to clean up oil spills.

For decades, the industry has used computers to maximize profit and minimize environmental impact, explained Tahsin Kurc, assistant professor of biomedical informatics at Ohio State University.

Typically, companies take seismic measurements of an oil reservoir and simulate drilling scenarios on a local computer. Now Kurc and his colleagues are developing a software system and related techniques to let supercomputers at different locations share the workload. The system runs simulations faster and in much greater detail – and enables analysis of very large amounts of data.

The scientists are employing the same tools and techniques that they use to connect computing resources in biomedical research. Whether they are working with images from digitized microscopes or MRI machines, their focus is on creating software systems that pull important information from the available data.

From that perspective, a seismic map of an oilfield isn't that different from a brain scan, Kurc said. Both involve complex analyses of large amounts of data.

In an oilfield, rock, water, oil and gas mingle in fluid pools underground that are hard to discern from the surface, and seismic measurements don’t tell the whole story.

Yet oil companies must couple those measurements to a computer model of how they can utilize the reservoir, so that they can accurately predict its output for years to come. And they can’t even be certain that they’re using exactly the right model for a field’s particular geology.

“You never know the exact properties of the reservoir, so you have to make some guesses,” Kurc said. “You have a lot of choices of what to do, so you want to run a lot of simulations.”

The same problems arise when a company wants to minimize its effects on the environment around the reservoir, or track the path of an oil spill.

Each simulation can require hours or even days on a PC, and generate tens of gigabytes (billions of bytes) of data. Oil companies have to greatly simplify their computer models to handle such large datasets.

Kurc and his Ohio State colleagues – Joel Saltz, professor and chair of the Department of Biomedical Informatics, assistant professor Umit Catalyurek, research programmer Benjamin Rutt and graduate student Xi Zhang – are developing technologies that spread that data across supercomputers at different institutions. In a recent issue of the journal Concurrency and Computation: Practice and Experience, they described a software program called DataCutter that divides data analysis tasks among networked computer systems.

This project is part of a larger collaboration with researchers at the University of Texas at Austin, Oregon State University, University of Maryland, and Rutgers University. The institutions joined together to utilize the TeraGrid network, which links supercomputer centers around the country for large-scale studies.

Programs like DataCutter are called “middleware,” because they link different software components. The goal, Kurc said, is to design middleware that works with a wide range of applications.

“We try to come up with commonalities between the applications in that class,” he said. “Do they have a similar way of querying the data, for instance? Then we develop algorithms and tools that will support that commonality.”

DataCutter coordinates how data is processed on the network, and filters the data for the end user.
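The article does not describe DataCutter's programming interface. Middleware of this kind is commonly organized as a chain of processing stages ("filters") connected by data streams, so that each stage can run on a different machine. The sketch below illustrates that general idea under stated assumptions: every name (`filter_stage`, `run_pipeline`, the saturation threshold, the sample data) is hypothetical, it is not DataCutter's API, and it runs the stages as threads on one computer rather than across networked supercomputers.

```python
import queue
import threading
from typing import Callable, Iterable, List, Optional

# Sentinel object marking the end of a data stream (hypothetical convention).
END = object()


def filter_stage(func: Callable[[object], Optional[object]],
                 inbox: "queue.Queue", outbox: "queue.Queue") -> None:
    """Apply func to each chunk from inbox; forward non-None results to outbox."""
    while True:
        item = inbox.get()
        if item is END:
            outbox.put(END)  # propagate end-of-stream to the next stage
            return
        result = func(item)
        if result is not None:  # a stage may drop a chunk by returning None
            outbox.put(result)


def run_pipeline(source: Iterable[object],
                 stages: List[Callable[[object], Optional[object]]]) -> List[object]:
    """Connect the stages with FIFO queues, run each in its own thread, collect output."""
    streams = [queue.Queue() for _ in range(len(stages) + 1)]
    threads = [threading.Thread(target=filter_stage,
                                args=(func, streams[i], streams[i + 1]))
               for i, func in enumerate(stages)]
    for t in threads:
        t.start()
    for chunk in source:  # feed the raw data chunks into the first stream
        streams[0].put(chunk)
    streams[0].put(END)
    results = []
    while True:  # drain the last stream, which holds the end user's filtered data
        item = streams[-1].get()
        if item is END:
            break
        results.append(item)
    for t in threads:
        t.join()
    return results


# Hypothetical data: chunks of (cell_id, oil_saturation) pairs from a simulation.
def keep_high_saturation(chunk):
    """Keep only cells with oil saturation above 0.5; drop empty chunks."""
    return [cell for cell in chunk if cell[1] > 0.5] or None


def count_cells(chunk):
    """Summarize a chunk by the number of cells it contains."""
    return len(chunk)


chunks = [[(0, 0.2), (1, 0.7)], [(2, 0.9), (3, 0.6)], [(4, 0.1)]]
print(run_pipeline(chunks, [keep_high_saturation, count_cells]))  # prints [1, 2]
```

Because each stage only reads from one stream and writes to the next, the stages could in principle be placed on different machines, with large raw data filtered close to where it is stored and only the reduced results sent on to the user.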

The researchers tested DataCutter with an oilfield simulation program developed at the University of Texas at Austin. They ran three different simulations over the TeraGrid: one to assess the economic value of an oilfield, one to locate sites of bypassed oil, and one to evaluate different production strategies – such as the placement of pumps and outlets in an oil field.

The source data came from simulation-based oilfield studies at the University of Texas at Austin. That data and the output data from the simulations were spread around three sites: the San Diego Supercomputer Center, the University of Maryland, and Ohio State.

Using distributed computers, the researchers were able to reduce the execution time of one simulation from days to hours, and another from hours to several minutes. But Kurc feels that speed isn't the only benefit oil companies would get from running their simulations on computing infrastructures such as TeraGrid. They would also have access to geological models and datasets at member institutions, which could boost the accuracy of their simulations.

The National Science Foundation funded this project to produce publicly available, open-source software for industry and academia; potential users can download the software under an open-source license and use it in their projects.

More Information:

http://www.osu.eduAll latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

Properties of new materials for microchips

… can now be measured well. Reseachers of Delft University of Technology demonstrated measuring performance properties of ultrathin silicon membranes. Making ever smaller and more powerful chips requires new ultrathin…

Floating solar’s potential

… to support sustainable development by addressing climate, water, and energy goals holistically. A new study published this week in Nature Energy raises the potential for floating solar photovoltaics (FPV)…

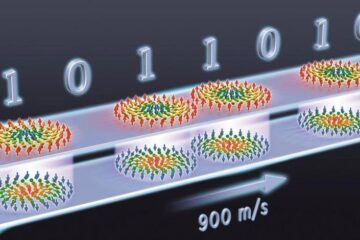

Skyrmions move at record speeds

… a step towards the computing of the future. An international research team led by scientists from the CNRS1 has discovered that the magnetic nanobubbles2 known as skyrmions can be…