New image sensor will show what the eyes see, and a camera cannot

Software behind the technology already finding its way into photo editing

Researchers are developing new technologies that may give robots the visual-sensing edge they need to monitor dimly lit airports, pilot vehicles in extreme weather and direct unmanned combat vehicles.

The researchers intend to create an imaging chip that defeats the harmful effects of arbitrary illumination, allowing robotic vision to leave the controlled lighting of a laboratory and enter the erratic lighting of the natural world. In a first step, the researchers have now developed software that simulates the chip circuitry, a program that alone is capable of uncovering hidden detail in existing images.

Designed by robot-vision expert Vladimir Brajovic and his colleagues at Intrigue Technologies, Inc., a spin-off of the team’s Carnegie Mellon University research, the new optical device will work more like a retina than a standard imaging sensor.

Just as neurons in the eye process information before sending signals to the brain, the pixels of the new device will “talk” to each other about what they see. The pixels will use the information to modify their behavior and adapt to lighting, ultimately gathering visual information even under adverse conditions.

Through an online demonstration, the simulator software plug-in, dubbed Shadow Illuminator, has processed more than 80,000 pictures from around the world. By balancing exposure across images, clearing away “noise” and improving contrast, the software revealed missing textures, exposed concealed individuals and even uncovered obscured features in medical X-ray film.

This new approach counters a persistent problem for computer-vision cameras: when capturing naturally lit scenes, a camera can be as much of an obstacle as it is a tool. Despite careful attention to shutter speeds and other settings, the brightly illuminated parts of the image are often washed out, and shadowy parts of the image appear completely black. The mathematical churning behind the pixels’ adaptation to lighting will allow them to “perceive” reflectance, a surface property that determines how much incoming light reflects off an object, light that a camera can capture.

Light illuminating an object helps reveal reflectance to a camera or an eye. However, illumination is a necessary evil, says Brajovic. “Most of the problems in robotic imaging can be traced back to having too much light in some parts of the image and too little in others,” he says, “and yet we need light to reveal the objects in a field of view.”
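The relationship between illumination and reflectance can be illustrated with a small sketch. This is not the researchers' chip circuitry or the Shadow Illuminator algorithm, neither of which is described here. It is a generic illustration that assumes a recorded image is roughly reflectance multiplied by illumination, and that illumination changes slowly from pixel to pixel, so a local average can stand in for it. The function names `local_mean` and `estimate_reflectance` and all numeric values are hypothetical.

```python
import numpy as np

def local_mean(img, radius):
    """Average each pixel with its neighbors in a (2*radius+1)^2 window."""
    padded = np.pad(img, radius, mode="edge")
    h, w = img.shape
    total = np.zeros((h, w), dtype=float)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            total += padded[dy:dy + h, dx:dx + w]
    return total / (2 * radius + 1) ** 2

def estimate_reflectance(image, radius=2, eps=1e-6):
    """Divide out a crude illumination estimate (assumption: the local
    average of the image tracks the slowly varying illumination)."""
    illumination = local_mean(image, radius)
    return image / (illumination + eps)

# Synthetic scene: a checkerboard surface (reflectance 0.2 or 0.8) lit by
# a strong left-to-right gradient, dim on the left and bright on the right.
size = 32
rows, cols = np.indices((size, size))
reflectance = np.where((rows + cols) % 2 == 0, 0.8, 0.2)
illumination = np.tile(np.linspace(0.05, 1.0, size), (size, 1))
observed = reflectance * illumination

recovered = estimate_reflectance(observed)

# Compare texture contrast (standard deviation) in the dim and bright sides.
print("observed  dim/bright contrast:",
      observed[:, :8].std() / observed[:, -8:].std())
print("recovered dim/bright contrast:",
      recovered[:, :8].std() / recovered[:, -8:].std())
```

In the observed image the checkerboard is far fainter on the dim side than on the bright side. After dividing by the local average, the pattern has similar contrast on both sides, which is the sense in which detail hidden in shadow can be brought out. The result matches the true reflectance only up to a scale factor.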

To produce images that appear uniformly illuminated, the researchers created a system that widens the range of light intensities a sensor can accommodate. According to Brajovic, limitations in standard imaging sensors have hindered many vision applications, such as security and surveillance, intelligent transportation systems, and defense systems – not to mention ruining a few cherished family photos.

The researchers hope the new technology will yield high-quality image data, despite natural lighting, and ultimately improve the reliability of machine-vision systems, such as those for biometric identification, enhanced X-ray diagnostics and space exploration imagers.

Additional comments from the researcher:

“The washed-out and underexposed images captured by today’s digital cameras are simply too confusing for machines to interpret, ultimately leading to failure of their vision systems in many critical applications.” – Vladimir Brajovic, Carnegie Mellon University and Intrigue Technologies, Inc.

“Often, when we take a picture with a digital or film camera, we are disappointed that many details we remember seeing appear in the image buried in deep shadows or washed out in overexposed regions. This is because our eyes have a built-in mechanism to adapt to local illumination conditions, while our cameras don’t. Because of this camera deficiency, robot vision often fails.” – Vladimir Brajovic

More Information:

http://www.nsf.govAll latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

Bringing bio-inspired robots to life

Nebraska researcher Eric Markvicka gets NSF CAREER Award to pursue manufacture of novel materials for soft robotics and stretchable electronics. Engineers are increasingly eager to develop robots that mimic the…

Bella moths use poison to attract mates

Scientists are closer to finding out how. Pyrrolizidine alkaloids are as bitter and toxic as they are hard to pronounce. They’re produced by several different types of plants and are…

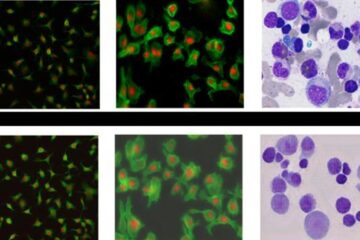

AI tool creates ‘synthetic’ images of cells

…for enhanced microscopy analysis. Observing individual cells through microscopes can reveal a range of important cell biological phenomena that frequently play a role in human diseases, but the process of…