Managing the data on the worldwide Grid

Did you just install some interesting new software on your PC, only to wonder where all your storage space has gone and why your PC seems to have ground to a halt? Imagine the same predicament magnified not thousands but millions of times, and you begin to see the problems confronting the world’s research community in cutting-edge projects, and in particular the challenges facing the DATAGRID project.

Most challenging processing applications on Earth

The partners in DATAGRID deal with what are probably the most demanding data processing applications on Earth. Some 21 partners, among them major European research institutions, face increasingly data-hungry applications of a kind that even supercomputers cannot handle.

As early as 2000, the European Commission (EC) launched the Grid series of projects as a solution: a means of interconnecting all the national research networks inside and outside Europe in order to share data processing and storage capacity for these most demanding applications.

DATAGRID is part of that solution. The project focuses on the middleware that is essential to handling the enormous amounts of data involved. The DATAGRID middleware ensures that the right processing resources are used, wherever they may be on the Grid, in an efficient and reliable way. Its task is to organise and integrate the disparate computational resources of the Grid into a coherent whole.

The partners in DATAGRID include world-leading European research institutions such as CERN, CNRS in France, INFN in Italy and PPARC in the UK. They have been joined by industrial partners, including the computing giant IBM and European IT companies such as Datamat in Italy and CSSI in France.

Quasi-production processing capability

Although the project is not due to finish until the end of March 2004, the partners have already largely achieved their objectives. This is no mean feat, according to DATAGRID project leader Fabrizio Gagliardi of CERN, the European Organisation for Nuclear Research. “Our most important single achievement is that we have successfully put together a real testbed on which we can demonstrate data processing applications at near-production quality.”

“We have applications in three domains where we show a quasi-production capability: particle physics, bioinformatics and Earth observation,” he continues. “All require processing and analysis of very large amounts of data. What do I mean by this? I mean making fifteen terabytes of online data storage available and working at any time, and offering the processing capability of more than two thousand CPUs.”

Particle physics provides a good illustration of the data needs of such applications. To explore fundamental particles and the forces between them, high-energy physics experiments are soon expected to produce as much as 10 petabytes of data per year, which dozens of universities around the world will need to analyse.

In the biosciences, ten years ago biologists were happy if they could simulate a single small molecule on a computer; now they expect to simulate thousands of candidate drug molecules to see how they interact with specific proteins. Unlocking the secrets of the human genome would also be impossible without computerised analysis of massive amounts of data, including the sequence of the three billion chemical units that make up human DNA, the genetic blueprint of our species.

In space research, scientists monitor atmospheric ozone levels using satellite observations; for this task alone they download about 100 gigabytes of raw images per day from space to the ground. In disaster response, governments and international organisations such as the UN and the World Bank stand to benefit greatly from the Grid’s potential for sharing archives and coordinating responses to the devastation caused by floods, fires and earthquakes.

A worldwide data capability

Like the Grid itself, the DATAGRID testbed is not limited to Europe. Its capabilities have been successfully tested at some 40 sites around the world, from Europe to North America, Russia, the Far East and the Antipodes. Its sister project DATATAG has broken the world record for data transmission speed, reaching a hitherto unheard-of 5.44 gigabits per second.

DATAGRID middleware follows an open-source software policy. Once the project is completed, the source code will be available for anyone to download, develop further and share the results with the worldwide software community.

As part of this software policy, the partners have also invested considerable resources in education. Training in the use of the software, practical tutorials on the testbed and road-shows have been given in locations as diverse as Pakistan, Taiwan and Poland, as well as within the EU. The Polish road-show, for example, was so successful that the project has been included in the curriculum of the computer science faculty of the University of Krakow.

Real challenge still to come

Challenging as its current tasks may be, the real test for the Grid is still to come. Starting in 2007, CERN’s new particle accelerator, the Large Hadron Collider (LHC), will come on stream. The LHC will be the largest scientific instrument in the world and will require the full capabilities of the Grid to handle the huge amounts of data it will generate.

Scientists around the world will be clamouring for access to the data from the LHC. The 10 petabytes of data it is expected to generate each year are equivalent to more than 1,000 times the amount of information printed in book form around the world every year, and to nearly 10 per cent of all the information humans produce on the planet annually.

The Grid network is the only resource capable of processing this immense quantity of information, and the DATAGRID partners are already planning a successor project, EGEE (Enabling Grids for E-science in Europe, www.eu-egee.org), to develop the 24/7 operation and call-centre support it will require.

Media Contact

More Information:

http://istresults.cordis.lu/All latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

Superradiant atoms could push the boundaries of how precisely time can be measured

Superradiant atoms can help us measure time more precisely than ever. In a new study, researchers from the University of Copenhagen present a new method for measuring the time interval,…

Ion thermoelectric conversion devices for near room temperature

The electrode sheet of the thermoelectric device consists of ionic hydrogel, which is sandwiched between the electrodes to form, and the Prussian blue on the electrode undergoes a redox reaction…

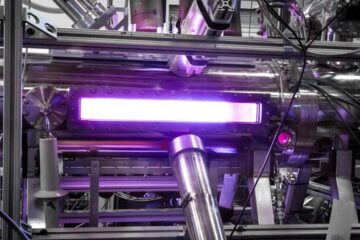

Zap Energy achieves 37-million-degree temperatures in a compact device

New publication reports record electron temperatures for a small-scale, sheared-flow-stabilized Z-pinch fusion device. In the nine decades since humans first produced fusion reactions, only a few fusion technologies have demonstrated…