Machine translation for the most part targets Internet and technical texts

At the invitation of the Department of Artificial Intelligence, Mike Dillinger has been giving a course on paraphrasing and text mining at the School of Computing. Dillinger considers that without clean and clear texts machine translation will not work well and that, despite technological advances, there will always be a demand for human translators to render legal or literary texts.

Machine translation, he adds, for the most part targets Internet and technical texts. MT’s Internet bias means that web content creators need to be trained to ensure that their documents are machine translatable.

– As an acclaimed expert in machine translation, how would you define the state of the art in this discipline?

The state of the art is rapidly changing. A far-reaching new approach was introduced fifteen to twenty years ago. At the time we faced a two-sided problem. First, it took a long time and a lot of money to develop the grammatical rules required to analyse the original sentence and the “transfer” or translation rules. Second, it looked to be impossible to manually account for the vast array of words and sentence types in documents.

The new approach uses statistical techniques to identify qualitatively simpler rules. This it does swiftly, automatically and on a massive scale, covering much more of the language. Similar techniques are used to identify terms and their possible translations. These are huge advances! Before, system development was very much a cottage industry; now systems are mass produced. Today’s research aims to increase the qualitative complexity of the rules to better reflect syntactic structures and aspects of meaning. We are now exploiting the qualitative advances of this approach.
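As a rough illustration of how statistics can suggest terms and their possible translations, the sketch below counts how often words co-occur in aligned sentence pairs and scores each word pair with the Dice coefficient. The toy corpus, the function names and the choice of the Dice coefficient are all assumptions made for this example; the interview does not say which statistical techniques are used in practice.

```python
from collections import Counter
from itertools import product

# Toy sentence-aligned corpus, invented purely for illustration.
PARALLEL = [
    ("the house", "la casa"),
    ("the car", "el coche"),
    ("a house", "una casa"),
    ("a car", "un coche"),
]

def dice_table(pairs):
    """Score every (source word, target word) pair by co-occurrence."""
    src_count, tgt_count, joint = Counter(), Counter(), Counter()
    for src, tgt in pairs:
        s_words, t_words = set(src.split()), set(tgt.split())
        src_count.update(s_words)          # sentences containing each source word
        tgt_count.update(t_words)          # sentences containing each target word
        joint.update(product(s_words, t_words))  # sentences containing both
    # Dice coefficient: 2 * joint / (source count + target count)
    return {
        (s, t): 2 * n / (src_count[s] + tgt_count[t])
        for (s, t), n in joint.items()
    }

def best_translation(word, table):
    """Return the target word with the highest score for `word`."""
    candidates = {t: score for (s, t), score in table.items() if s == word}
    return max(candidates, key=candidates.get)

table = dice_table(PARALLEL)
print(best_translation("house", table))
print(best_translation("car", table))
```

With only four sentence pairs the content words are matched cleanly, while function words such as "the" remain ambiguous. That is one reason such methods depend on very large volumes of text.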

– Machine translation systems have been in use since the 1970s. Is this technology now mature?

If maturity means readiness for use in industrial applications, the answer is definitely yes. MT has been widely used by top-ranking industrial and military institutions for 30 years. The European Community, Ford, SAP, Symantec, the US Armed Forces and many other organizations use MT every day. If maturity means use by the general public to enter a random sentence for translation, I would say, just as definitely, no. Like all technology, machine translation has its limits. You don’t expect a Mercedes to run well in the snow or sand: where it performs best is on a dry, surfaced road. Neither do you expect a Formula 1 car to win a race using ordinary petrol or alcohol: it needs special fuel. Unfortunately, very often people expect a perfect translation of an unclear or error-ridden text. For the time being at least, without clean and correct texts, machine translation will not work properly.

– Do you think society understands MT?

Not at all! It’s something I come across all the time. A lot of people think that “translation” is being able to tell what the author means, even if he or she has not expressed himself or herself clearly and correctly. Therefore, many have great expectations about what a translation system will be able to do. This is why they are always disappointed. On the other hand, those of us working in MT have to make a big effort to get society to better understand what it is useful for and when it works well: this is the mandate of the association I chair.

– What is MT about? Developing programs, translation systems, computerized translation, manufacturing electronic dictionaries? How exactly would you define this discipline?

MT is concerned with building computerized translation systems. Of course, this includes building electronic dictionaries, grammars, databases of word co-occurrences and other linguistic resources. But it also involves developing automatic translation evaluation processes, input text “cleaning” and analysis processes, and processes for guaranteeing that everything will run smoothly when a 300,000 page translation order arrives. As these are all very different processes and components, MT requires the cooperation of linguists, programmers and engineers.

– What are the stages of the machine translation process?

1. Document preparation. This is arguably the most important stage, because you have to ensure that the sentences of each document are understandable and correct.

2. Adaptation of the translation system. Just like a human translator, the machine translation system needs information about all the words it will come across in the documents. It can be taught new words through a process known as customization.

3. Document translation. Each document format, like Word, PDF or HTML, has many different features, apart from the sentences that actually have to be translated. This stage separates the content from the wrapping, as it were.

4. Translation verification. Quality control is very important for human and machine translators. Neither words nor sentences have just one meaning, and they are very easy to misinterpret.

5. Document distribution. This stage is more complex than is generally thought. When you receive 10,000 documents to be translated into 10 different languages, checking that they were all translated, putting them all in the right order without mixing up languages, etc., takes a lot of organizing.
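To make stage 3 more concrete, here is a minimal sketch of separating the content from the wrapping for an HTML document, using Python's standard `html.parser` module. The class and function names are hypothetical, and the sketch only collects text segments; a production system would also have to keep the markup so that the translated text can be put back in place, and would need to handle inline tags that split a sentence.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects translatable text, skipping script and style content."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.segments = []      # text segments found so far
        self._skip_depth = 0    # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.segments.append(text)

def extract_segments(html):
    """Return the list of text segments contained in an HTML string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.segments

page = (
    "<html><head><style>p{color:red}</style></head>"
    "<body><h1>Title</h1><p>Clean text translates well.</p></body></html>"
)
print(extract_segments(page))
```

Only the heading and the paragraph text are returned; the style rule and the tags themselves are left out of what would be sent for translation.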

– Is this technology a threat to human translators? Do you really think it creates jobs?

It is not a threat at all! MT takes the most routine work out of translators’ hands so that they can apply their expertise to more difficult tasks. We will always need human translators for more complex texts, such as legal and literary texts. MT today is mostly applied to situations where there is no human participation. It would be cruel even to have people translate e-mails, chats, SMS messages and random web pages. The required text throughput and translation speed are so high that it would be excruciating for a human being. It is a question of scale: an average human translator translates from 8 to 10 pages per day, whereas, on the web scale, 8 to 10 pages per second would be an extremely low rate. The adoption of new technologies, especially in a global economy, seldom boosts job creation. What it does do is open up an increasingly clear divide between low-skilled routine jobs and specialized occupations.
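The difference in scale can be worked out with a little arithmetic. The sketch below takes the figures quoted above and assumes, purely for illustration, that the web stream runs around the clock; the function name is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # assumes a continuous, 24-hour stream

def translators_needed(pages_per_second, pages_per_translator_per_day):
    """Number of human translators needed to keep up with a page stream."""
    pages_per_day = pages_per_second * SECONDS_PER_DAY
    return pages_per_day / pages_per_translator_per_day

# Even the "extremely low" rate of 8 pages per second, handled by
# translators who each manage 10 pages per day:
print(translators_needed(8, 10))  # 69120.0
```

In other words, even the lowest web-scale rate mentioned would occupy tens of thousands of full-time translators.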

– Is the deployment of this technology a technical or social problem?

First and foremost it is a social engineering problem because people have to change their behaviour and the way they see things. The MT process reproduces exactly the same stages as human translation, except for two key differences:

a) In translation systems, you have to be very, very careful about the wording. Human translators apply their technical knowledge (if any) to make up for incorrect wording, but machine translation systems have no such knowledge: they reproduce all too faithfully the mistakes in the original/source text. It is hard to get them to translate more accurately, but there are now extremely helpful automatic checking tools. Symantec is a recent example that uses an automatic checker and a translation system to achieve extremely fast and very good results.

b) Translation systems have to handle a lot of translated documents. What happens if an organization receives 5,000 instead of the customary 50 translated documents per week? Automating the translation process ends up uncovering problems with other parts of document handling.

– You mentioned the British National Corpus that includes a cross section of texts that are representative of the English language. It contains 15 million different terms, whereas machine translation dictionaries only contain 300,000 entries. How can this barrier to the construction of an acceptable MT system for society be overcome?

This collection of over 100 million English words is a good mirror of macro language features. One is that we use a great many words. However, word frequency is extremely variable: of the 15 million terms, 70% are seldom used! To overcome this barrier of variable vocabulary usage, we now use the most common words to create a core system, to which 5,000 to 10,000 customer-specific words are added. This is reasonably successful. For web applications, however, this simply does not work. Even the best systems are missing literally millions of words, and new words are invented every day. At least three remedies are applied at present: ask the user to “try again”, ask the user to enter a synonym, and automatically or semiautomatically build synonym databases. As I see it, we will have to develop web content author guidance systems, such as have already been developed for technical documents. There are strong economic arguments for moving in that direction.
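The idea of a core dictionary extended with customer-specific terms can be sketched as a simple coverage check: what share of the words in a text does the system know, and which ones are missing? The tokenizer, the function names and the tiny vocabularies below are assumptions made for the example and are not taken from any real MT system.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def coverage(text, core_vocab, customer_terms=frozenset()):
    """Return (share of tokens known, Counter of unknown tokens)."""
    tokens = tokenize(text)
    known = set(core_vocab) | set(customer_terms)
    missing = Counter(t for t in tokens if t not in known)
    covered = 1 - sum(missing.values()) / len(tokens) if tokens else 1.0
    return covered, missing

core = {"the", "blocks"}       # stand-in for the common-word core system
customer = {"firewall"}        # stand-in for customer-specific terms
text = "The firewall blocks the malware"

share_core, missing_core = coverage(text, core)
share_full, missing_full = coverage(text, core, customer)
print(round(share_core, 2), sorted(missing_core))
print(round(share_full, 2), sorted(missing_full))
```

Adding the customer term raises coverage, but "malware" is still unknown, which mirrors the problem described above for open web text.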

– The Association for Machine Translation in the Americas, which you chair, is organizing the AMTA 2008 conference, to be held in Hawaii next October. What innovations does the conference have in store?

There is always something! Come and see! One difference this year is that several groups are holding conferences together. AMTA, the International Workshop on Spoken Language Translation (IWSLT), a workshop by the US government agency NIST about how to evaluate translation evaluation methods, a Localization Industry Standards Association meeting attracting representatives from large corporations, and a group of Empirical Methods in Natural Language Processing (EMNLP) researchers will all be at the same hotel in the same week. Finally, as it is to be held in Hawaii, our colleagues from Asia will be there to add an even more international edge. For more information, see the conference web site.

Media Contact

All latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

First-ever combined heart pump and pig kidney transplant

…gives new hope to patient with terminal illness. Surgeons at NYU Langone Health performed the first-ever combined mechanical heart pump and gene-edited pig kidney transplant surgery in a 54-year-old woman…

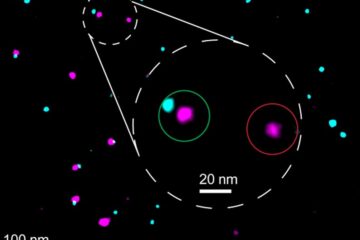

Biophysics: Testing how well biomarkers work

LMU researchers have developed a method to determine how reliably target proteins can be labeled using super-resolution fluorescence microscopy. Modern microscopy techniques make it possible to examine the inner workings…

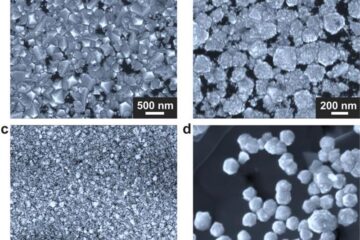

Making diamonds at ambient pressure

Scientists develop novel liquid metal alloy system to synthesize diamond under moderate conditions. Did you know that 99% of synthetic diamonds are currently produced using high-pressure and high-temperature (HPHT) methods?[2]…