Piecing together the next generation of cognitive robots

Making robots more responsive would allow them to be used in a greater variety of sophisticated tasks in the manufacturing and service sectors. Such robots could be used as home helpers and caregivers, for example.

As research into artificial cognitive systems (ACS) has progressed in recent years, it has grown into a highly fragmented field. Some researchers and teams have concentrated on machine vision, others on spatial cognition, and still others on human-robot interaction, among many other disciplines.

All have made progress but, as the EU-funded project CoSy (Cognitive Systems for Cognitive Assistants) has shown, researchers can advance the field even further by working together.

“We have brought together one of the broadest and most varied teams of researchers in this field,” says Geert-Jan Kruijff, the CoSy project manager at the German Research Centre for Artificial Intelligence. “This has resulted in an ACS architecture that integrates multiple cognitive functions to create robots that are more self-aware, understand their environment and can better interact with humans.”

The CoSy ACS is indeed greater than the sum of its parts. It incorporates a range of technologies, from a design for cognitive architecture, spatial cognition, human-robot interaction and situated dialogue processing to developmental models of visual processing.

“We have learnt how to put the pieces of ACS together, rather than just studying them separately,” adds Jeremy Wyatt, one of the project managers at the UK’s University of Birmingham.

To encourage further research, the team has released the ACS architecture toolkit it developed under an open-source license. The toolkit has already sparked several spin-off initiatives.

Overcoming the integration challenge

“The integration of different components in an ACS is one of the greatest challenges in robotics,” Kruijff says. “Getting robots to understand their environment from visual inputs and to interact with humans from spoken commands and relate what is said to their environment is enormously complex.”

Because of this complexity, most robots developed to date have been reactive: they simply respond to their environment rather than act in it autonomously. Like a beetle that scuttles away when prodded, many mobile robots back off when they collide with an object, but they have little self-awareness or understanding of the space around them and what they can do there.

By contrast, a demonstrator called the Explorer, developed by the CoSy team, has a more human-like understanding of its environment. It can even talk about its surroundings with a human.

Instead of relying only on geometric data to map its surroundings, the Explorer also incorporates qualitative, topographical information. Through interaction with humans, it can then learn to recognise objects, spaces and their uses. For example, if it sees a coffee machine, it may reason that it is in a kitchen; if it sees a sofa, it may conclude that it is in a living room.
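To illustrate the kind of inference described here, the following is a toy Python sketch that guesses a room's category by counting which rooms the observed objects are associated with. It is an assumption-laden illustration, not the CoSy implementation: the object-to-room table and the `infer_room` function are hypothetical, whereas the Explorer learns such associations through interaction with people and combines them with its map.

```python
from collections import Counter

# Hypothetical object-to-room associations. In the Explorer these would be
# learned through interaction with humans; here they are simply hard-coded.
OBJECT_ROOM_HINTS = {
    "coffee machine": ["kitchen"],
    "fridge": ["kitchen"],
    "sofa": ["living room"],
    "television": ["living room"],
    "desk": ["office"],
}


def infer_room(observed_objects):
    """Return the room category best supported by the observed objects.

    Each recognised object casts one vote for every room it is associated
    with. Returns None when none of the objects carries room information.
    """
    votes = Counter()
    for obj in observed_objects:
        for room in OBJECT_ROOM_HINTS.get(obj, []):
            votes[room] += 1
    if not votes:
        return None
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(infer_room(["coffee machine", "mug"]))  # kitchen
    print(infer_room(["sofa", "television"]))      # living room
```

Simple vote counting keeps the example readable; a real system would also need to weigh uncertain detections and revise its conclusion as new objects come into view.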

“The robot sees a room much as humans see it because it has a conceptual understanding of space,” Kruijff notes.

Another demonstrator, called the PlayMate, applies machine vision and spatial recognition in a different context: it uses a robotic arm to manipulate objects in response to human instructions.

In Wyatt’s view, developing machine vision and integrating it with other ACS components is still a big obstacle to creating more advanced robots, especially if the goal is to replicate human sight and awareness.

“Don’t underestimate how sophisticated we are…,” he says. “We don’t realise how agile our brains are at interpreting what we see. You can pick out colours from a scene, look at a bottle of water, a packet of cornflakes, or a coffee mug and know what activities each of them allows. You recognise them, see where to grasp them, and how to manipulate them, and you do it all seamlessly. We are still so very, very far from doing that with robots.”

Robotic ‘gofers’

Fortunately, replicating human-like intelligence and awareness, if it is indeed possible, is not necessary when creating robots that are useful to humans.

Kruijff foresees robots akin to those developed in the CoSy project becoming an everyday sight over the coming years in what he describes as ‘gofer scenarios’. Some robots with a lower level of intelligence are already being used to bring medicines to patients in hospitals, and such robots could also transport documents around office buildings.

Robotic vacuum cleaners are becoming increasingly popular in homes, as are toys that incorporate artificial intelligence. Robots that are able to interact with people open the door to robotic home helpers and caregivers.

“In the future people may all be waited on by robots in their old age,” Wyatt says.

Media Contact

All latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

Superradiant atoms could push the boundaries of how precisely time can be measured

Superradiant atoms can help us measure time more precisely than ever. In a new study, researchers from the University of Copenhagen present a new method for measuring the time interval,…

Ion thermoelectric conversion devices for near room temperature

The electrode sheet of the thermoelectric device consists of ionic hydrogel, which is sandwiched between the electrodes to form, and the Prussian blue on the electrode undergoes a redox reaction…

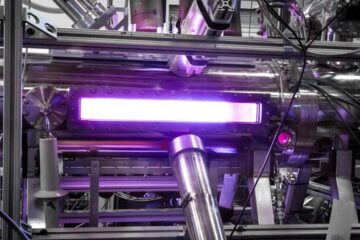

Zap Energy achieves 37-million-degree temperatures in a compact device

New publication reports record electron temperatures for a small-scale, sheared-flow-stabilized Z-pinch fusion device. In the nine decades since humans first produced fusion reactions, only a few fusion technologies have demonstrated…