The Coming Revolution in Artificial Intelligence

Now, computer scientist Hava Siegelmann of the University of Massachusetts Amherst, an expert in neural networks, has taken Turing’s work to its next logical step. She is translating her 1993 discovery of what she has dubbed “Super-Turing” computation into an adaptable computational system that learns and evolves, using input from the environment in a way much more like our brains do than classic Turing-type computers. She and her post-doctoral research colleague Jeremie Cabessa report on the advance in the current issue of Neural Computation.

“This model is inspired by the brain,” she says. “It is a mathematical formulation of the brain’s neural networks with their adaptive abilities.” The authors show that when the model is installed in an environment offering constant sensory stimuli like the real world, and when all stimulus-response pairs are considered over the machine’s lifetime, the Super Turing model yields an exponentially greater repertoire of behaviors than the classical computer or Turing model. They demonstrate that the Super-Turing model is superior for human-like tasks and learning.

“Each time a Super-Turing machine gets input it literally becomes a different machine,” Siegelmann says. “You don’t want this for your PC. They are fine and fast calculators and we need them to do that. But if you want a robot to accompany a blind person to the grocery store, you’d like one that can navigate in a dynamic environment. If you want a machine to interact successfully with a human partner, you’d like one that can adapt to idiosyncratic speech, recognize facial patterns and allow interactions between partners to evolve just like we do. That’s what this model can offer.”

Classical computers work sequentially and can only operate in the very orchestrated, specific environments for which they were programmed. They can look intelligent if they’ve been told what to expect and how to respond, Siegelmann says. But they can’t take in new information or use it to improve problem-solving, provide richer alternatives or perform other higher-intelligence tasks.

In 1948, Turing himself predicted another kind of computation that would mimic life itself, but he died without developing his concept of a machine that could use what he called “adaptive inference.” In 1993, Siegelmann, then at Rutgers, showed independently in her doctoral thesis that a very different kind of computation, vastly different from the “calculating computer” model and more like Turing’s prediction of life-like intelligence, was possible. She published her findings in Science and in a book shortly after.

“I was young enough to be curious, wanting to understand why the Turing model looked really strong,” she recalls. “I tried to prove the conjecture that neural networks are very weak and instead found that some of the early work was faulty. I was surprised to find out via mathematical analysis that the neural models had some capabilities that surpass the Turing model. So I re-read Turing and found that he believed there would be an adaptive model that was stronger based on continuous calculations.”

Each step in Siegelmann’s model starts with a new Turing machine that computes once and then adapts. The size of the set of natural numbers is represented by the notation aleph-zero, representing also the number of different infinite calculations possible by classical Turing machines in a real-world environment on continuously arriving inputs. By contrast, Siegelmann’s most recent analysis demonstrates that Super-Turing computation has 2 to the power aleph-zero, possible behaviors. “If the Turing machine had 300 behaviors, the Super-Turing would have 2300, more than the number of atoms in the observable universe,” she explains.

The new Super-Turing machine will not only be flexible and adaptable but economical. This means that when presented with a visual problem, for example, it will act more like our human brains and choose salient features in the environment on which to focus, rather than using its power to visually sample the entire scene as a camera does. This economy of effort, using only as much attention as needed, is another hallmark of high artificial intelligence, Siegelmann says.

“If a Turing machine is like a train on a fixed track, a Super-Turing machine is like an airplane. It can haul a heavy load, but also move in endless directions and vary its destination as needed. The Super-Turing framework allows a stimulus to actually change the computer at each computational step, behaving in a way much closer to that of the constantly adapting and evolving brain,” she adds.

Siegelmann and two colleagues recently were notified that they will receive a grant to make the first ever Super-Turing computer, based on Analog Recurrent Neural Networks. The device is expected to introduce a level of intelligence not seen before in artificial computation.

Hava Siegelmann

413/577-4282

hava@cs.umass.edu

Media Contact

More Information:

http://www.umass.eduAll latest news from the category: Information Technology

Here you can find a summary of innovations in the fields of information and data processing and up-to-date developments on IT equipment and hardware.

This area covers topics such as IT services, IT architectures, IT management and telecommunications.

Newest articles

Superradiant atoms could push the boundaries of how precisely time can be measured

Superradiant atoms can help us measure time more precisely than ever. In a new study, researchers from the University of Copenhagen present a new method for measuring the time interval,…

Ion thermoelectric conversion devices for near room temperature

The electrode sheet of the thermoelectric device consists of ionic hydrogel, which is sandwiched between the electrodes to form, and the Prussian blue on the electrode undergoes a redox reaction…

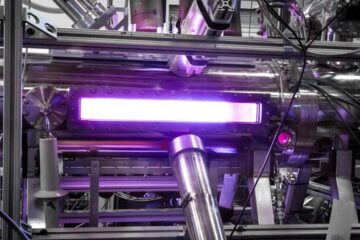

Zap Energy achieves 37-million-degree temperatures in a compact device

New publication reports record electron temperatures for a small-scale, sheared-flow-stabilized Z-pinch fusion device. In the nine decades since humans first produced fusion reactions, only a few fusion technologies have demonstrated…