New Techniques Will Control Heat in Data Centers

Rather than going to computation, a significant portion of the electricity consumed by data centers must be used for cooling the servers, a demand that continues to increase as computer processing power grows.

And the trend toward cloud computing will expand the need for both servers and cooling.

At the Georgia Institute of Technology, researchers are using a 1,100-square-foot simulated data center to optimize cooling strategies and develop new heat transfer models that can be used by the designers of future facilities and equipment. The goal is to reduce the portion of electricity used to cool data center equipment by as much as 15 percent.

“Computers convert electricity to heat as they operate,” said Yogendra Joshi, a professor in Georgia Tech’s Woodruff School of Mechanical Engineering. “As they switch on and off, transistors produce heat, and all of that heat must be ultimately transferred to the environment. If you are looking at a few computers, the heat produced is not that much. But data centers generate heat at the rate of tens of megawatts that must be removed.”

Summaries of the research have been published in the Journal of Electronic Packaging and the International Journal of Heat and Mass Transfer and presented at the Second International Conference on Thermal Issues in Emerging Technologies, Theory and Applications. The research has been sponsored by the U.S. Office of Naval Research and by the Consortium for Energy Efficient Thermal Management.

Five years ago, a typical refrigerator-sized server cabinet produced about one to five kilowatts of heat. Today, high-performance computing cabinets of about the same size produce as much as 28 kilowatts, and machines already planned for production will produce twice as much.

“Some people have called this the Moore’s Law of data centers,” observed Joshi, who is also the John M. McKenney and Warren D. Shiver Chair in the School of Mechanical Engineering. “The growth of cooling requirements parallels the growth of computing power, which roughly doubles every 18 months. That has brought the energy requirements of data centers into the forefront.”
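The "doubles every 18 months" rule of thumb can be turned into a simple projection. The sketch below is only an illustration. It assumes clean exponential growth, which real hardware roadmaps do not follow exactly. The function name `projected_heat_kw` is hypothetical.

```python
def projected_heat_kw(current_kw: float, years_ahead: float, doubling_months: float = 18.0) -> float:
    """Project per-cabinet heat load, assuming it doubles every `doubling_months` months.

    Illustrative exponential model only; not a forecast taken from the article.
    """
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    doublings = (years_ahead * 12.0) / doubling_months
    return current_kw * 2.0 ** doublings


if __name__ == "__main__":
    # Start from the 28 kW high-performance cabinet cited in the article.
    for years in (0, 1.5, 3, 4.5):
        print(f"{years:>4} years: {projected_heat_kw(28.0, years):6.1f} kW")
```

Under this assumption, one doubling period takes a 28 kW cabinet to 56 kW. That matches the "twice as much" figure for machines already planned, although the article does not tie those machines to a specific date.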

Most existing data centers rely on large air conditioning systems that pump cool air to server racks. Data centers have traditionally used raised floors to allow space for circulating air beneath the equipment, but cooling can also come from the ceilings. As cooling demands have increased, data center designers have developed complex systems of alternating cooling outlets and hot air returns throughout the facilities.

“How these are arranged is very important to how much cooling power will be required,” Joshi said. “There are ways to rearrange equipment within data centers to promote better air flow and greater energy efficiency, and we are exploring ways to improve those.”

Before long, data centers will likely have to use liquid cooling to replace chilled air in certain high-powered machines. That will introduce a new level of complexity for the data centers and create differential cooling needs that will have to be accounted for in their design and maintenance.
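The sensible-heat balance Q = ρ·c_p·V̇·ΔT shows why air eventually runs out of headroom. The volumetric flow needed to carry heat away is inversely proportional to the coolant's volumetric heat capacity.

The sketch below uses approximate textbook property values at roughly room temperature:

- air: about 1.2 kg/m³ and 1005 J/(kg·K)
- water: about 998 kg/m³ and 4186 J/(kg·K)

It also assumes a 10 K coolant temperature rise. These numbers are illustrative assumptions, not measurements from the Georgia Tech facility.

```python
# Approximate room-temperature properties (illustrative textbook values).
AIR_DENSITY = 1.2          # kg/m^3
AIR_CP = 1005.0            # J/(kg*K)
WATER_DENSITY = 998.0      # kg/m^3
WATER_CP = 4186.0          # J/(kg*K)


def required_flow_m3_per_s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volumetric coolant flow needed to absorb heat_w with a temperature rise of delta_t_k.

    Rearranged from the sensible-heat balance Q = rho * cp * V_dot * dT.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (density * cp * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 28_000.0   # the 28 kW cabinet mentioned in the article
    delta_t = 10.0           # assumed coolant temperature rise, K
    air = required_flow_m3_per_s(rack_heat_w, AIR_DENSITY, AIR_CP, delta_t)
    water = required_flow_m3_per_s(rack_heat_w, WATER_DENSITY, WATER_CP, delta_t)
    print(f"air:   {air:.2f} m^3/s")
    print(f"water: {water * 1000:.2f} L/s")
    print(f"air needs about {air / water:.0f}x the volume flow of water")
```

With these assumed values, removing 28 kW takes roughly 2.3 m³ of air per second but well under one liter of water per second. That is a ratio of several thousand, which is why liquid cooling becomes attractive as rack power climbs.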

Joshi and his students have assembled a small high-power-density data center on the Georgia Tech campus that includes different types of cooling systems, partitions to change room volumes and both real and simulated server racks. They use fog generators and lasers to visualize air flow patterns, infrared sensors to quantify heat, airflow sensors to measure the output of fans and other systems, and sophisticated thermometers to measure temperatures on server motherboards.

Beyond studying the effects of alternate airflow patterns, they are also verifying that cooling systems are doing what they’re supposed to do.

Because tasks are dynamically assigned to specific machines, heat generation varies across a data center. Joshi’s group is also exploring algorithms that could help even out the computing load by assigning new computationally intensive tasks to cooler machines, avoiding hot spots.
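One simple form of this load-balancing idea is a greedy rule: send each new compute-intensive job to whichever server is currently coolest. The sketch below is a hypothetical illustration, not the Georgia Tech group's algorithm.

It assumes each job raises its host's temperature by a fixed, known amount. A real scheduler would instead rely on sensor readings and a thermal model. The server names and temperatures are made up.

```python
import heapq
from typing import Dict, List, Tuple


def assign_to_coolest(server_temps_c: Dict[str, float],
                      job_temp_rise_c: List[float]) -> Tuple[List[str], Dict[str, float]]:
    """Greedily place each job on the currently coolest server.

    server_temps_c: current temperature per server (hypothetical readings).
    job_temp_rise_c: assumed temperature rise each job causes on its host.
    Returns the chosen server for each job and the projected final temperatures.
    """
    # Min-heap keyed on temperature, so the coolest server is always on top.
    heap = [(temp, name) for name, temp in server_temps_c.items()]
    heapq.heapify(heap)
    placements = []
    for rise in job_temp_rise_c:
        temp, name = heapq.heappop(heap)
        placements.append(name)
        # Return the server to the heap with its projected temperature after taking the job.
        heapq.heappush(heap, (temp + rise, name))
    final = {name: temp for temp, name in heap}
    return placements, final


if __name__ == "__main__":
    servers = {"rack1-a": 31.0, "rack1-b": 27.5, "rack2-a": 29.0}
    jobs = [3.0, 3.0, 2.0, 4.0]
    placements, final = assign_to_coolest(servers, jobs)
    print(placements)
    print(final)
```

The heap keeps each placement decision at O(log n) in the number of servers. Ties on temperature are broken by server name, because Python compares tuples element by element.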

Another issue they’re studying is what happens when utility-system power to a data center is cut off. The servers themselves continue to operate because they receive electricity from an uninterruptible power supply. But the cooling equipment is powered by backup generators, which can take minutes to get up to speed.

During the brief time without cooling, heat builds up in the servers. Existing computer models predict that temperatures will reach dangerous levels in a matter of seconds, but measurements made by Joshi’s graduate students show that the equipment can run for as long as six minutes without cooling.

“We’re developing models for different parts of the data center to learn how they respond to changes in temperature,” said Shawn Shields, a former graduate student in Joshi’s lab. “Existing models consider that temperature changes across a server rack will be instantaneous, but we’ve found that it takes a relatively long time for the server to reach a steady state.”
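A first-order (lumped-capacitance) thermal model can illustrate the contrast between an "instantaneous" model and the measured behavior. In such a model, temperature approaches its new steady state exponentially with time constant τ:

T(t) = T_ss + (T_0 - T_ss)·e^(-t/τ)

This model is an assumption made for illustration, not the model the researchers published. The temperatures and time constants below are hypothetical values chosen only to show the calculation.

```python
import math


def time_to_limit_s(t0_c: float, t_steady_c: float, t_limit_c: float, tau_s: float) -> float:
    """Seconds until a first-order system starting at t0_c reaches t_limit_c.

    Model: T(t) = T_ss + (T0 - T_ss) * exp(-t / tau).
    Returns 0.0 if already at or above the limit, and math.inf if the
    steady state never exceeds the limit.
    """
    if tau_s <= 0:
        raise ValueError("tau_s must be positive")
    if t_limit_c <= t0_c:
        return 0.0
    if t_steady_c <= t_limit_c:
        return math.inf
    # Solve T(t) = t_limit_c for t.
    return tau_s * math.log((t_steady_c - t0_c) / (t_steady_c - t_limit_c))


if __name__ == "__main__":
    # Hypothetical values: 25 C before the outage, 60 C eventual steady state
    # with no cooling, and 45 C treated as the danger threshold.
    for tau in (1.0, 60.0, 300.0):  # near-instantaneous vs. larger thermal mass
        t = time_to_limit_s(25.0, 60.0, 45.0, tau)
        print(f"tau = {tau:>6} s -> limit reached after {t:7.1f} s")
```

With these made-up numbers, τ = 60 s gives roughly 51 s before the threshold is reached, and τ = 300 s gives roughly 254 s. Because the time scales linearly with τ, a model that ignores the rack's thermal mass (τ near zero) predicts almost immediate overheating.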

Beyond reducing cooling load, the researchers are also looking at how waste heat from data centers can be used. The problem is that the heat is at relatively low temperatures, which makes it inefficient to convert to other forms of energy. Options may include heating nearby buildings or pre-heating water, Joshi said.
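The thermodynamic reason low-temperature heat is hard to convert is the Carnot limit, η_max = 1 - T_cold/T_hot, with temperatures in kelvin. The sketch below evaluates that ceiling. The 45 °C exhaust temperature and 20 °C ambient temperature are assumed for illustration; they are not figures from the article.

```python
def carnot_limit(t_hot_c: float, t_cold_c: float) -> float:
    """Maximum fraction of heat convertible to work between two temperatures given in deg C."""
    t_hot_k = t_hot_c + 273.15
    t_cold_k = t_cold_c + 273.15
    if t_hot_k <= t_cold_k:
        return 0.0  # no work can be extracted without a temperature difference
    return 1.0 - t_cold_k / t_hot_k


if __name__ == "__main__":
    # Assumed data-center exhaust vs. a much hotter source, both rejecting heat to 20 C.
    for t_hot in (45.0, 500.0):
        print(f"{t_hot:5.0f} C source: at most {carnot_limit(t_hot, 20.0):.1%} to work")
```

Even an ideal engine could turn less than a tenth of 45 °C heat into work. That is why direct uses such as space heating or water pre-heating are more attractive.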

Data obtained by the researchers with thermometers and airflow meters is being used to validate computer models that are reasonably accurate but run quickly. In the future, these models will help data center operators do a better job of optimizing cooling in real time, he said.

Joshi believes there’s potential to reduce data center energy consumption by as much as 15 percent by adopting more efficient cooling techniques like those under development in his lab.

“Our data center laboratory is a complete sandbox in which we can study all sorts of options without affecting anybody’s computing projects,” he added. “We can look at interesting ways to improve rack-level cooling, liquid cooling and thermoelectric cooling.”

More Information:

http://www.gatech.edu

This topic covers issues related to energy generation, conversion, transportation and consumption and how the industry is addressing the challenge of energy efficiency in general.

innovations-report provides in-depth and informative reports and articles on subjects ranging from wind energy, fuel cell technology, solar energy, geothermal energy, petroleum, gas, nuclear engineering, alternative energy and energy efficiency to fusion, hydrogen and superconductor technologies.

Newest articles

Silicon Carbide Innovation Alliance to drive industrial-scale semiconductor work

Known for its ability to withstand extreme environments and high voltages, silicon carbide (SiC) is a semiconducting material made up of silicon and carbon atoms arranged into crystals that is…

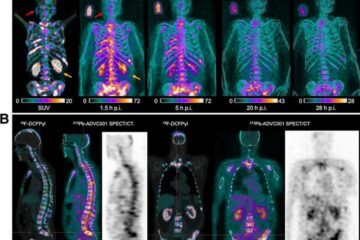

New SPECT/CT technique shows impressive biomarker identification

…offers increased access for prostate cancer patients. A novel SPECT/CT acquisition method can accurately detect radiopharmaceutical biodistribution in a convenient manner for prostate cancer patients, opening the door for more…

How 3D printers can give robots a soft touch

Soft skin coverings and touch sensors have emerged as a promising feature for robots that are both safer and more intuitive for human interaction, but they are expensive and difficult…