Inexpensive 'nano-camera' can operate at the speed of light

A $500 “nano-camera” that can operate at the speed of light has been developed by researchers in the MIT Media Lab.

The three-dimensional camera, which was presented last week at Siggraph Asia in Hong Kong, could be used in medical imaging and collision-avoidance detectors for cars, and to improve the accuracy of motion tracking and gesture-recognition devices used in interactive gaming.

The camera is based on “Time of Flight” technology like that used in Microsoft's recently launched second-generation Kinect device, in which the location of objects is calculated by how long it takes a light signal to reflect off a surface and return to the sensor. However, unlike existing devices based on this technology, the new camera is not fooled by rain, fog, or even translucent objects, says co-author Achuta Kadambi, a graduate student at MIT.

“Using the current state of the art, such as the new Kinect, you cannot capture translucent objects in 3-D,” Kadambi says. “That is because the light that bounces off the transparent object and the background smear into one pixel on the camera. Using our technique you can generate 3-D models of translucent or near-transparent objects.”

In a conventional Time of Flight camera, a light signal is fired at a scene, where it bounces off an object and returns to strike a pixel on the sensor. Because the speed of light is known, the camera can convert the signal's round-trip time into the distance travelled and, from that, the depth of the object that reflected it.
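As a rough illustration of that calculation, the minimal Python sketch below converts a round-trip time into a depth. The function name and the 10-nanosecond example value are assumptions chosen for illustration, not details from the researchers' system.

```python
C = 299_792_458.0  # speed of light in vacuum, metres per second


def depth_from_round_trip(round_trip_seconds: float) -> float:
    """Return depth in metres for a measured round-trip time.

    The light travels to the object and back, so the one-way
    distance is half of the total path length.
    """
    return C * round_trip_seconds / 2.0


# Hypothetical example: a reflection that arrives 10 nanoseconds after emission.
depth_m = depth_from_round_trip(10e-9)
print(f"{depth_m:.3f} m")  # prints 1.499 m
```

A round trip of 10 nanoseconds corresponds to an object about 1.5 metres away, which is why nanosecond-scale timing matters at room scale.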

Unfortunately, changing environmental conditions, semitransparent surfaces, edges, and motion all create multiple reflections that mix with the original signal and return to the camera, making it difficult to determine which measurement is correct.
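The effect of mixed reflections can be sketched with a simplified model. The Python example below assumes a phase-based, continuous-wave Time of Flight camera in which each reflecting surface contributes a complex phasor, and the pixel sees only their sum. The 30 MHz modulation frequency, the depths, and the amplitudes are hypothetical values, and the model is a textbook simplification rather than a description of any particular device.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in vacuum, m/s
F_MOD = 30e6       # hypothetical modulation frequency in Hz (an assumption)


def phasor(depth_m, amplitude):
    """Complex return from one surface; phase grows with round-trip distance."""
    phase = 4.0 * math.pi * F_MOD * depth_m / C
    return amplitude * cmath.exp(1j * phase)


def apparent_depth(returns):
    """Depth a single-path camera would report for (depth_m, amplitude) pairs."""
    total = sum(phasor(d, a) for d, a in returns)  # the pixel sees only the sum
    phase = cmath.phase(total) % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * F_MOD)


# One opaque wall at 2 m, then the same wall behind a weakly reflecting pane at 1 m.
wall_only = apparent_depth([(2.0, 1.0)])
glass_and_wall = apparent_depth([(1.0, 0.4), (2.0, 1.0)])
print(f"wall only: {wall_only:.2f} m")
print(f"glass in front of wall: {glass_and_wall:.2f} m")
```

With a single surface the model recovers the true depth. With two surfaces it reports one depth somewhere between them, matching neither the pane nor the wall.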

Instead, the new device borrows an encoding technique common in the telecommunications industry to calculate the distance a signal has travelled, says Ramesh Raskar, an associate professor of media arts and sciences and leader of the Camera Culture group within the Media Lab. Raskar developed the method alongside Kadambi, Refael Whyte, Ayush Bhandari, and Christopher Barsi at MIT and Adrian Dorrington and Lee Streeter from the University of Waikato in New Zealand.

“We use a new method that allows us to encode information in time,” Raskar says. “So when the data comes back, we can do calculations that are very common in the telecommunications world, to estimate different distances from the single signal.”
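The article does not spell out the encoding, so the Python sketch below is only a generic illustration of the idea, not the team's algorithm. It assumes the emitted signal is a pseudo-random binary code (a 31-chip maximal-length sequence, a standard telecommunications construction) and that the sensor receives a sum of delayed, attenuated copies. Correlating the received signal against the code then produces a separate peak for each path. All function names, delays, and amplitudes are hypothetical.

```python
def m_sequence(length=31):
    """31-chip maximal-length code from the recurrence a[n+5] = a[n] XOR a[n+2]."""
    bits = [1, 0, 0, 0, 0]
    while len(bits) < length:
        bits.append(bits[-5] ^ bits[-3])
    return [1.0 if b else -1.0 for b in bits]  # map bits to +1 / -1 chips


def mix_paths(code, paths):
    """Sum circularly delayed, scaled copies of the code: one per (delay, amplitude)."""
    n = len(code)
    return [sum(a * code[(i - delay) % n] for delay, a in paths) for i in range(n)]


def find_path_delays(received, code, num_paths):
    """Circularly correlate with the code and return the strongest lags, sorted."""
    n = len(code)
    corr = [sum(received[i] * code[(i - lag) % n] for i in range(n))
            for lag in range(n)]
    strongest = sorted(range(n), key=lambda lag: corr[lag], reverse=True)[:num_paths]
    return sorted(strongest)


code = m_sequence()
# Hypothetical scene: a faint reflection delayed by 3 chips, a strong one by 7 chips.
received = mix_paths(code, [(3, 0.4), (7, 1.0)])
print(find_path_delays(received, code, 2))  # prints [3, 7]
```

The code's sharp autocorrelation is what keeps the two paths from blurring into each other. A real scene would also involve noise and delays that do not fall on whole chips, which this sketch ignores.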

The idea is similar to existing techniques that clear blurring in photographs, says Bhandari, a graduate student in the Media Lab. “People with shaky hands tend to take blurry photographs with their cellphones because several shifted versions of the scene smear together,” Bhandari says. “By placing some assumptions on the model — for example that much of this blurring was caused by a jittery hand — the image can be unsmeared to produce a sharper picture.”

The new model, which the team has dubbed nanophotography, unsmears the individual optical paths.

In 2011 Raskar's group unveiled a trillion-frame-per-second camera capable of capturing a single pulse of light as it travelled through a scene. The camera does this by probing the scene with a femtosecond impulse of light and then using fast but expensive laboratory-grade optical equipment to take an image each time. However, this “femto-camera” costs around $500,000 to build.

In contrast, the new “nano-camera” probes the scene with a continuous-wave signal that oscillates at nanosecond periods. This allows the team to use inexpensive hardware (off-the-shelf light-emitting diodes, or LEDs, can strobe at nanosecond periods, for example), meaning the camera can reach a time resolution within one order of magnitude of femtophotography while costing just $500.

“By solving the multipath problem, essentially just by changing the code, we are able to unmix the light paths and therefore visualize light moving across the scene,” Kadambi says. “So we are able to get similar results to the $500,000 camera, albeit of slightly lower quality, for just $500.”

More information: http://www.mit.edu

This topic covers issues related to energy generation, conversion, transportation and consumption and how the industry is addressing the challenge of energy efficiency in general.

innovations-report provides in-depth and informative reports and articles on subjects ranging from wind energy, fuel cell technology, solar energy, geothermal energy, petroleum, gas, nuclear engineering, alternative energy and energy efficiency to fusion, hydrogen and superconductor technologies.

Newest articles

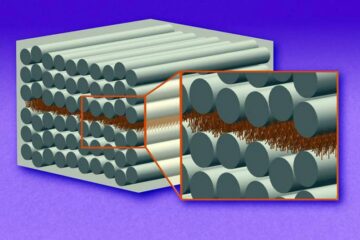

“Nanostitches” enable lighter and tougher composite materials

In research that may lead to next-generation airplanes and spacecraft, MIT engineers used carbon nanotubes to prevent cracking in multilayered composites. To save on fuel and reduce aircraft emissions, engineers…

Trash to treasure

Researchers turn metal waste into catalyst for hydrogen. Scientists have found a way to transform metal waste into a highly efficient catalyst to make hydrogen from water, a discovery that…

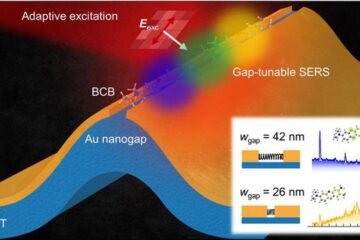

Real-time detection of infectious disease viruses

… by searching for molecular fingerprinting. A research team consisting of Professor Kyoung-Duck Park and Taeyoung Moon and Huitae Joo, PhD candidates, from the Department of Physics at Pohang University…