New study casts doubt on validity of standard earthquake-prediction model

A new study by Stanford University geophysicists is raising serious questions about a fundamental technique used to make long-range earthquake predictions.

Writing in the journal Nature, geophysicists Jessica Murray and Paul Segall show how a widely used earthquake model failed to predict when a long-anticipated magnitude 6 quake would strike the San Andreas Fault in Central California.

In their Sept. 19 Nature study, Murray and Segall analyzed the “time-predictable recurrence model” – a technique for estimating the time when an earthquake will occur. This model is used to calculate the probability of future earthquakes.

Developed by Japanese geophysicists K. Shimazaki and T. Nakata in 1980, the time-predictable model has become a standard tool for hazard prediction in many earthquake-prone regions – including the United States, Japan and New Zealand.

Strain build-up

The time-predictable model is based on the theory that earthquakes in fault zones are caused by the constant build-up and release of strain in the Earth’s crust.

“With a plate boundary like the San Andreas, you have the North American plate on one side and the Pacific plate on the other,” explained Segall, a professor of geophysics. “The two plates are moving at a very steady rate with respect to one another, so strain is being put into the system at an essentially constant rate.”

When an earthquake occurs on the fault, a certain amount of accumulated strain is released, added Murray, a geophysics graduate student.

“Following the quake, strain builds up again because of the continuous grinding of the tectonic plates,” she noted. “According to the time-predictable model, if you know the size of the most recent earthquake and the rate of strain accumulation afterwards, you should be able to forecast the time that the next event will happen simply by dividing the strain released by the strain-accumulation rate.”
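The arithmetic Murray describes can be sketched in a few lines of Python. This is only an illustration of the division she mentions, not the statistical analysis used in the study: the function name `time_predictable_forecast`, its parameters and the input values are all hypothetical, and the strain figures are in arbitrary units chosen for easy arithmetic.

```python
def time_predictable_forecast(last_event_year, strain_released, strain_rate):
    """Estimate the year of the next quake under the time-predictable model.

    strain_released: strain released by the most recent earthquake
    strain_rate: strain accumulated per year since that earthquake
    (both in the same arbitrary units; the values used below are made up)
    """
    if strain_rate <= 0:
        raise ValueError("strain_rate must be positive")
    # Time needed to re-accumulate the strain released by the last event
    recurrence_interval = strain_released / strain_rate
    return last_event_year + recurrence_interval


# Hypothetical inputs, not taken from the Parkfield data
forecast_year = time_predictable_forecast(1966, strain_released=30.0, strain_rate=2.0)
print(forecast_year)
```

With these made-up inputs, the recurrence interval is 15 years. A real forecast would also have to carry the uncertainties in both measurements through the calculation, which is why such a model yields a window of years rather than a single date.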

Parkfield, Calif.

Although the model makes sense on paper, Murray and Segall wanted to put it to the test using long-term data collected in an ideal setting. Their choice was Parkfield – a tiny town in Central California midway between San Francisco and Los Angeles. Perched along the San Andreas Fault, Parkfield has been rocked by a magnitude 6 earthquake every 22 years on average since 1857. The last one struck in 1966, and geologists have been collecting earthquake data there ever since.

“Parkfield is a good place to test the model because we have measurements of surface ground motion during the 1966 earthquake and of the strain that’s been accumulating since,” Murray noted. “It’s also located in a fairly simple part of the San Andreas system because it’s on the main strand of the fault and doesn’t have other parallel faults running nearby.”

When Murray and Segall applied the time-predictable model to the Parkfield data, they came up with a forecast of when the next earthquake would occur.

“According to the model, a magnitude 6 earthquake should have taken place between 1973 and 1987 – but it didn’t,” Murray said. “In fact, 15 years have gone by. Our results show, with 95 percent confidence, that it should definitely have happened before now, and it hasn’t, so that shows that the model doesn’t work – at least in this location.”

Could the time-predictable method work in other parts of the fault, including the densely populated metropolitan areas of Northern and Southern California? The researchers have their doubts.

“We used the model at Parkfield where things are fairly simple,” Murray observed, “but when you come to the Bay Area or Los Angeles, there are a lot more fault interactions, so it’s probably even less likely to work in those places.”

Segall agreed: “I have to say, in my heart, I believe this model is too simplistic. It’s really not likely to work elsewhere, either, but we still should test it at other sites. Lots of people do these kinds of calculations. What Jessica has done, however, is to be extremely careful. She really bent over backwards to try to understand what the uncertainties of these kinds of calculations are – consulting with our colleagues in the Stanford Statistics Department just to make sure that this was done as carefully and precisely as anybody knows how to do. So we feel quite confident that there’s no way to fudge out of this by saying there are uncertainties in the data or in the method.”

Use with caution

Segall pointed out that government agencies in a number of Pacific Rim countries routinely use this technique for long-range hazard assessments.

For example, the U.S. Geological Survey (USGS) relied on the time-predictable model and two other models in its widely publicized 1999 report projecting a 70-percent probability of a large quake striking the San Francisco Bay Area by 2030.

“We’re in a tough situation, because agencies like the USGS – which have the responsibility for issuing forecasts so that city planners and builders can use the best available knowledge – have to do the best they can with what information they have,” Segall observed. “The message I would send to my geophysical colleagues about this model is, ‘Use with caution.’”

Technological advances in earthquake science could make long-range forecasting a reality one day, added Murray, pointing to the recently launched San Andreas Fault drilling experiment in Parkfield under the aegis of USGS and Stanford.

In the meantime, people living in earthquake-prone regions should plan for the inevitable.

“I always tell people to prepare,” Segall concluded. “We know big earthquakes have happened in the past, we know they will happen again. We just don’t know when.”

Media Contact

All latest news from the category: Earth Sciences

Earth Sciences (also referred to as Geosciences), which deals with basic issues surrounding our planet, plays a vital role in the area of energy and raw materials supply.

Earth Sciences comprises subjects such as geology, geography, geological informatics, paleontology, mineralogy, petrography, crystallography, geophysics, geodesy, glaciology, cartography, photogrammetry, meteorology and seismology, early-warning systems, earthquake research and polar research.

Newest articles

High-energy-density aqueous battery based on halogen multi-electron transfer

Traditional non-aqueous lithium-ion batteries have a high energy density, but their safety is compromised due to the flammable organic electrolytes they utilize. Aqueous batteries use water as the solvent for…

First-ever combined heart pump and pig kidney transplant

…gives new hope to patient with terminal illness. Surgeons at NYU Langone Health performed the first-ever combined mechanical heart pump and gene-edited pig kidney transplant surgery in a 54-year-old woman…

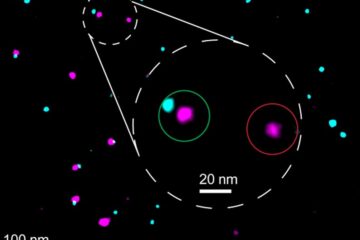

Biophysics: Testing how well biomarkers work

LMU researchers have developed a method to determine how reliably target proteins can be labeled using super-resolution fluorescence microscopy. Modern microscopy techniques make it possible to examine the inner workings…