Supercomputer Unleashes Virtual 9.0 Megaquake in Pacific Northwest

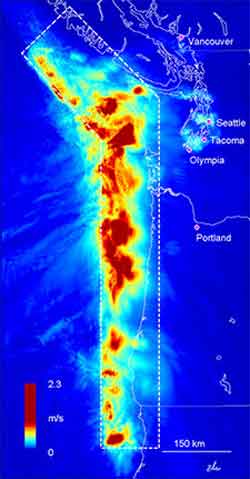

Virtual 9.0 Earthquake Shakes Pacific Northwest

Scientists used a supercomputer-driven “virtual earthquake” to explore likely ground shaking in a magnitude 9.0 megathrust earthquake in the Pacific Northwest. Peak ground velocities are displayed in yellow and red. The legend represents speed in meters per second (m/s) with red equaling 2.3 m/s. Although the largest ground motions occur offshore near the fault and decrease eastward, sedimentary basins lying beneath some cities amplify the shaking in Seattle, Tacoma, Olympia, and Vancouver, increasing the risk of damage. Credit: Kim Olsen, SDSU.

On January 26, 1700, at about 9 p.m. local time, the Juan de Fuca plate beneath the ocean in the Pacific Northwest suddenly moved, slipping some 60 feet eastward beneath the North American plate in a monster quake of approximately magnitude 9, setting in motion large tsunamis that struck the coast of North America and traveled to the shores of Japan.

Since then, the earth beneath the region, which includes the cities of Vancouver, Seattle and Portland, has been relatively quiet. But scientists believe that earthquakes with magnitudes greater than 8, so-called “megathrust events,” occur along this fault on average every 400 to 500 years.

To help prepare for the next megathrust earthquake, a team of researchers led by seismologist Kim Olsen of San Diego State University (SDSU) used a supercomputer-powered “virtual earthquake” program to produce the first realistic three-dimensional simulations of the possible impacts of megathrust quakes on the Pacific Northwest region. Also participating in the study were researchers from the San Diego Supercomputer Center at UC San Diego and the U.S. Geological Survey.

What the scientists learned from this simulation, as reported in the Journal of Seismology, is not reassuring, particularly for residents of downtown Seattle.

With a rupture scenario beginning in the north and propagating toward the south along the 600-mile-long Cascadia Subduction Zone, the ground moved about 1 ½ feet per second in Seattle; nearly 6 inches per second in Tacoma, Olympia and Vancouver; and 3 inches per second in Portland, Oregon. Additional simulations, especially of earthquakes that begin in the southern part of the rupture zone, suggest that the ground motion under some conditions can be up to twice as large.

“We also found that these high ground velocities were accompanied by significant low-frequency shaking, like what you feel in a roller coaster, that lasted as long as five minutes – and that’s a long time,” said Olsen.

The long-duration shaking, combined with high ground velocities, raises the possibility that such an earthquake could inflict major damage on metropolitan areas, especially on high-rise buildings in downtown Seattle. Compounding the risks, Seattle, Tacoma, and Olympia, like Los Angeles to the south, sit on top of sediment-filled geological basins that are prone to greatly amplifying the waves generated by major earthquakes.

“One thing these studies will hopefully do is to raise awareness of the possibility of megathrust earthquakes happening at any given time in the Pacific Northwest,” said Olsen. “Because these events will tend to occur several hundred kilometers from major cities, the study also implies that the region could benefit from an early warning system that can allow time for protective actions before the brunt of the shaking starts.” Depending on how far the earthquake is from a city, early warning systems could give from a few seconds to a few tens of seconds to implement measures, such as automatically stopping trains and elevators.

Added Olsen, “The information from these simulations can also play a role in research into the hazards posed by large tsunamis, which can originate from such megathrust earthquakes like the ones generated in the 2004 Sumatra-Andaman earthquake in Indonesia.” One of the largest earthquakes ever recorded, the magnitude 9.2 Sumatra-Andaman event was felt as far away as Bangladesh, India, and Malaysia, and triggered devastating tsunamis that killed more than 200,000 people.

In addition to increasing scientific understanding of these massive earthquakes, the results of the simulations can also be used to guide emergency planners, improve building codes, and help engineers design safer structures, potentially saving lives and property in this region of some 9 million people.

Even with the large supercomputing and data resources at SDSC, creating “virtual earthquakes” is a daunting task. The computations to prepare initial conditions were carried out on SDSC’s DataStar supercomputer, and then the resulting information was transferred for the main simulations to the center’s Blue Gene Data supercomputer via SDSC’s advanced virtual file system or GPFS-WAN, which makes data seamlessly available on different – sometimes distant – supercomputers.

Coordinating the simulations required a complex choreography of moving information into and out of the supercomputer as Olsen’s sophisticated “Anelastic Wave Model” simulation code was running. Completing just one of several simulations, running on 2,000 supercomputer processors, required some 80,000 processor hours – equal to running one program continuously on your PC for more than 9 years!
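The arithmetic behind these figures is easy to check. The short Python sketch below takes the 80,000 processor hours and 2,000 processors quoted above and works out the equivalent time on a single processor. It also estimates a wall-clock time, but that figure assumes all 2,000 processors were used evenly for the whole run, which the article does not state; the function names are illustrative only.

```python
# Back-of-the-envelope check of the compute figures quoted in the article.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap days ignored


def single_processor_years(processor_hours: float) -> float:
    """Years needed if one processor did all the work, running nonstop."""
    return processor_hours / HOURS_PER_YEAR


def ideal_wall_clock_hours(processor_hours: float, processors: int) -> float:
    """Elapsed hours ASSUMING every processor is busy for the entire run."""
    return processor_hours / processors


years = single_processor_years(80_000)
wall_clock = ideal_wall_clock_hours(80_000, 2_000)

print(f"One processor, nonstop: about {years:.1f} years")
print(f"2,000 processors, ideal case: about {wall_clock:.0f} hours")
```

Under that even-use assumption, a single simulation would occupy the machine for roughly a day and a half, while the single-processor figure agrees with the "more than 9 years" comparison.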

“To solve the new challenges that arise when researchers need to run their codes at the largest scales, and data sets grow to great size, we worked closely with the earthquake scientists through several years of code optimization and modifications,” said SDSC computational scientist Yifeng Cui, who contributed numerous refinements to allow the computer model to “scale up” to capture a magnitude 9 earthquake over such a vast area.

In order to run the simulations, the scientists must recreate in their model the components that encompass all the important aspects of the earthquake. One component is an accurate representation of the earth’s subsurface layering, and how its structure will bend, reflect, and change the size and direction of the traveling earthquake waves. Co-author William Stephenson of the USGS worked with Olsen and Andreas Geisselmeyer, from Ulm University in Germany, to create the first unified “velocity model” of the layering for this entire region, extending from British Columbia to Northern California.

Another component is a model of the earthquake source from the slipping of the Juan de Fuca plate underneath the North American plate. Making use of the extensive measurements of the massive 2004 Sumatra-Andaman earthquake in Indonesia, the scientists developed a model of the earthquake source for similar megathrust earthquakes in the Pacific Northwest.

The sheer physical size of the region in the study was also challenging. The scientists included in their virtual model an immense slab of the earth more than 650 miles long by 340 miles wide by 30 miles deep (more than 7 million cubic miles) and used a computer mesh spacing of 250 meters to divide the volume into some 2 billion cubes. This mesh size allows the simulations to model frequencies up to 0.5 Hertz, which especially affect tall buildings.
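To see how the mesh figures fit together, the Python sketch below counts the 250-meter cubes that fit in a slab with the quoted dimensions. Because the article gives those dimensions as "more than" 650 by 340 by 30 miles, the count is a lower bound. The sketch also applies a common rule of thumb for grid-based wave simulations: the highest usable frequency is the slowest wave speed divided by the number of grid points per wavelength times the grid spacing. The values of 5 points per wavelength and a 625 m/s minimum wave speed are assumptions chosen for illustration, not figures from the article.

```python
# Rough consistency check of the mesh figures quoted in the article.
METERS_PER_MILE = 1609.344


def cells_along(length_miles: float, spacing_m: float) -> int:
    """Number of grid cells of the given spacing along one dimension."""
    return round(length_miles * METERS_PER_MILE / spacing_m)


def max_frequency_hz(min_wave_speed_m_s: float, spacing_m: float,
                     points_per_wavelength: float) -> float:
    """Rule-of-thumb upper frequency a grid can resolve."""
    return min_wave_speed_m_s / (points_per_wavelength * spacing_m)


SPACING_M = 250.0  # mesh spacing from the article

nx = cells_along(650, SPACING_M)  # length
ny = cells_along(340, SPACING_M)  # width
nz = cells_along(30, SPACING_M)   # depth
total_cells = nx * ny * nz

# ASSUMED values, for illustration only (not stated in the article).
ASSUMED_MIN_WAVE_SPEED_M_S = 625.0
ASSUMED_POINTS_PER_WAVELENGTH = 5.0
f_max = max_frequency_hz(ASSUMED_MIN_WAVE_SPEED_M_S, SPACING_M,
                         ASSUMED_POINTS_PER_WAVELENGTH)

print(f"Grid: {nx} x {ny} x {nz} = {total_cells / 1e9:.2f} billion cells")
print(f"Highest resolvable frequency (assumed inputs): {f_max} Hz")
```

The nominal dimensions give a little under 2 billion cells, in line with the "some 2 billion cubes" quoted above. Under the assumed inputs, halving the mesh spacing would double the resolvable frequency but multiply the cell count by eight.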

“One of the strengths of an earthquake simulation model is that it lets us run scenarios of different earthquakes to explore how they may affect ground motion,” said Olsen. Because the accumulated stresses or “slip deficit” can be released in either one large event or several smaller events, the scientists ran scenarios for earthquakes of different sizes.

“We found that the magnitude 9 scenarios generate peak ground velocities five to 10 times larger than those from the smaller magnitude 8.5 quakes.”

The researchers are planning to conduct additional simulations to explore the range of impacts that depend on where the earthquake starts, the direction of travel of the rupture along the fault, and other factors that can vary.

This research was supported by the National Science Foundation, the U.S. Geological Survey, the Southern California Earthquake Center, and computing time on an NSF supercomputer at SDSC.

More Information:

http://www.sdsc.edu

Earth Sciences (also referred to as Geosciences), which deals with basic issues surrounding our planet, plays a vital role in the area of energy and raw materials supply.

Earth Sciences comprises subjects such as geology, geography, geological informatics, paleontology, mineralogy, petrography, crystallography, geophysics, geodesy, glaciology, cartography, photogrammetry, meteorology and seismology, early-warning systems, earthquake research and polar research.

Newest articles

High-energy-density aqueous battery based on halogen multi-electron transfer

Traditional non-aqueous lithium-ion batteries have a high energy density, but their safety is compromised due to the flammable organic electrolytes they utilize. Aqueous batteries use water as the solvent for…

First-ever combined heart pump and pig kidney transplant

…gives new hope to patient with terminal illness. Surgeons at NYU Langone Health performed the first-ever combined mechanical heart pump and gene-edited pig kidney transplant surgery in a 54-year-old woman…

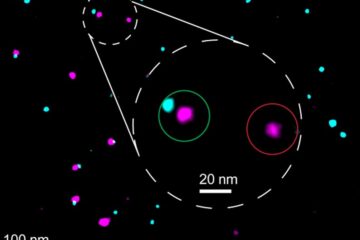

Biophysics: Testing how well biomarkers work

LMU researchers have developed a method to determine how reliably target proteins can be labeled using super-resolution fluorescence microscopy. Modern microscopy techniques make it possible to examine the inner workings…