Engineering graduate student narrows gap between high-resolution video and virtual reality

University of California at San Diego grad student Han Suk Kim is trying to narrow the performance gap between high-resolution video and virtual environments (VEs) so that VEs can one day be used for high-resolution video conferencing, video surveillance or even virtual movie theaters. Kim, a computer science and engineering Ph.D. student at the Jacobs School of Engineering, has developed an efficient “mipmap” algorithm that “shrinks” high-resolution video content so that it can be played interactively in VEs. He has also created several optimization solutions for sustaining a stable video playback frame rate, even when the video is projected onto non-rectangular VE screens.

Kim will showcase his work during the student poster session at the Jacobs School of Engineering's Research Expo on Thursday, Feb. 19. His research will be one of 240 grad student projects presented at Research Expo, which also includes technical breakout sessions by Jacobs School faculty and a luncheon featuring keynote speaker Christopher Scolese, NASA’s acting administrator.

According to Jürgen Schulze – Kim's advisor and a project scientist at the UC San Diego division of the California Institute for Telecommunications and Information Technology (Calit2) – the algorithm that Kim has developed will make it possible to display super high-resolution 4K video in Calit2’s virtual auditorium.

“This ability does not only add more realism to architectural models, but it also allows us to use the super high resolution environments to their capability, for instance, in Calit2’s virtual reality StarCAVE, which can display 34 million pixels,” Schulze said. “Another feature of Kim’s algorithm is that dozens of video streams can be displayed concurrently, in different places of the virtual environment, with a much smaller impact on overall rendering performance than previously possible.”

Kim derived his algorithm from a technique called “mipmapping,” which is widely used by computer graphics experts in computer games, flight simulations and other 3D imaging systems. The term itself is indicative of its approach: the letters “MIP” are short for the Latin phrase multum in parvo, which means “much in a small space.” By using mipmapping to reduce the level of detail (LOD) and downscale high-resolution media datasets (like Calit2's 4K video of a tornado simulation, which is almost 45 gigabytes in size), Kim was able to stream the video in real time at 25 frames per second. By adding various optimizations to keep frame and rendering rates constant, Kim was able to rotate, zoom in and otherwise manipulate the video playback screen to make the experience fully interactive.
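For readers unfamiliar with mipmapping, the idea can be sketched in a few lines of Python. This is only an illustration of the general technique, not Kim's implementation: the function names are made up for this example, and the 4096 x 2160 frame size is an assumption, since the article does not state the video's exact dimensions.

```python
def mip_chain(width, height):
    """Return the (width, height) of every mipmap level.

    Level 0 is the full-resolution image; each following level halves
    both dimensions (never below 1) until a 1x1 image is reached.
    """
    levels = [(width, height)]
    while width > 1 or height > 1:
        width = max(1, width // 2)
        height = max(1, height // 2)
        levels.append((width, height))
    return levels


def chain_pixels(levels):
    """Total number of pixels stored across all mipmap levels."""
    return sum(w * h for w, h in levels)


# Assumed frame size for illustration only.
levels = mip_chain(4096, 2160)
base_pixels = 4096 * 2160
overhead = chain_pixels(levels) / base_pixels
```

Because each level holds roughly a quarter of the pixels of the one before it, storing the whole chain costs only about a third more than the original frame, while a distant or small video screen can be drawn from a level that is a small fraction of the full size.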

“In interactive computer graphic applications, each frame takes a different time to render, depending on many different factors, such as the amount of data to be loaded, the size of the rendered screen and cache effect in various places of a computer system,” Kim explained. “One of the ideas behind my work is to apply the mipmap approach for multiple frames of video data. The algorithm I developed automatically calculates the correct LOD mipmap scaling depending on the distance away from the eye and the area of each tile shown on screen. We then use the processed data for playback. So I'm not really editing the video. I'm only touching it in terms of resolution.”
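The selection step Kim describes, picking a level of detail from viewing distance and on-screen tile area, might look roughly like the sketch below. It is a simplified illustration under stated assumptions, not the algorithm from Kim's work: it assumes a pinhole camera and a tile facing the viewer, and the names `projected_pixels`, `select_lod` and `focal_px` are hypothetical.

```python
import math


def projected_pixels(tile_area, distance, focal_px):
    """Approximate on-screen area, in pixels, of a tile facing the viewer.

    Assumes a pinhole camera: the projected area shrinks with the
    square of the distance. focal_px is the focal length in pixels.
    """
    return tile_area * (focal_px / distance) ** 2


def select_lod(tile_texels, screen_pixels, max_level):
    """Pick the mipmap level whose resolution best fits the screen area.

    Each level has a quarter of the texels of the previous one, so the
    level is half the base-2 logarithm of the texel-to-pixel ratio.
    """
    if screen_pixels <= 0:
        return max_level          # tile not visible: coarsest level
    ratio = tile_texels / screen_pixels
    if ratio <= 1.0:
        return 0                  # tile is magnified: full resolution
    level = int(math.floor(0.5 * math.log2(ratio)))
    return min(level, max_level)


# A 1024x1024-texel tile viewed from two distances (illustrative values).
near = select_lod(1024 * 1024, projected_pixels(1.0, 1.0, 512.0), 10)
far = select_lod(1024 * 1024, projected_pixels(1.0, 2.0, 512.0), 10)
```

In this sketch, doubling the viewing distance quarters the projected area, so the tile drops by exactly one mipmap level.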

Although mipmap is an efficient method for coping with large datasets, there has to be a good playback system to support it, Kim said.

“Our approach reduces the memory required to display high-resolution images, depending on distance and visual perspective,” he said. “If the area is big and close to the viewer's face, the video is streamed at a high resolution; if it’s small and far away from the viewer's face, it's streamed at a low resolution.

“Another issue is how to read a lot of data efficiently,” Kim added. “If I want to render a particular frame in the VE, the data has to already be in the system and I have to read it from memory and copy it again to the graphics processor. For the VE, we used pre-fetching to read the data in advance, and to speed up the reading process we used asynchronous input-output. If I want to play the video with audio, it has to be synchronized with the sounds, so it has to be played at the correct speed. This means I have to measure the speed of playing, and if it’s too slow, I have to jump a couple of frames.”
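The two playback ideas in Kim's description, reading frames ahead of time and skipping frames when playback falls behind the audio clock, can be sketched as follows. This is a minimal illustration, not Kim's playback system: background threads stand in for whatever I/O mechanism the real system uses, and `advance`, `Prefetcher` and `load_frame` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor


def advance(last_frame, elapsed_s, fps=25.0):
    """Return (frame_to_show, frames_skipped) for the current clock time.

    The frame is chosen from elapsed time, so slow rendering causes
    frames to be skipped instead of letting video drift behind audio.
    """
    target = max(int(elapsed_s * fps), last_frame)  # never step backward
    skipped = max(0, target - last_frame - 1)
    return target, skipped


class Prefetcher:
    """Loads upcoming frames on background threads before they are needed."""

    def __init__(self, load_frame, depth=4):
        self._load = load_frame            # hypothetical: index -> frame data
        self._depth = depth                # how many frames to read ahead
        self._pool = ThreadPoolExecutor(max_workers=2)
        self._pending = {}                 # frame index -> Future

    def get(self, index):
        # Schedule reads for the requested frame and the frames after it.
        for i in range(index, index + self._depth + 1):
            if i not in self._pending:
                self._pending[i] = self._pool.submit(self._load, i)
        # Drop frames that playback has already skipped past.
        for i in [k for k in self._pending if k < index]:
            self._pending.pop(i).cancel()
        return self._pending.pop(index).result()

    def close(self):
        self._pool.shutdown()


# Example: a stand-in loader that just returns a label for each frame.
player = Prefetcher(lambda i: f"frame-{i}", depth=2)
frame, skipped = advance(last_frame=10, elapsed_s=0.5)
data = player.get(frame)
player.close()
```

Deriving the frame index from the clock, instead of counting rendered frames, is what keeps the picture aligned with the soundtrack when an individual frame takes too long to draw.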

Kim's research has implications for another important field of research: biomedicine. UC San Diego’s National Center for Microscopy and Imaging Research (NCMIR) has enlisted Kim to help visualize large datasets culled from brain cells, research that might ultimately play a part in the digital “Whole Brain Catalog” envisioned by UC San Diego’s Mark Ellisman, a professor of neuroscience and bioengineering.

“Visualizing cellular datasets and maximizing high-resolution video playback share similar problems,” Kim said. “If you have 10 gigs of data but only 2 gigs of memory, how do you achieve that? NCMIR has a lot of datasets that are usually made up of very high-resolution microscope images, and all the optimization solutions that we used in video playback can also be applied to the rendering system of light microscope and electron microscope data. The next step would be something called ‘transfer function design,’ which adds color to the 3D datasets so scientists can compare them.”

Kim will also present his preliminary research results as a poster at this year’s Institute of Electrical and Electronics Engineers (IEEE) Virtual Reality conference in Lafayette, La., March 14-18.

More Information:

http://www.ucsd.eduAll latest news from the category: Communications Media

Engineering and research-driven innovations in the field of communications are addressed here, in addition to business developments in the field of media-wide communications.

innovations-report offers informative reports and articles related to interactive media, media management, digital television, E-business, online advertising and information and communications technologies.

Newest articles

Superradiant atoms could push the boundaries of how precisely time can be measured

Superradiant atoms can help us measure time more precisely than ever. In a new study, researchers from the University of Copenhagen present a new method for measuring the time interval,…

Ion thermoelectric conversion devices for near room temperature

The electrode sheet of the thermoelectric device consists of ionic hydrogel, which is sandwiched between the electrodes to form, and the Prussian blue on the electrode undergoes a redox reaction…

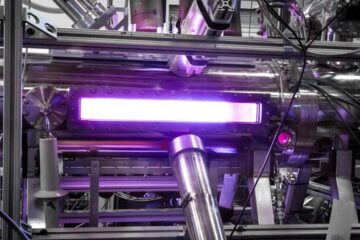

Zap Energy achieves 37-million-degree temperatures in a compact device

New publication reports record electron temperatures for a small-scale, sheared-flow-stabilized Z-pinch fusion device. In the nine decades since humans first produced fusion reactions, only a few fusion technologies have demonstrated…